Research on abusive language detection earns Outstanding Paper Award

In today's digital age, social media platforms have become an integral part of our lives. They provide a space for communication, information sharing, and community building.

However, social platforms do come with their fair share of challenges, one of the most pressing being the rampant use of abusive language. Detecting and addressing abusive language on these platforms is crucial for maintaining a safe and healthy online environment.

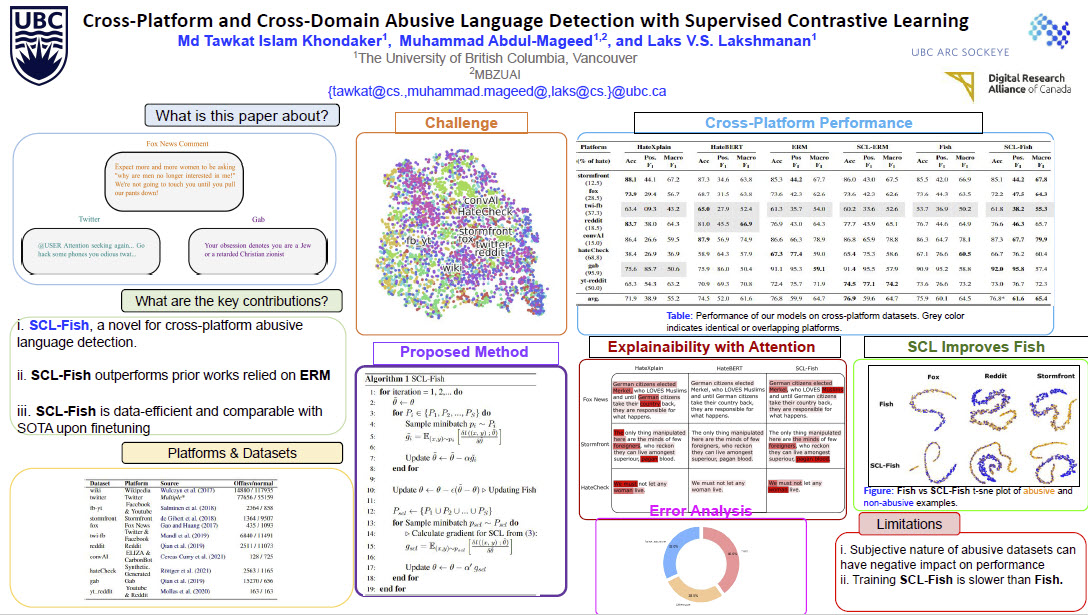

UBC Computer Science researchers Md Tawkat Islam Khondaker (grad student), Muhammad Abdul-Mageed (associate member), and Laks V.S. Lakshmanan (professor) have proposed a solution in their paper, Cross-Platform and Cross-Domain Abusive Language Detection with Supervised Contrastive Learning. The paper was recognized in July 2023 with a prestigious Outstanding Paper Award at the Workshop on Online Abuse and Harms (WOAH 2023), co-located with ACL 2023.

“Given the impact of social media and how it affects and regulates our daily life, we wanted to identify and alleviate one of the most detrimental sides of it,” said Dr. Lakshmanan. “That is how we decided to work on abusive language detection because this problem needs to be addressed to create a better environment for online discourse and dialogue.”

They unveiled a novel algorithmic solution called SCL-Fish, which detects abusive language across various social media platforms. In simple terms, SCL-Fish is a tool that helps identify and flag abusive language in online conversations. It consists of two key components: “Fish” and “supervised contrastive learning” (SCL). “Fish” seeks platform-agnostic features so that the model remains applicable across platforms, while SCL separates the representations of abusive and normal language regardless of the platform they originate from. SCL does this by pulling together the representations of examples that share a label and pushing them away from examples of the opposite class. As a result, SCL-Fish detects abusive language on unseen platforms better than previous methods.
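To make the idea of supervised contrastive learning more concrete, here is a minimal sketch of a supervised contrastive loss in plain Python. It is an illustration of the general technique, not the researchers' implementation: the function name `supervised_contrastive_loss`, the `temperature` value, and the two-dimensional toy vectors (standing in for sentence representations from a model such as BERT) are all assumptions made for this example.

```python
import math


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Average supervised contrastive loss over anchors with at least one positive.

    Hypothetical helper for illustration; `temperature` is an assumed value.
    """
    # L2-normalize each vector so dot products become cosine similarities.
    normed = []
    for vec in embeddings:
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        normed.append([x / norm for x in vec])

    n = len(normed)
    total, counted = 0.0, 0
    for i in range(n):
        # Positives: other examples that share the anchor's label.
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue
        # Temperature-scaled similarity between the anchor and every other example.
        sims = {
            j: sum(a * b for a, b in zip(normed[i], normed[j])) / temperature
            for j in range(n)
            if j != i
        }
        # Log of the softmax denominator, computed stably.
        max_sim = max(sims.values())
        log_denom = max_sim + math.log(
            sum(math.exp(s - max_sim) for s in sims.values())
        )
        # Mean negative log-probability of picking a positive for this anchor.
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        counted += 1
    return total / counted if counted else 0.0


# Toy data: label 1 = abusive, label 0 = normal (made-up vectors).
labels = [1, 1, 0, 0]
separated = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]

loss_separated = supervised_contrastive_loss(separated, labels)
loss_mixed = supervised_contrastive_loss(mixed, labels)
print(loss_separated, loss_mixed)
```

In this toy setup the loss is lower when same-label examples already sit close together (`separated`) than when each example is nearest to one from the opposite class (`mixed`), which is the behaviour training would try to encourage.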

"Our ultimate goal is to alleviate toxicity from social media platforms."

~ Dr. Laks V.S. Lakshmanan

Machine Learning Backbone: BERT

Machine learning plays a fundamental role in the functioning of SCL-Fish. The researchers utilize a robust transformer-based model known as BERT as the backbone for their algorithm. BERT, short for Bidirectional Encoder Representations from Transformers, has become a cornerstone in the field of natural language processing. It serves as the foundation for many deep learning models and large language models. SCL-Fish leverages BERT's capabilities to classify and identify abusive language across various social media platforms.

The effectiveness of SCL-Fish was put to the test on eight different, previously unseen platforms. The results are impressive. “SCL-Fish outperforms the previous state-of-the-art models by a margin of 0.7% - 10.2%,” Dr. Lakshmanan said. This significant enhancement in performance demonstrates the algorithm's ability to adapt to diverse online environments and effectively combat abusive language.

Is the investment worthwhile?

Although SCL-Fish usually requires slightly more time for training compared to its predecessor "Fish," the benefits far outweigh this minor overhead. The one-time fine-tuning process of SCL-Fish takes 1.2 times longer than Fish but yields a substantial improvement in performance. Additionally, there is no need to continually fine-tune the model as new social media platforms emerge. Given the importance of detecting and addressing abusive language, the extra investment in time and resources is well justified.

One remarkable aspect of SCL-Fish is its accessibility. The researchers have made their source code fully open-source, allowing anyone interested to study and use their model. While some datasets used require permission from their creators, the model itself is available for public use.

Future directions

The researchers' ultimate goal extends beyond merely detecting abusive language. They aim to create a safer and healthier online environment by addressing the issue of toxic language. Dr. Lakshmanan explained, “Our ultimate goal is to alleviate toxicity from social media platforms. So the next challenge is to correct the toxic language, i.e., detoxify it. Detoxification is necessary because just deleting anything that is deemed toxic can hurt the core value of freedom of speech. Therefore, we need to alter the toxic language such that the original message can be conveyed in a non-toxic way as much as possible.” This comprehensive approach aims to ensure a more balanced and constructive online discourse.

The award is a source of great excitement and pride for the research team. “Our work has received 5 out of 5 scores from all the reviewers, which is very rare,” Dr. Lakshmanan said. This acknowledgment underscores the significance of their contribution to the field of online safety and encourages further research and attention to the issue. “We believe this is a big step toward making social media a safe and healthy environment. The proliferation of abusive language across social media is really alarming. We find the award humbling and gratifying, and we hope the attention bestowed on this research motivates other researchers to continue further work in this area.”

The computer scientists behind SCL-Fish have embarked on a mission to transform the digital landscape by combatting abusive language. Their innovative approach, powered by machine learning and supervised contrastive learning, as well as ongoing research efforts, all represent a beacon of hope in the fight against online toxicity. The journey toward a safer, more inclusive digital future continues, one algorithm at a time.

The poster presentation below provides an illustrated view of their research.