Laks V.S. Lakshmanan and co-authors publish two significant papers on misinformation

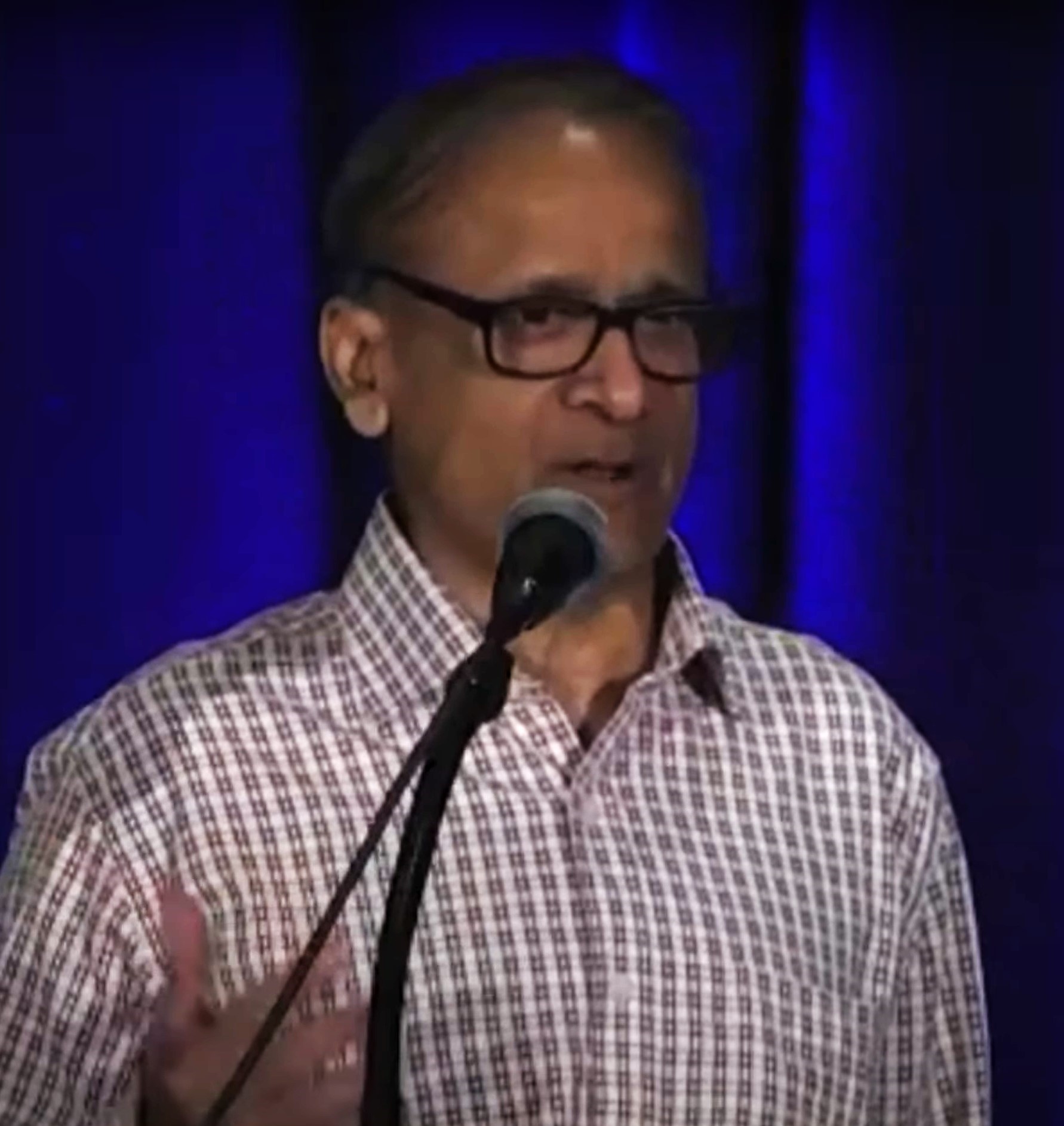

UBC computer science professor Dr. Laks V.S. Lakshmanan and his co-authors recently published two papers on the topic of ‘misinformation,’ otherwise known as ‘fake news,’ and Dr. Lakshmanan gave the keynote on the subject at SIGMOD 2022.

Since the advent of social media, most of us can appreciate how damaging fake news can be and how many people it can reach in a very short time.

In a study published in March 2018, researchers from the Massachusetts Institute of Technology found several unsettling things: humans, rather than bots, were mainly responsible for spreading fake news; false news stories were 70% more likely to be retweeted than true stories; true stories took around six times longer to reach 1,500 people; and true stories rarely spread beyond 1,000 people, while the most popular false news could reach up to 100,000.

Dr. Lakshmanan and his colleagues from the Data Management & Mining (DMM) group have been studying this phenomenon, and their papers propose solutions to it.

Consider the facts.

Is climate change a hoax?

Was there widespread voter fraud in the 2020 US presidential election?

Do Covid-19 vaccines have harmful side effects?

Dr. Lakshmanan says questions like these relate to assertions that can be fact-checked by analyzing available data: climate science data in the first case, election data in the second, and clinical trial results in the third. In other words, the answer to each of these questions is a simple True or False or, at most, a degree of truth or falsehood in the underlying claim. He refers to these as factual questions.

Consider these value questions.

Should ownership of firearms be restricted?

Should abortion be banned outright?

Lakshmanan says, “These claims are somehow related to value judgments: there is no truth value that can be meaningfully associated with the claims underlying the last two questions, only value judgments or recommendations.” He refers to these questions as value questions.

Societal polarization and misinformation

“All questions have great relevance for society,” he says. “There is considerable potential for misinformation (popularly known as fake news) surrounding the factual questions. In fact, all three of the factual questions have been the subject of tremendous misinformation campaigns with grave consequences.”

He cites a 2021 study from the Johns Hopkins Center for Health Security estimating that misinformation surrounding Covid-19 costs $50 million to $300 million each day.

“As for value questions, they are often related to public policy where it is extremely important to engage opposing sides in a constructive and civil discourse, in order that a policy that benefits most, if not all, can be designed,” Laks says. “Filter bubbles, by amplifying polarization in the society around value questions, make it challenging to have civil public discourse effectively engaging opposing sides.”

He adds that filter bubbles can also lead to one-sided policy decisions. For example, a ‘pro-painkiller’ echo chamber that existed during the US drug epidemic has been found to have influenced public policy. Together, misinformation and filter bubbles can erode public trust in institutions, with dire consequences.

Laks and his co-authors, including colleagues from the DMM research group, developed solutions to both problems, misinformation and filter bubbles, in two papers accepted at the International Conference on Very Large Data Bases (VLDB 2022):

Misinformation Mitigation under Differential Propagation Rates and Temporal Penalties

Michael Simpson, Farnoosh Hashemi, Laks V.S. Lakshmanan; Proc. VLDB Endow. 15(10): 2216-2229 (2022)

Finding Locally Densest Subgraphs: A Convex Programming Approach

Chenhao Ma, Reynold Cheng, Laks V. S. Lakshmanan, Xiaolin Han; Proc. VLDB Endow. 15(11): 2719-2732 (2022)

To counter misinformation, the first paper describes a strategy for launching a counter-campaign that exposes as many users as possible to the truth. In particular, the focus is on those social media users who are in the “line of fire” of misinformation. The goal is to expose them to the truth either well before, or not much later than, the falsehood reaches them. In this sense, it is a race between truth and falsehood, and the goal is to win the race for truth.

The winning truth

Designing such strategies is challenging on many levels. The model of information propagation must account for the different rates at which truth and falsehood have been observed to spread in practice. Users also react differently to incoming information: some react instinctively and forward it to their social media peers almost instantly, while others take time to read the content and reflect on it before deciding. This decision time can depend on the length of the content. Finally, during this ‘decision window,’ some users may weigh conflicting content that reaches them and then decide which content, if any, to forward. None of the models previously developed by others account for these factors.
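To illustrate how differential propagation rates and decision windows interact, here is a minimal event-driven simulation. It is a hypothetical sketch, not the model from the paper: the function name `simulate_race`, the fixed per-campaign edge delays, the per-user decision times, and the rule that a user forwards the truth whenever it arrives within their decision window are all assumptions made for illustration.

```python
import heapq
from typing import Dict, List, Set


def simulate_race(graph: Dict[str, List[str]],
                  fake_seeds: Set[str],
                  true_seeds: Set[str],
                  edge_delay: Dict[str, float],
                  decision_time: Dict[str, float]) -> Dict[str, str]:
    """Hypothetical race model: return the campaign ("fake"/"true") each reached user forwards."""
    events: list = []  # min-heap of (time, insertion order, kind, user, campaign)
    order = 0

    def push(t: float, kind: str, user: str, campaign: str) -> None:
        nonlocal order
        heapq.heappush(events, (t, order, kind, user, campaign))
        order += 1  # unique counter breaks ties between simultaneous events

    for s in fake_seeds:
        push(0.0, "arrive", s, "fake")
    for s in true_seeds:
        push(0.0, "arrive", s, "true")

    seen: Dict[str, Set[str]] = {}   # content received during each user's open window
    decided: Dict[str, str] = {}     # final choice per user
    while events:
        t, _, kind, user, campaign = heapq.heappop(events)
        if user in decided:
            continue  # decision already made; later content is ignored
        if kind == "arrive":
            if user not in seen:
                # First content opens the decision window (0 = instinctive user).
                seen[user] = set()
                push(t + decision_time.get(user, 0.0), "decide", user, "")
            seen[user].add(campaign)
        else:
            # Assumed rule: truth wins if it arrived anywhere in the window.
            choice = "true" if "true" in seen[user] else "fake"
            decided[user] = choice
            # Each campaign spreads along edges at its own (assumed) speed.
            for v in graph.get(user, []):
                push(t + edge_delay[choice], "arrive", v, choice)
    return decided
```

For example, if a fake seed and a true seed both point at one user `u`, and falsehood travels faster than truth, giving `u` a long decision window lets the slower truth arrive in time and win, while a short window lets the falsehood through to `u`'s followers.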

On the computational side, designing strategies that ‘save’ as many social media users as possible from falsehood is computationally very hard. The research team has developed efficient approximation algorithms with provable quality guarantees for this problem and demonstrated their effectiveness through experiments.
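A common way to obtain a provable guarantee for this kind of ‘save as many users as possible’ objective is greedy selection: when the objective is monotone and submodular, picking the seed with the largest marginal gain at each step achieves at least a (1 - 1/e) fraction of the optimum. The sketch below illustrates that general technique, not the team's algorithm. It assumes a hypothetical precomputed map `saves` from each candidate truth seed to the users it would reach before the falsehood, which turns the problem into maximum coverage.

```python
from typing import Dict, List, Optional, Set


def greedy_seed_selection(saves: Dict[str, Set[str]], k: int) -> List[str]:
    """Pick up to k truth seeds that greedily maximize the number of distinct users saved.

    saves[s] is a hypothetical precomputed set of users that seed s would reach
    before the falsehood does. The size of the union is monotone and submodular,
    so this greedy choice is within a (1 - 1/e) factor of the best k seeds.
    """
    chosen: List[str] = []
    covered: Set[str] = set()
    for _ in range(k):
        best: Optional[str] = None
        best_gain = 0
        for s, users in saves.items():
            if s in chosen:
                continue
            gain = len(users - covered)  # marginal gain: newly saved users only
            if gain > best_gain:
                best, best_gain = s, gain
        if best is None:
            break  # no remaining seed saves anyone new
        chosen.append(best)
        covered |= saves[best]
    return chosen
```

In a realistic setting, the saved sets would have to be estimated, for example by repeated simulation of the propagation model, and variants such as lazy greedy avoid recomputing every marginal gain at each step.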

Communications in social media can be modeled as graphs, with users represented as vertices and communications as edges. It has been observed that filter bubbles and echo chambers in social media typically correspond to some of the densest subgraphs in such graphs. Through a pair of papers, published at SIGMOD 2022 and VLDB 2022, the team developed efficient algorithms for identifying the densest subgraphs in input graphs containing tens of millions of users and from hundreds of millions to over a billion communication edges.

Identifying echo chambers is an inherently exploratory exercise: one needs to examine several dense subgraphs before finding the one that corresponds to a genuine filter bubble. The team's approach makes it possible to efficiently find the top-k densest subgraphs.
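To make the idea of density and of exploring several dense subgraphs concrete, here is a minimal sketch built on Charikar's classic greedy peeling algorithm, a 2-approximation for the densest subgraph under the density |E|/|V|. This is a swapped-in textbook technique, not the team's convex-programming method. The top-k loop (`top_k_dense_subgraphs`, a hypothetical name) is a naive heuristic that reports vertex-disjoint dense subgraphs, which is simpler than the notion of locally densest subgraphs in the VLDB paper.

```python
from typing import Dict, Iterable, List, Set, Tuple

Graph = Dict[int, Set[int]]


def peel_densest(adj: Graph) -> Tuple[Set[int], float]:
    """Greedy peeling: repeatedly drop a minimum-degree vertex and remember the
    intermediate subgraph with the highest |E|/|V| density."""
    alive = set(adj)
    deg = {u: len(adj[u]) for u in alive}
    m = sum(deg.values()) // 2  # number of edges among alive vertices
    best = set(alive)
    best_density = m / len(alive) if alive else 0.0
    while alive:
        u = min(alive, key=lambda x: (deg[x], x))  # O(V) scan; a bucket queue is faster
        alive.remove(u)
        m -= deg[u]  # deg[u] counts only neighbours that are still alive
        for v in adj[u]:
            if v in alive:
                deg[v] -= 1
        if alive and m / len(alive) > best_density:
            best, best_density = set(alive), m / len(alive)
    return best, best_density


def top_k_dense_subgraphs(edges: Iterable[Tuple[int, int]],
                          k: int) -> List[Tuple[Set[int], float]]:
    """Hypothetical helper: find up to k vertex-disjoint dense subgraphs by
    peeling, removing each result, and searching what is left."""
    adj: Graph = {}
    for u, v in edges:
        if u != v:  # ignore self-loops; the sets drop duplicate edges
            adj.setdefault(u, set()).add(v)
            adj.setdefault(v, set()).add(u)
    results: List[Tuple[Set[int], float]] = []
    for _ in range(k):
        if not adj:
            break
        sub, density = peel_densest(adj)
        if density == 0:
            break  # only isolated vertices remain
        results.append((sub, density))
        # Delete the vertices just reported (and their edges) before the next round.
        adj = {u: nbrs - sub for u, nbrs in adj.items() if u not in sub}
    return results
```

On a graph made of a 4-clique joined by a single edge to a triangle, the first round returns the 4-clique (density 1.5) and the second returns the triangle (density 1.0). The linear scan keeps the sketch short; graphs with billions of edges, as described above, call for much more efficient algorithms.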

Significantly, the algorithms they have developed are several orders of magnitude faster than the previous state of the art. In this way, their research is helping to get the truth out faster and to more people, slowing down and diminishing the harmful effects of fake news.

"A lie gets halfway around the world before the truth has a chance to get its pants on."

~ Often attributed to Winston Churchill

At SIGMOD 2022, Laks and his co-authors Chenhao Ma, Yixiang Fang, Reynold Cheng and Xiaolin Han had this paper accepted: A Convex-Programming Approach for Efficient Directed Densest Subgraph Discovery. SIGMOD Conference 2022: 845-859.

Here is the video of Dr. Lakshmanan's keynote at the SIGMOD 2022 conference.

Dr. Lakshmanan acknowledges the contributions of his students and postdocs: “I’d like to acknowledge Michael Simpson, my former postdoc who is currently a research associate at UVic, and Farnoosh Hashemi, my MSc student, who has been spectacular in making key contributions to 2-3 projects completely outside her thesis topic.”

He also acknowledges Chenhao Ma, who recently graduated with a PhD from the University of Hong Kong: “He has been at the forefront of much of our research on densest subgraphs.” He further thanks Xiaolin Han, a current PhD student at the University of Hong Kong; Reynold Cheng, a professor at the University of Hong Kong; and Yixiang Fang, another PhD graduate of the University of Hong Kong who is currently a professor at The Chinese University of Hong Kong, Shenzhen.

Dr. Lakshmanan has clearly made significant strides in helping to combat misinformation. With this work, and more to come from him and others, fake news can expect a real run for its money.