NeurIPS’22: Collaborative effort from UBC and Google yields paper

This is part 7 of a series featuring some of the UBC CS department’s accepted papers for NeurIPS 2022 (conference runs Nov. 29 – Dec. 9).

Assistant Professor Kwang Moo Yi collaborated with two of his PhD students and two researchers from Google on a paper that is being presented at NeurIPS, the premier conference in AI and Machine Learning (ML):

TUSK: Task-Agnostic Unsupervised Keypoints

Yuhe Jin (UBC), Weiwei Sun (UBC), Jan Hosang (Google), Eduard Trulls (Google), Kwang Moo Yi (UBC)

Google and UBC: hand in hand

The five researchers came together through a Master Sponsored Research Agreement, spearheaded by Dr. Yi in 2020, which serves as a university-wide agreement for research that can be conducted in concert with Google.

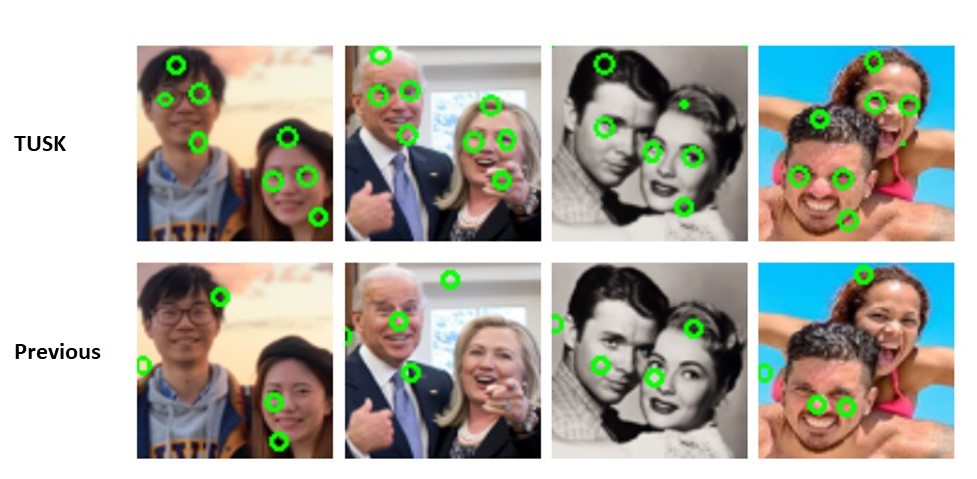

Under the grant, the team investigated a way to identify multiple keypoints within an image, where a keypoint might capture some kind of specific feature ranging from a human elbow, to a numeric digit, to an abstract geometric shape. What’s also new is the ability to handle many keypoints of the same kind within an image, in contrast to the typical assumption that there is only one of a particular object in an image scene.

Keypoints are spatial locations, or points in the image, that define what is interesting or what stands out in the image.

For example, a group shot of people will contain multiple faces, with fairly similar sets of eyes, ears or the like. Dr. Yi said, “Regardless of how many of these interesting key points there are, we would want to abstract these images into key points. By abstracting, I mean to just have keypoints in certain locations and some associated signals. Then you want to be able to reconstruct the image back.”

He explains further that abstraction allows a model to ignore the small differences that arise across different instances of images. For example, the type of clothing a person is wearing in an image might interfere with an AI trying to understand the pose, whereas a model that relies on keypoints only needs to recognize where they are located. As long as the keypoints are correct, the outcome will be accurate.
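To make the idea of several keypoints of the same kind more concrete, here is a minimal Python sketch. It is not taken from the TUSK paper: the function name `find_keypoints`, the toy `heatmap`, and the threshold value are all hypothetical, and picking local maxima from a score map is simply one common way to turn "interesting" locations into a list of points. It shows that two similar features in one image can each yield their own keypoint.

```python
def find_keypoints(heatmap, threshold=0.5):
    """Return (row, col, score) for each strict local maximum at or above threshold.

    Illustrative assumption: `heatmap` is a 2D list of scores in which higher
    values mark more "interesting" image locations.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    keypoints = []
    for r in range(rows):
        for c in range(cols):
            score = heatmap[r][c]
            if score < threshold:
                continue
            # Collect the up-to-8 surrounding scores, clipped at the borders.
            neighbours = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            # Keep the location only if it beats every neighbour.
            if all(score > n for n in neighbours):
                keypoints.append((r, c, score))
    return keypoints


# Toy score map with two similar peaks, standing in for two similar features
# (for example, two faces in a group shot).
heatmap = [
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.1, 0.9, 0.1, 0.0, 0.0],
    [0.0, 0.1, 0.0, 0.2, 0.0],
    [0.0, 0.0, 0.2, 0.8, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.0],
]

keypoints = find_keypoints(heatmap)
print(keypoints)  # [(1, 1, 0.9), (3, 3, 0.8)]
```

Each tuple pairs a location with a score, a very rough stand-in for the "keypoints in certain locations and some associated signals" described above. The sketch leaves out the learning and image reconstruction that the actual framework relies on.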

Dr. Yi said, “Essentially, we are trying to do the simplest thing possible. It’s kind of like zipping an image: destroying it and bringing it back. TUSK is a framework that allows the use of this artificial task to learn keypoints without associating with any particular task. Thus, it’s task-agnostic.”

In the paper, the team reports experiments on a variety of tasks: multiple-instance detection and classification, object discovery, and landmark detection, all unsupervised. The results are on par with state-of-the-art methods that only handle single objects.

Congratulations to Dr. Yi and his collaborators for their accomplishment with TUSK.

Learn more about Kwang Moo Yi and his research.

Learn more about the UBC Computer Vision group.

In total, the department has 13 accepted papers by 9 professors at the NeurIPS conference. Read more about the papers and their authors.