Data

Download links (GoogleUrban datset is password protected, please contact organizer at image-matching@googlegroups.com for the password):

- PragueParks Test Set (md5sum: acaf216ce0cf093b2cd740e1b2c60b78)

- PragueParks Test Set with Ground Truth (md5sum: 3470f721f720d9c0548a56f2d8d5e95e)

- PragueParks Validation Set with Ground Truth (md5sum: 541bc9cf892fd9241dacbd2cfbc73af5)

- GoogleUrban Test Set (available only for the duration of the 2021 challenge)

- GoogleUrban Validation Set with Ground Truth (available only for the duration of the 2021 challenge)

- Phototourism Test Set (md5sum: e437b756b231eecb505a97e345e9bd9a)

- Phototourism Test Set with Ground Truth (md5sum: 2adf7ee780dd28082a8db2d5e4a9d933)

- Phototourism Validation Set with Ground Truth (md5sum: 3602292142140a6d0d78e58bae1f9c82)

- Photourism Training Set with Ground Truth

The basic principle behind our approach is to obtain dense and accurate 3D reconstructions from large collections of images with off-the-shelf Structure from Motion (SfM), such as Colmap or RealityCapture. This provides us with (pseudo-) ground truth poses and, optionally, densified depth maps. We then subsample these image collections into smaller subsets (e.g. pairs of images for stereo, or 5 to 25 images for multiview reconstruction with SfM) and evaluate different methods against the "ground-truth". We publish up to 100 images per scene, after subsampling.

This year we have 3 datasets: "Phototourism", "PragueParks" and "GoogleUrban". Each of them is obtained in a different way, which we will describe below. The "Phototourism" dataset is unchanged from the 2020 challenge, and comes with a training set, along with validation data with a public ground truth, and test data with a private ground truth. PragueParks and GoogleUrban provide validation data, but not training data. Our experiments show that the optimal hyperparameters (such as the RANSAC threshold or the ratio test) vary among datasets, which is why we encourage participants to tune their methods on the each of 3 validation sets, separately, before submitting.

The PragueParks dataset

The PragueParks dataset contains images from video sequences captured by the organizers with an iPhone 11, in 2021. The iPhone 11 has two cameras, with normal and wide lenses, both of which were used. Note that while the video is high quality, some of the frames suffer from motion blur. These videos were then processed by the commercial 3D reconstruction software RealityCapture, which is orders of magnitude faster than COLMAP, while delivering a comparable output in terms of accuracy. Similarly to we did for the "PhotoTourism" dataset, this data is then subsampled in order to generate the subsets used for evaluation.

The dataset contains small-scale scenes like tree, pond, wooden and metal sculptures with different level of zoom, lots of vegetation, and no people. The distribution of its camera poses differs from Phototourism. Learned detectors like SuperPoint and R2D2 work better on this subset than Difference-of-Gaussians used in a SIFT: baselines will be released soon.

| Validation scene | Num. images | Num. 3D points |

|---|---|---|

| Wooden Lady | 2049 | 1484682 |

| Total | 2k | 1.5M |

| Test scenes | Num. images | Num. 3D points |

|---|---|---|

| Tree | 614 | 578252 |

| Pond | 3654 | 1435656 |

| Lizard | 264 | 419477 |

| Total | 4532 | 2.4M |

The GoogleUrban dataset

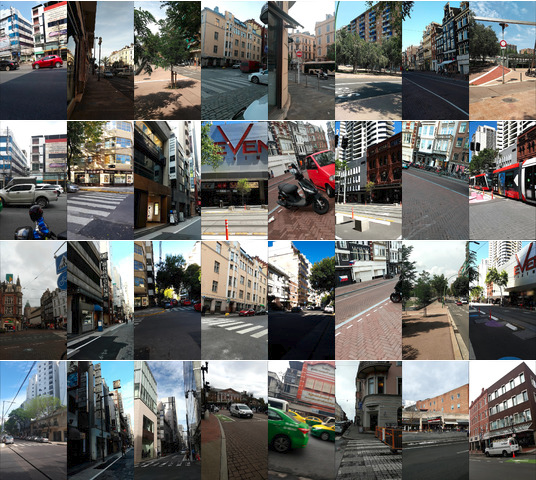

The GoogleUrban dataset contains images used by Google to evaluate localization algorithms, such as those in Google's Visual Positioning System, which powers Live View on millions on mobile devices. They are obtained from videos collected from different cell phones, on many countries all over the world, often years apart. They contain poses, but not depth maps. Please note that due to legal reasons, this data is released with a restricted license, and must be deleted by the end of the challenge.

| Validation scenes | Num. images |

|---|---|

| Edinburgh | 75 |

| Mexico DF | 75 |

| Vancouver | 74 |

| Test scenes | Num. images |

|---|---|

| Amsterdam | 64 |

| Bangkok | 75 |

| Barcelona | 75 |

| Buenos Aires | 64 |

| Cambridge (MA) | 75 |

| Cannes | 75 |

| Chicago | 75 |

| Helsinki | 75 |

| Madrid | 75 |

| Mountain View | 75 |

| New Orleans | 75 |

| San Francisco | 75 |

| Singapore | 75 |

| Sydney | 75 |

| Tokyo | 75 |

| Toronto | 75 |

| Zurich | 75 |

The Phototourism dataset

This was the dataset used in the previous two versions of the challenge, which remains one of our three datasets. We publish training data with images, poses, depth maps, and co-visibility estimates. We also provide a validation set in the format expected by the benchmark, to allow challenge participants to tune their methods before submission. The test set will remain private.

In order to learn and evaluate models that can perform well under a wide range of situations, it is of paramount importance to collect information from multiple sensors obtained at different times, from different viewpoints, and with occlusions. A natural solution is thus to turn to photo-tourism data. In this dataset we rely on 26 photo-tourism image collections of popular landmarks originally collected by the Yahoo Flickr Creative Commons 100M (YFCC) dataset and Reconstructing the world in six days. They range from ~100s to ~1000s of images.

We provide the full data, with ground truth, for 15 scenes, which can be optionally used for training. We format 3 of them (Reichstag, Sacre Coeur and Saint Peter's Square) for validation, and reserve 12 for testing.

We provide examples to parse the training data on the benchmark repository: please refer to this notebook for details.

| Training scene | Num. images | Num. 3D points |

|---|---|---|

| Brandenburg Gate | 1363 | 100040 |

| Buckingham Palace | 1676 | 234052 |

| Colosseum Exterior | 2063 | 259807 |

| Grand Place Brussels | 1083 | 229788 |

| Hagia Sophia Interior | 888 | 235541 |

| Notre Dame Front Facade | 3765 | 488895 |

| Palace of Westminster | 983 | 115868 |

| Pantheon Exterior | 1401 | 166923 |

| Reichstag | 75 | 17823 |

| Sacre Coeur | 1179 | 140659 |

| Saint Peter's Square | 2504 | 232329 |

| Taj Mahal | 1312 | 94121 |

| Temple Nara Japan | 904 | 92131 |

| Trevi Fountain | 3191 | 580673 |

| Westminster Abbey | 1061 | 198222 |

| Total | 25.6k | 3.7M |

| Test scenes | Num. images | Num. 3D points |

|---|---|---|

| British Museum | 660 | 73569 |

| Florence Cathedral Side | 108 | 44143 |

| Lincoln Memorial Statue | 850 | 58661 |

| London Bridge | 629 | 72235 |

| Milan Cathedral | 124 | 33905 |

| Mount Rushmore | 138 | 45350 |

| Piazza San Marco | 249 | 95895 |

| Sagrada Familia | 401 | 120723 |

| Saint Paul's Cathedral | 615 | 98872 |

| Total | 4107 | 696k |