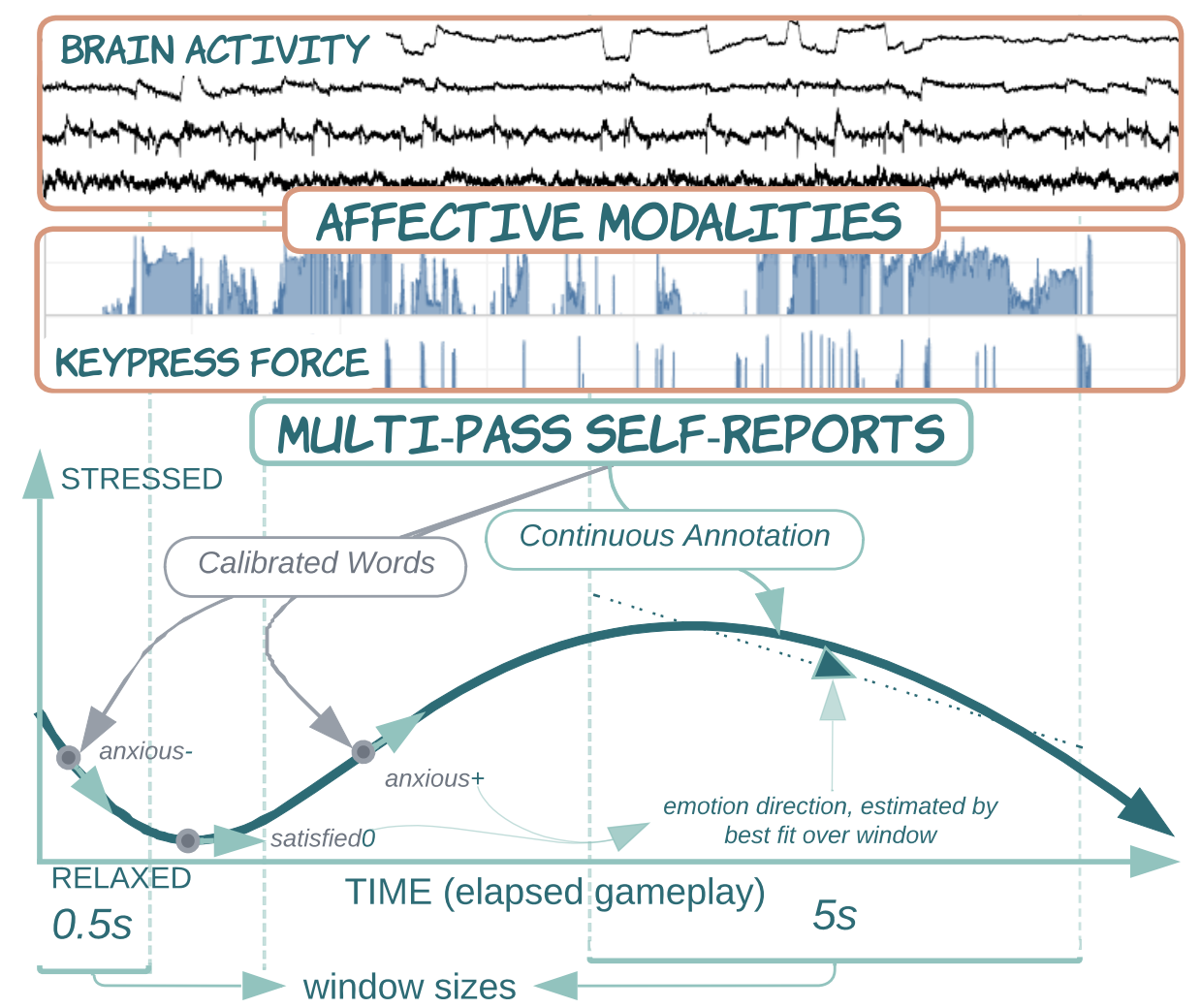

We present FEEL (Force, EEG and Emotion-Labelled), a dataset of brain activity and keypress force data labeled with self-reported emotion during tense videogame play (N=16). The dataset is open-sourced for community exploration and available for download here.

Our multi-resolution emotion self-reporting procedure allows the construction of emotion labels that differentiate between emotions by their transition (best-fit line), e.g., "anxious getting more stressed" vs. "anxious but resolving towards relaxed". As a benchmark demonstration, we trained subject-dependent hierarchical models of contextualized individual experience to compare emotion classification by modality (brain activity and keypress force), reporting F1-scores=[0.44, 0.82] (chance empirically determined at F1=0.22, sd=0.01).
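To illustrate the idea of labeling an emotion by its transition, the following is a minimal sketch, not the procedure used to build the dataset. It assumes a window of self-reported ratings on an arbitrary numeric scale and derives a trend from the slope of an ordinary least-squares best-fit line. The function name `transition_label`, the threshold `flat_tol`, and the example values are hypothetical.

```python
def transition_label(times, ratings, flat_tol=0.05):
    """Label a window of ratings by the slope of its least-squares best-fit line.

    `flat_tol` is an assumed threshold below which the trend counts as steady.
    """
    n = len(times)
    if n < 2 or n != len(ratings):
        raise ValueError("need at least two (time, rating) pairs of equal length")

    t_mean = sum(times) / n
    r_mean = sum(ratings) / n

    # Ordinary least-squares slope: covariance(t, r) / variance(t)
    s_tt = sum((t - t_mean) ** 2 for t in times)
    if s_tt == 0:
        raise ValueError("time stamps must not all be identical")
    s_tr = sum((t - t_mean) * (r - r_mean) for t, r in zip(times, ratings))
    slope = s_tr / s_tt

    if slope > flat_tol:
        trend = "rising"
    elif slope < -flat_tol:
        trend = "falling"
    else:
        trend = "steady"
    return trend, slope


# Hypothetical stress ratings sampled once per second
times = [0.0, 1.0, 2.0, 3.0, 4.0]
stress = [0.2, 0.35, 0.5, 0.6, 0.8]
trend, slope = transition_label(times, stress)
```

Under these assumptions, a rising stress slope would correspond to a label such as "anxious getting more stressed", and a falling one to "anxious but resolving towards relaxed".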