I am an adjunct professor at UBC Computer Science and a full-time professor at Oxford. My goal as a researcher is to develop new ideas, algorithms and mathematical models to extend the frontiers of science and technology so as to improve the quality of life of humans and their environment. I am also driven by a search for knowledge and a desire to understand how brains work and, more generally, what intelligence is.

For more recent information and projects, please visit my Oxford website.

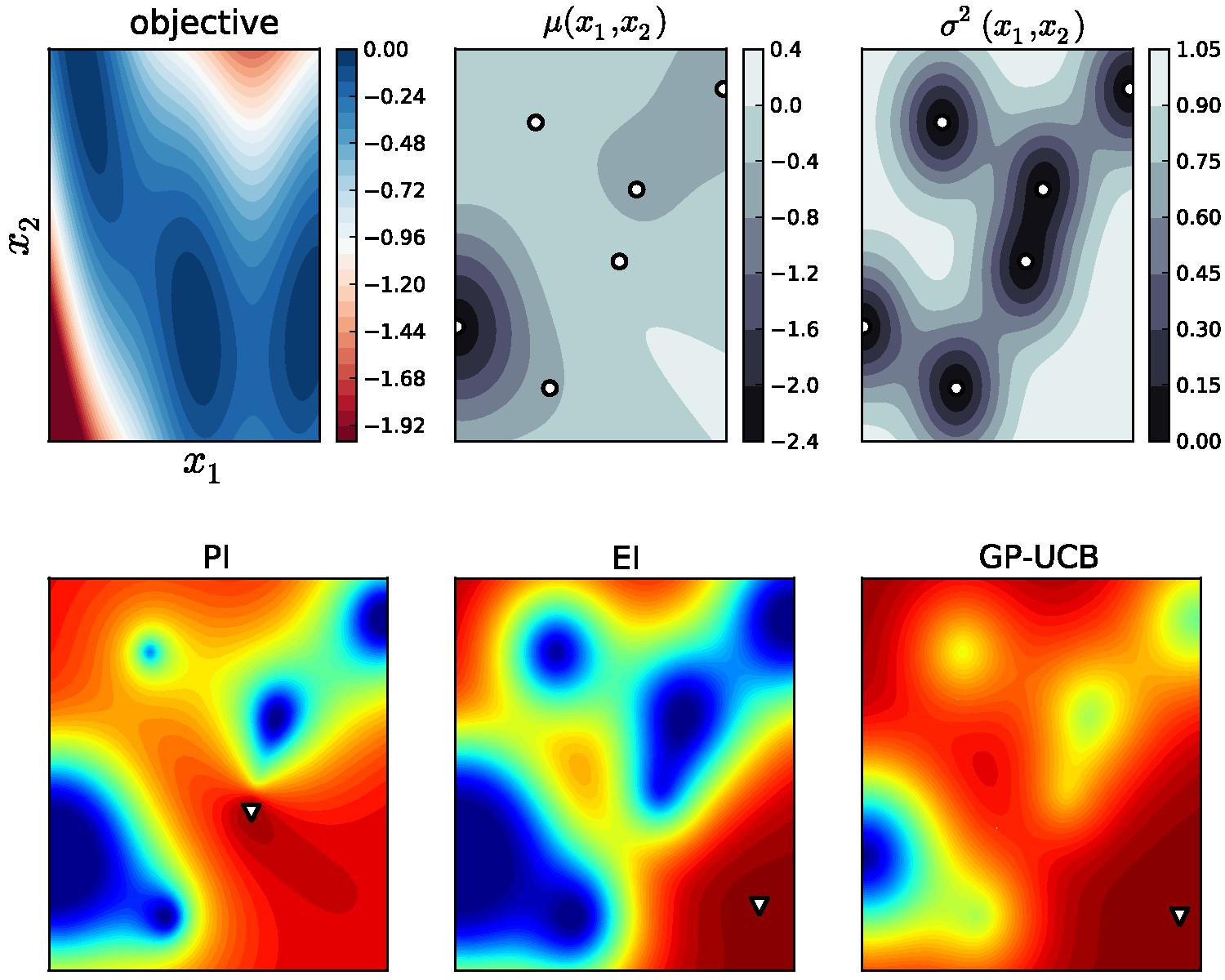

Our paper on Bayesian Optimization in high dimensions received an award at IJCAI 2013.

I was the General Chair for UAI 2013. Thanks to all the many people who helped make UAI 2013 a fantastic conference.

I taught an unsupervised feature learning course at the Institute of Applied Math at UCLA in 2012 (slides). The first video provides an introduction and motivation, presents basic statistical arguments, discusses the problem of feature learning and makes important connections between satisfiability problems (SAT) and Boltzmann machines, talks about the eco-statistical hypothesis in learning, and how to use nature, Hopfield nets and quantum computers to do learning. The second video focuses on learning principles: maximum likelihood, pseudo-likelihood, contrastive learning, max-margin learning, ratio matching and score matching. The third video focuses on attentional architectures for deep learning in video.

My undergraduate machine learning course is on YouTube.

Our big data spin-off Zite was acquired by CNN. It is now the engine powering CNN Trends. Zite is a good example of how the machine learning ideas developed with my students Eric Brochu and Mike Klaas impact millions of people.

In January 2013, I received the Charles A. McDowell Award for Excellence in Research. I would like to thank my students and collaborators, Kevin Leyton-Brown and Michiel van de Panne, and UBC for this. I am fortunate to work in a great department.

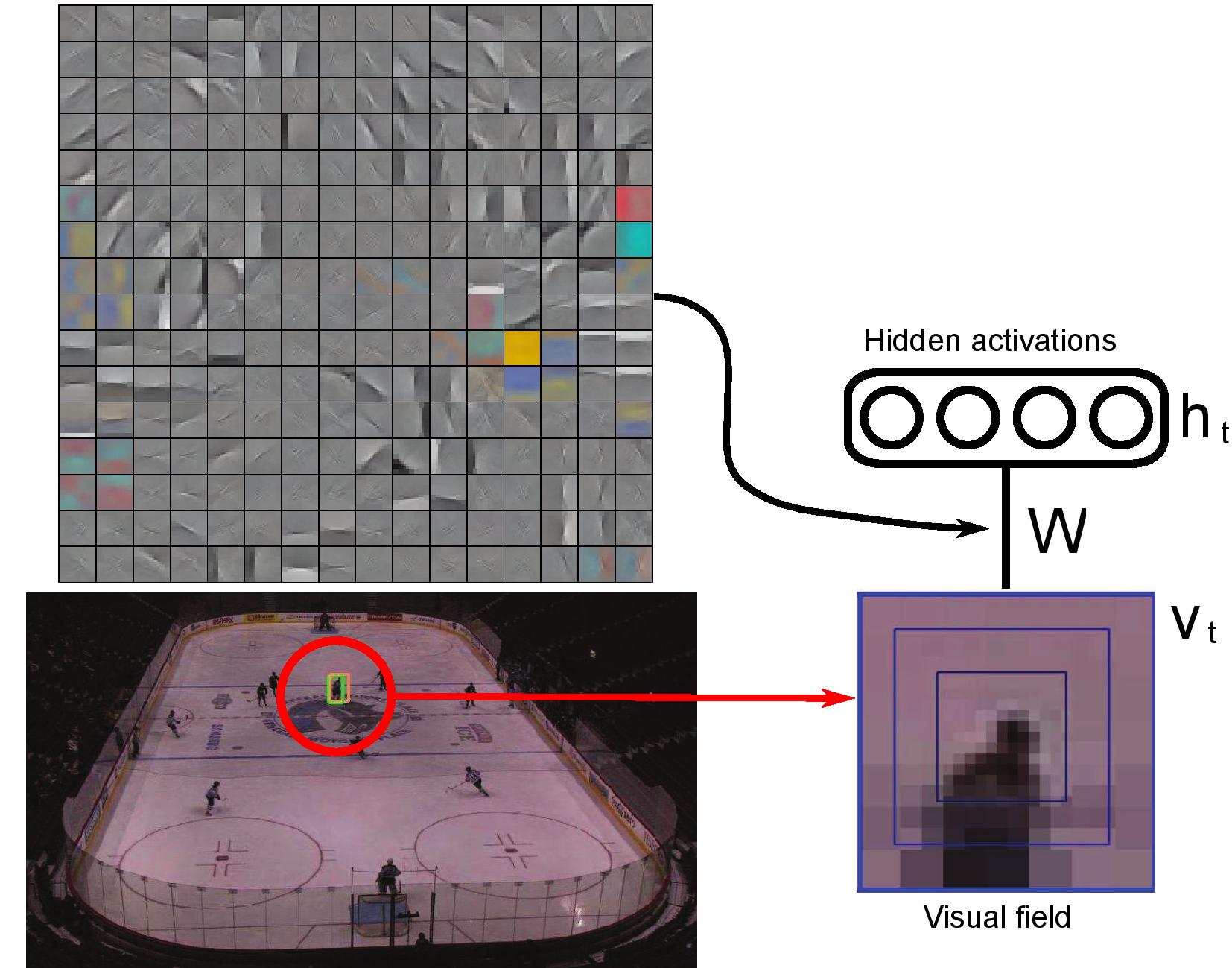

Our Neural Computation paper Learning Where to Attend with Deep Architectures for Image Tracking shows that attentional mechanisms, using Bayesian optimization and particle filters, allow us to deploy deep learning techniques to track and recognize objects in HD video.

A Globe and Mail article, Reasons for optimism on the VC front, showcases Ali, Mike and me in front of one of our mathy whiteboards downtown.

I believe Bayesian Optimization will result in huge leaps in automation. It will impact the design, selection and configuration of models, experiments, algorithms, hardware, compilers, and much more. My group has shown that it can be used to personalize user interfaces, learn preferences, learn controllers for robots and entertainment games, adapt particle filters and MCMC algorithms, configure mixed-integer linear programming solvers, and configure classifiers such as random forests.
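For readers new to the idea, here is a minimal sketch of a Bayesian optimization loop. It assumes a one-dimensional search space, a zero-mean Gaussian process surrogate with a squared-exponential kernel, and an upper confidence bound acquisition rule. The toy objective, the length scale, and every function name are illustrative assumptions, not code from any of the projects above.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between 1-D point sets a and b."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean, unit-variance GP."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    solved = np.linalg.solve(k_tt, k_tq)        # K^{-1} k(x_train, x_query)
    mean = solved.T @ y_train
    var = 1.0 - np.sum(k_tq * solved, axis=0)   # prior variance is 1
    return mean, np.sqrt(np.clip(var, 0.0, None))

def bayes_opt(objective, n_iter=15, kappa=2.0, seed=0):
    """Maximize `objective` on [0, 1] with a GP upper-confidence-bound loop."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)           # candidate points
    x = rng.uniform(0.0, 1.0, size=3)           # small random initial design
    y = np.array([objective(v) for v in x])
    for _ in range(n_iter):
        mean, std = gp_posterior(x, y, grid)
        # Acquisition: prefer points that look good or are still uncertain.
        x_next = grid[np.argmax(mean + kappa * std)]
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))
    best = np.argmax(y)
    return x[best], y[best]

def toy_objective(v):
    """Hypothetical stand-in for an expensive black-box function."""
    return -(v - 0.3) ** 2

if __name__ == "__main__":
    x_best, y_best = bayes_opt(toy_objective)
    print(f"best x = {x_best:.3f}, best value = {y_best:.5f}")
```

In practice the objective would be something costly to evaluate, such as a solver run time or a classifier's validation score, and the surrogate would be fitted over the corresponding configuration space rather than a fixed grid.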

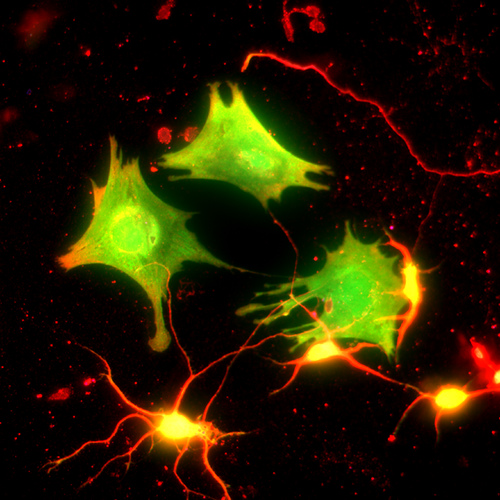

I am a Fellow of CIFAR's Neural Computation and Adaptive Perception program. I am interested in neural architectures and learning from web-scale datasets. My conjecture is that with a predictive theory of intelligence, the right models and big data, we will discover very simple (yet extremely powerful) algorithms for perception, motor control and probabilistic reasoning.

Ben Marlin, Kevin Swersky and I have been working with a few other colleagues on formalizing the field of deep learning from a mathematical statistics perspective. We have studied the statistical efficiency of estimators and created a way of mapping probabilistic models to autoencoders with sophisticated regularizers. Ben created a great demo showing the differences between ratio matching, pseudo-likelihood, stochastic maximum likelihood and contrastive divergence.

MITACS kindly offered me the 2010 MITACS Young Researcher Award. I thank all my students and academic-industry collaborators for it. My talk was on big data.

My Monte Carlo lectures at videolectures.net. NIPS tutorial slides on SMC methods for Bayesian inference, eigen-function computation, rare-event simulation, probabilistic graphical models, approximate Bayesian computation and self-avoiding random walks.

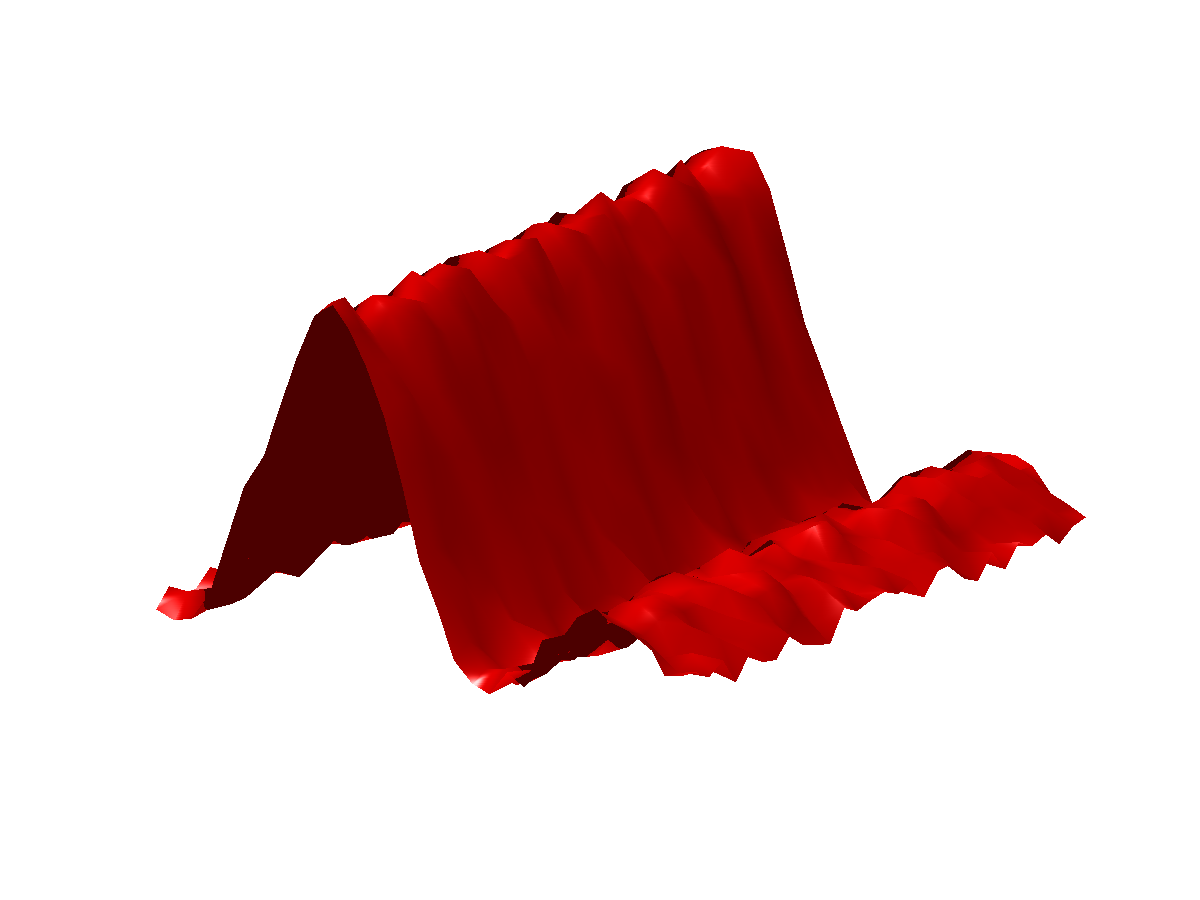

Bayesian Interactive Optimization for procedural animation. This work received the 2007 First Place at the ACM SIGGRAPH Student Research Competition. It is a new approach to harnessing humans to optimize unknown objective functions.

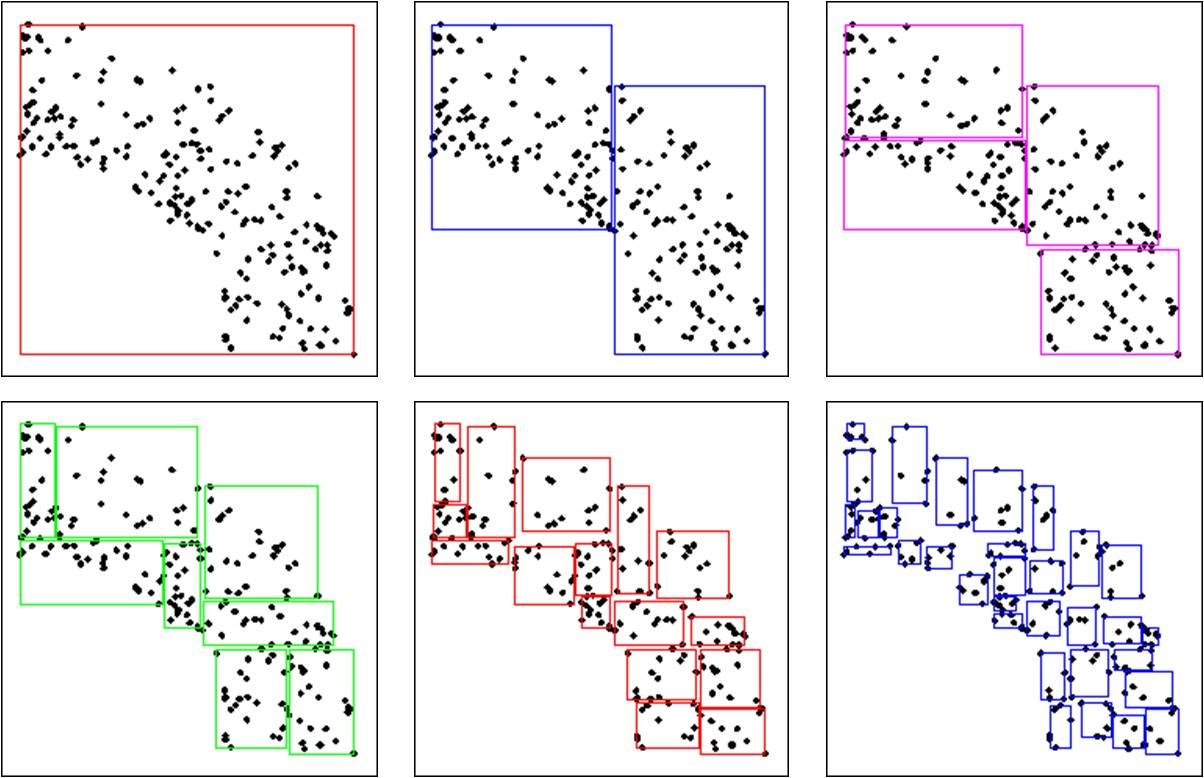

I am interested in fast N-body computational techniques such as the Fast Gauss Transform, fast multipole methods, kd-trees and dual trees. I have used these to design novel particle filters and smoothers, as well as to speed up kernel methods in machine learning. Our software for n-body learning is freely available. I co-organized a workshop with Dan Huttenlocher on this topic: NIPS fast N-body learning workshop.
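To make the N-body cost concrete, here is a minimal sketch of the Gaussian kernel sum that these methods accelerate. It assumes one-dimensional data and shows the direct sum alongside a crude truncated sum that skips far-away sources by sorting them. The truncation is only a stand-in for the pruning idea behind tree-based methods; it is not the Fast Gauss Transform or a dual-tree algorithm, and the function names are illustrative.

```python
import numpy as np

def direct_gauss_sum(queries, sources, weights, h):
    """Exact kernel sum: O(len(queries) * len(sources)) work."""
    diff = queries[:, None] - sources[None, :]
    return np.exp(-0.5 * (diff / h) ** 2) @ weights

def truncated_gauss_sum(queries, sources, weights, h, cutoff=6.0):
    """Approximate sum that ignores sources farther than cutoff * h."""
    order = np.argsort(sources)
    s, w = sources[order], weights[order]
    # Binary search finds the window of nearby sources for each query.
    lo = np.searchsorted(s, queries - cutoff * h, side="left")
    hi = np.searchsorted(s, queries + cutoff * h, side="right")
    out = np.empty(len(queries))
    for i, q in enumerate(queries):
        near = slice(lo[i], hi[i])
        out[i] = np.sum(w[near] * np.exp(-0.5 * ((q - s[near]) / h) ** 2))
    return out

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sources = rng.uniform(0.0, 10.0, size=500)
    weights = np.ones(500)
    queries = np.linspace(0.0, 10.0, 50)
    exact = direct_gauss_sum(queries, sources, weights, h=0.1)
    approx = truncated_gauss_sum(queries, sources, weights, h=0.1)
    print("max abs error:", np.max(np.abs(exact - approx)))
```

Each dropped term is at most its weight times exp(-cutoff² / 2), so the error is controlled by the cutoff. The fast methods named above go further by grouping sources and bounding or expanding their joint contribution instead of visiting them one at a time.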