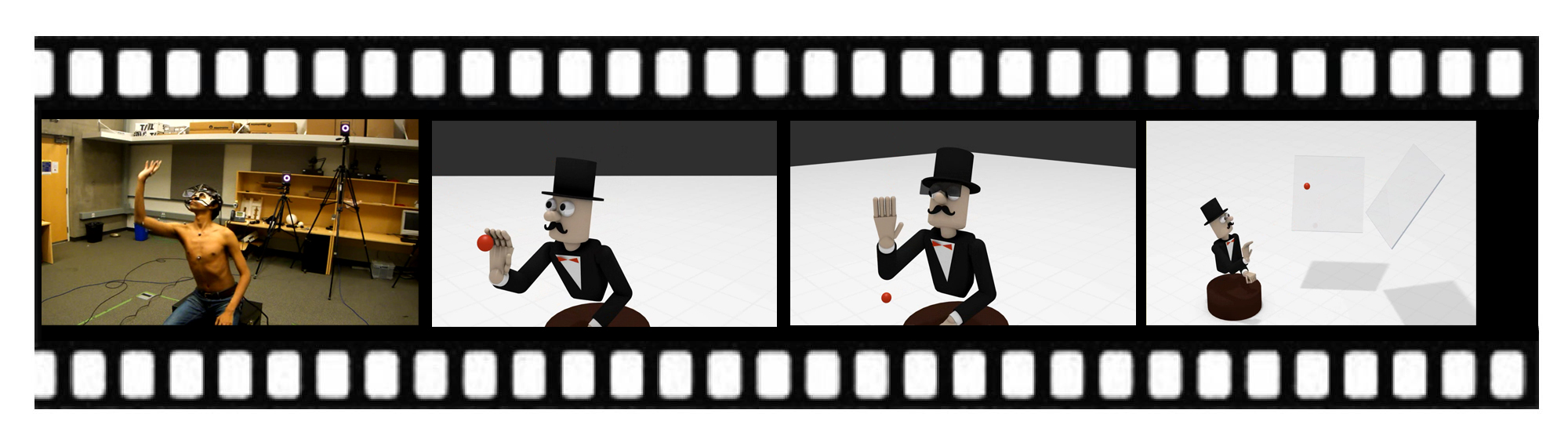

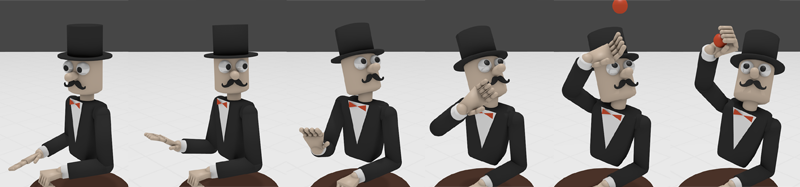

Catching a thrown ball. The movement depends on visual estimates of the ball’s motion, which trigger shared motor programs for eye, head, arm, and torso movement. The gaze sets the goal for the hand. Initially the movements are reactive, but as the visual estimates improve, predictive movements are generated toward the final catching position.