Learning Locomotion Skills for Cassie: Iterative Design and Sim-to-Real

CORL 2019 -- Conference on Robot Learning

(1) University of British Columbia

(2) Oregon State University

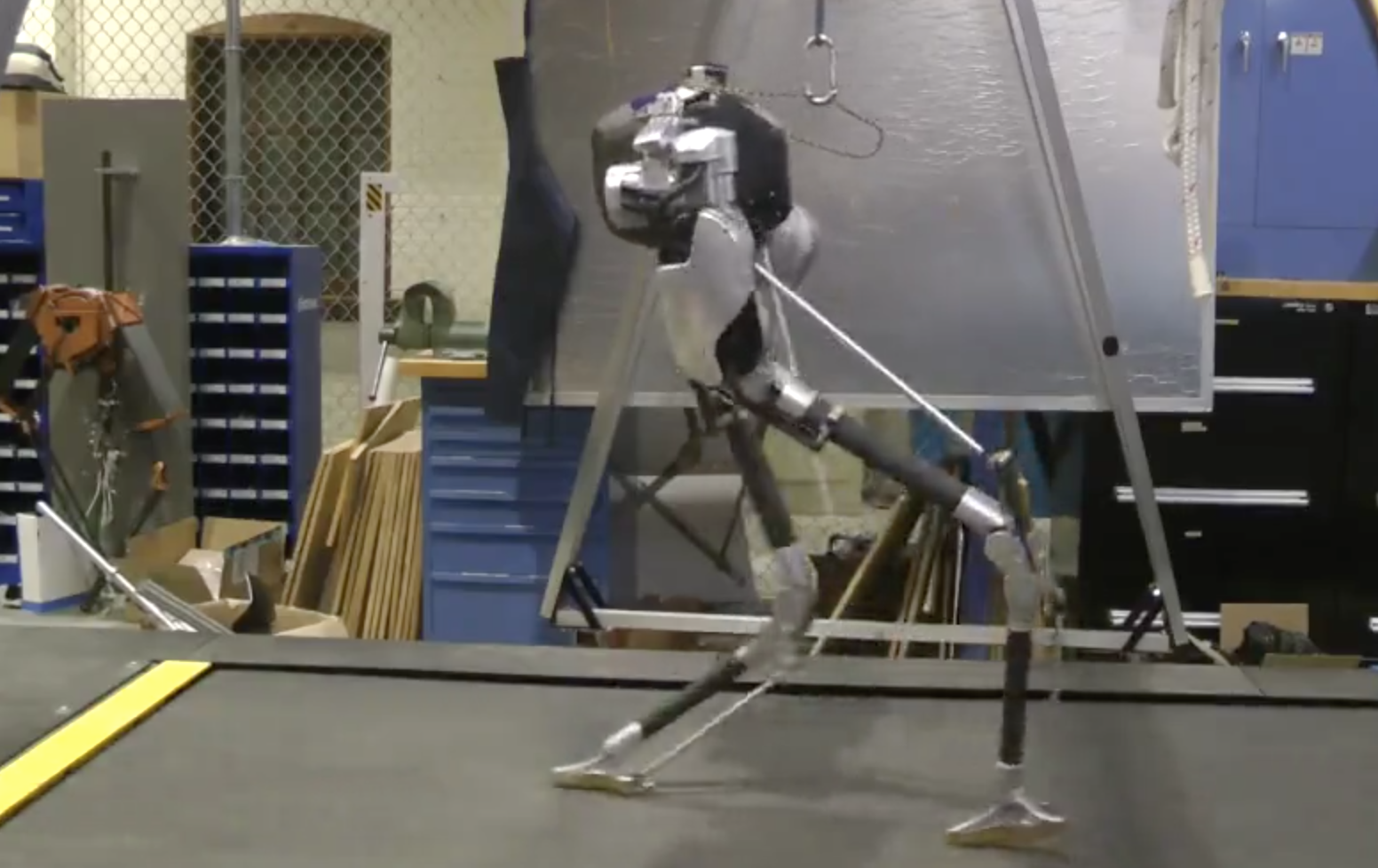

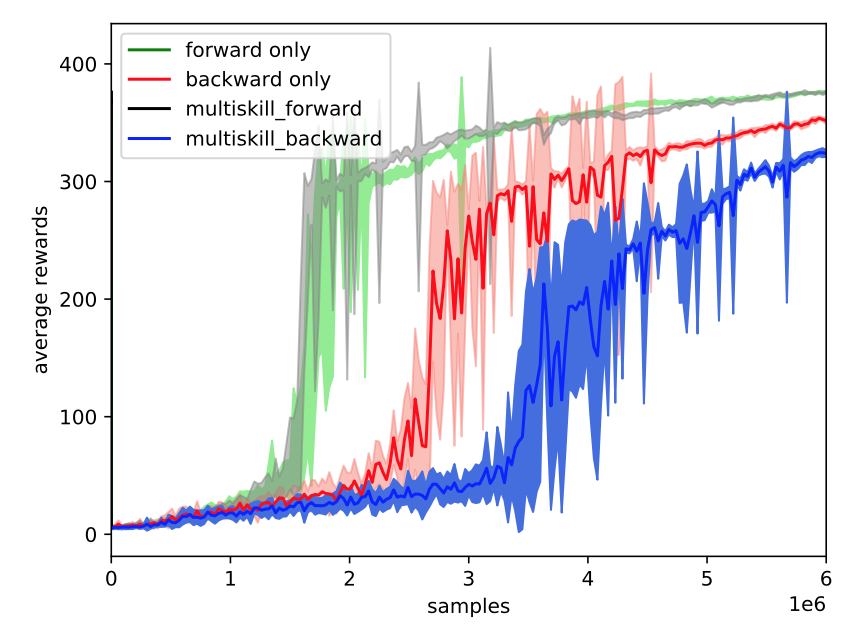

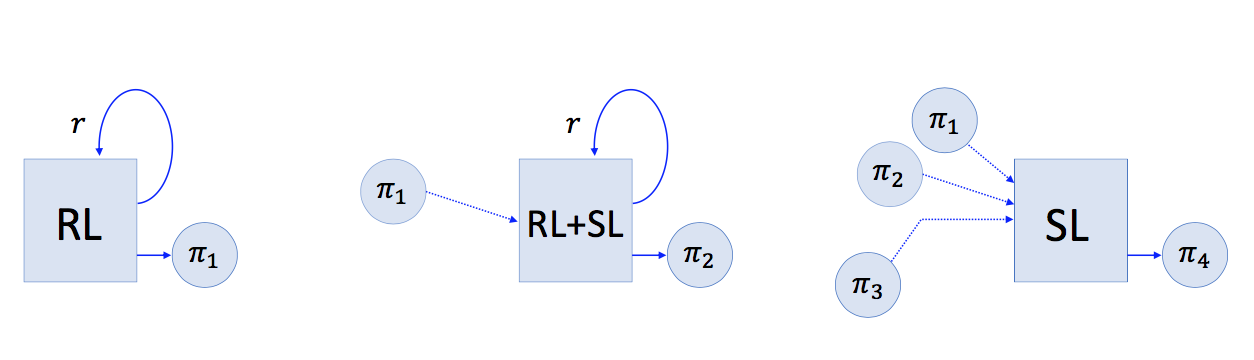

Deep reinforcement learning (DRL) is a promising approach for developing legged locomotion skills. However, current work commonly describes DRL as being a one-shot process, where the state, action and reward are assumed to be well defined and are directly used by an RL algorithm to obtain policies. In this paper, we describe and document an iterative design approach, which reflects the multiple design iterations of the reward that are often (if not always) needed in practice. Throughout the process, transfer learning is achieved via Deterministic Action Stochastic State (DASS) tuples, representing the deterministic policy actions associated with states visited by the stochastic policy. We demonstrate the transfer of policies learned in simulation to the physical robot without dynamics randomization. We also identify several key components that are critical for sim-to-real transfer in our setting.
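To make the idea of a DASS tuple concrete, here is a minimal sketch. It is not the authors' implementation and rests on several loudly stated assumptions: a linear "expert" policy whose mean action is `K_expert @ s`, Gaussian exploration noise of standard deviation `SIGMA`, toy linear dynamics (`A`, `B`) standing in for the simulator, and a linear student policy fitted by least squares in place of supervised training of a neural network. All names are hypothetical. The key point is that the *executed* action is stochastic, so the visited states are diverse, while the *stored label* is the deterministic action for that state.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, ACTION_DIM = 4, 2

# Hypothetical expert policy: deterministic mean action mu(s) = K_expert @ s.
K_expert = rng.normal(size=(ACTION_DIM, STATE_DIM))
SIGMA = 0.3  # assumed std of the Gaussian exploration noise

# Hypothetical toy linear dynamics standing in for the simulator.
A = 0.9 * np.eye(STATE_DIM)
B = rng.normal(scale=0.05, size=(STATE_DIM, ACTION_DIM))


def collect_dass(num_episodes, horizon):
    """Roll out the stochastic policy and record (state, deterministic action) pairs."""
    states, labels = [], []
    for _ in range(num_episodes):
        s = rng.normal(size=STATE_DIM)  # random initial state per episode
        for _ in range(horizon):
            a_det = K_expert @ s  # deterministic action: the stored label
            states.append(s.copy())
            labels.append(a_det)
            # The stochastic action is what actually drives the state forward.
            a_exec = a_det + SIGMA * rng.normal(size=ACTION_DIM)
            s = A @ s + B @ a_exec
    return np.array(states), np.array(labels)


def distill(states, labels):
    """Fit a linear student policy by least squares: labels ~= states @ W."""
    W, *_ = np.linalg.lstsq(states, labels, rcond=None)
    return W.T  # shape (ACTION_DIM, STATE_DIM), same layout as K_expert


S, U = collect_dass(num_episodes=40, horizon=25)
K_student = distill(S, U)
print("max |K_student - K_expert| =", np.max(np.abs(K_student - K_expert)))
```

Because the labels are noise-free deterministic actions, the student in this linear toy recovers the expert essentially exactly; training on the noisy executed actions instead would make the regression target noisy. In the real setting the student would be a neural network trained by supervised regression on the collected tuples.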

arXiv publication: https://arxiv.org/abs/1903.09537

@inproceedings{2019-CORL-cassie,
  title={Learning Locomotion Skills for Cassie: Iterative Design and Sim-to-Real},
  author={Zhaoming Xie and Patrick Clary and Jeremy Dao and Pedro Morais and Jonathan Hurst and Michiel van de Panne},
  booktitle={Proc. Conference on Robot Learning (CORL 2019)},
  year={2019}
}