Glen Berseth(*)

Cheng Xie(*)

Paul Cernek

Michiel van de Panne

University of British Columbia

International Conference on Learning Representations (ICLR 2018)

Note: (*) denotes co-first authorship

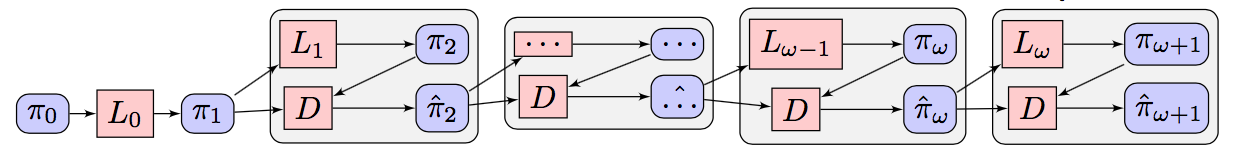

Deep reinforcement learning has demonstrated increasing capabilities for continuous control problems, including agents that can move with skill and agility through their environment. An open problem in this setting is that of developing good strategies for integrating or merging policies for multiple skills, where each individual policy is a specialist in a specific skill and its associated state distribution. We extend policy distillation methods to the continuous action setting and leverage this technique to combine expert policies, as evaluated in the domain of simulated bipedal locomotion across different classes of terrain. We also introduce an input injection method for augmenting an existing policy network to exploit new input features. Lastly, our method uses transfer learning to assist in the efficient acquisition of new skills. The combination of these methods allows a policy to be incrementally augmented with new skills. We compare our progressive learning and integration via distillation (PLAID) method against three alternative baselines.
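To make the idea of distilling several continuous-action experts into one policy concrete, here is a minimal toy sketch. It is not the paper's implementation: the 1-D state, the binary terrain flag, the two linear "experts", the linear student, and the squared-error loss on expert actions are all assumptions chosen for illustration. Each expert is queried only on its own terrain, and the student is fit by gradient descent to reproduce both.

```python
# Toy distillation sketch (all names and settings here are hypothetical).
# State: scalar s in [-1, 1]; terrain flag t (0 = flat, 1 = stairs); scalar action.

def expert_flat(s):
    # Assumed expert for flat terrain.
    return 0.5 * s

def expert_stairs(s):
    # Assumed expert for stairs: same gain, plus a constant offset.
    return 0.5 * s + 1.0

def student(w, s, t):
    # Single linear student policy that also sees the terrain flag.
    return w[0] + w[1] * s + w[2] * t

def build_dataset(n=21):
    # Label each state with the action of the expert responsible for that terrain.
    states = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    data = [(s, 0.0, expert_flat(s)) for s in states]
    data += [(s, 1.0, expert_stairs(s)) for s in states]
    return data

def distill(data, lr=0.2, steps=1000):
    # Full-batch gradient descent on the mean squared error between
    # student actions and expert actions.
    w = [0.0, 0.0, 0.0]
    n = len(data)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for s, t, a_expert in data:
            err = student(w, s, t) - a_expert
            for j, x in enumerate((1.0, s, t)):
                grad[j] += 2.0 * err * x / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

data = build_dataset()
weights = distill(data)
max_err = max(abs(student(weights, s, t) - a) for s, t, a in data)
```

In this toy case the student can represent both experts exactly, so `max_err` shrinks toward zero. With neural-network policies and states gathered from simulation, the same regression structure would apply, but the fit would only be approximate.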

@inproceedings{2018-ICLR-distill,
  title = {Progressive Reinforcement Learning with Distillation for Multi-Skilled Motion Control},
  author = {Glen Berseth and Cheng Xie and Paul Cernek and Michiel van de Panne},
  booktitle = {Proc. International Conference on Learning Representations},
  year = {2018}
}