ACM Transactions on Graphics (Proc. ACM SIGGRAPH 2015)

Xue Bin Peng, Glen Berseth, Michiel van de Panne

University of British Columbia

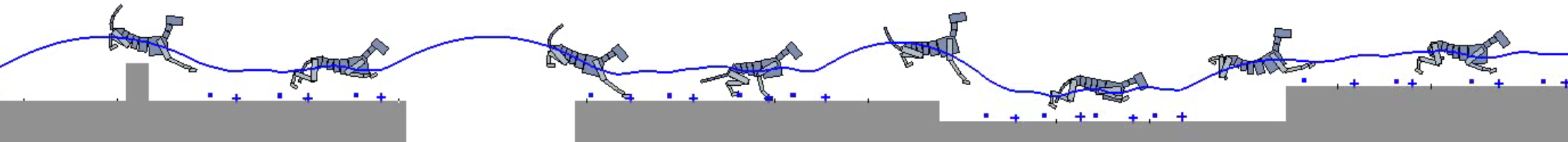

The locomotion skills developed for physics-based characters most often target flat terrain. However, much of their potential lies in the creation of dynamic, momentum-based motions across more complex terrains. In this paper, we learn controllers that allow simulated characters to traverse terrains with gaps, steps, and walls using highly dynamic gaits. This is achieved using reinforcement learning, with careful attention given to the action representation, non-parametric approximation of both the value function and the policy, epsilon-greedy exploration, and the learning of a good state distance metric. The methods enable a 21-link planar dog and a 7-link planar biped to navigate challenging sequences of terrain using bounding and running gaits. We evaluate the impact of the key features of our skill learning pipeline on the resulting performance.

@article{2015-TOG-terrainRL,

author = {Peng, Xue Bin and Berseth, Glen and van de Panne, Michiel},

title = {Dynamic Terrain Traversal Skills Using Reinforcement Learning},

journal = {ACM Trans. Graph.},

issue_date = {August 2015},

volume = {34},

number = {4},

month = jul,

year = {2015},

issn = {0730-0301},

pages = {80:1--80:11},

articleno = {80},

numpages = {11},

url = {http://doi.acm.org/10.1145/2766910},

doi = {10.1145/2766910},

acmid = {2766910},

publisher = {ACM}

}

GRAND NCE: Graphics, Animation, and New Media