ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2015

(one of three "best paper" award winners, out of 20 accepted papers)

Kai Ding, Libin Liu, Michiel van de Panne, KangKang Yin

University of British Columbia, Microsoft Research Asia, and National University of Singapore

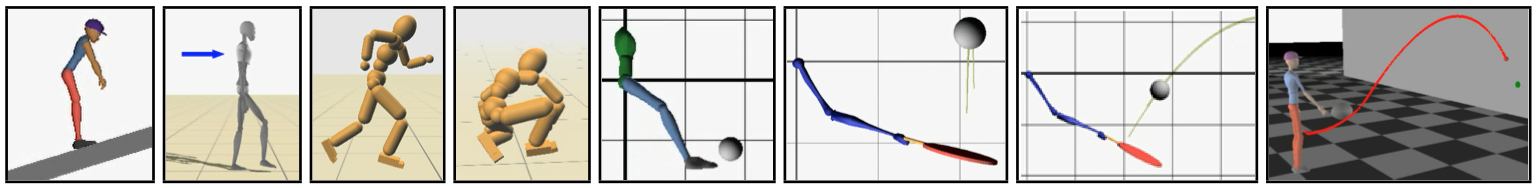

We introduce a method for learning low-dimensional linear feedback strategies for controlling physics-based animated characters around a given reference trajectory. The method yields learned low-dimensional state and action abstractions, which reduces the need to rely on manually designed abstractions such as the center-of-mass state or foot-placement actions. Once learned, the compact feedback structure allows simulated characters to respond to changes in the environment and in their goals. The approach is based on policy search in the space of reduced-order linear output feedback matrices. We show that these matrices can be used to replace manually designed state and action abstractions, or to reduce them further. The approach is general enough to support unconventional feedback loops, such as feedback based on ground reaction forces. Results are demonstrated for a mix of 2D and 3D systems, including tilting-platform balancing, walking, running, rolling, targeted kicks, and several types of ball-hitting tasks.
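To make the idea of a reduced-order linear output feedback policy concrete, here is a minimal sketch under several stated assumptions. The abstract does not give the parameterization or optimizer, so this sketch assumes the feedback matrix is factored as `U @ W`, where `W` (r x n) projects the state deviation onto an r-dimensional abstraction `z` and `U` (m x r) maps `z` back to an action correction. The linear system `A`, `B` is a hypothetical stand-in for a physics simulation of a character, and a simple (1+1) random search is swapped in as the policy-search method; the paper's own optimizer and cost may differ.

```python
import random

# Hypothetical stand-in for a character's deviation dynamics around a reference
# trajectory: x' = A x + B u (upper triangular, so eigenvalues are the diagonal;
# 1.05 and 1.02 are unstable open-loop modes).
A = [[1.05, 0.1, 0.0, 0.0],
     [0.0, 1.02, 0.0, 0.05],
     [0.0, 0.0, 0.95, 0.1],
     [0.0, 0.0, 0.0, 0.9]]
B = [[0.0, 0.0],
     [0.1, 0.0],
     [0.0, 0.0],
     [0.0, 0.1]]
N_STATE, N_ACTION, RANK = 4, 2, 1  # RANK = size of the learned abstraction z


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def unpack(theta):
    """Split the flat parameter vector into U (m x r) and W (r x n)."""
    u_len = N_ACTION * RANK
    U = [theta[i * RANK:(i + 1) * RANK] for i in range(N_ACTION)]
    W = [theta[u_len + j * N_STATE:u_len + (j + 1) * N_STATE] for j in range(RANK)]
    return U, W


def policy(theta, dx):
    """Reduced-order output feedback (assumed form): du = U (W dx)."""
    U, W = unpack(theta)
    z = matvec(W, dx)    # low-dimensional state abstraction
    return matvec(U, z)  # mapped back to the full action space


def rollout_cost(theta, steps=50):
    """Sum of squared state deviations over several perturbed starting states."""
    total = 0.0
    for x0 in ([0.1, 0, 0, 0], [0, 0.1, 0, 0], [0, 0, 0.1, 0], [0, 0, 0, 0.1]):
        x = list(x0)
        for _ in range(steps):
            u = policy(theta, x)
            x = [a + b for a, b in zip(matvec(A, x), matvec(B, u))]
            total += sum(v * v for v in x)
            if total > 1e6:  # diverged; cap to avoid numerical overflow
                return 1e6
    return total


def policy_search(iters=500, sigma=0.5, seed=0):
    """(1+1)-style random search over the entries of U and W."""
    rng = random.Random(seed)
    dim = N_ACTION * RANK + RANK * N_STATE
    best = [0.0] * dim  # start from "no feedback"
    best_cost = rollout_cost(best)
    for _ in range(iters):
        cand = [t + rng.gauss(0.0, sigma) for t in best]
        c = rollout_cost(cand)
        if c < best_cost:  # keep the candidate only if it lowers the cost
            best, best_cost = cand, c
    return best, best_cost
```

Calling `policy_search()` returns the best parameter vector found and its rollout cost. With `RANK = 1`, the controller observes the four-dimensional state only through a single learned scalar `z`, which illustrates how a reduced-order feedback matrix can act as a learned state abstraction in place of a hand-picked feature.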

@inproceedings{2015-SCA-reducedOrder,
  title={Learning Reduced-Order Feedback Policies for Motion Skills},
  author={Kai Ding and Libin Liu and Michiel van de Panne and KangKang Yin},
  booktitle={Proc. ACM SIGGRAPH / Eurographics Symposium on Computer Animation},
  year={2015}
}

GRAND NCE: Graphics, Animation, and New Media