
Overview of Research Projects

Laboratory for Computational Intelligence

Computer Science Department

University of British Columbia

Following are summaries of a few research projects prior to 2015. See publications for papers on many other projects.


FLANN: Fast Library for Approximate Nearest Neighbors

(Marius Muja and David Lowe)

The most time-consuming part of performing object recognition or machine learning on large datasets is often the need to find the closest matches for high-dimensional vectors among millions of similar vectors. There are usually no known exact algorithms that solve this problem faster than linear search, but linear search is much too slow for many applications. Fortunately, it is possible to use approximate algorithms that sometimes return a slightly less than optimal answer but can be thousands of times faster. We have implemented the best previously known algorithms for approximate nearest neighbor search and developed our own improved methods. The optimal choice of algorithm depends on the problem, so we have also developed an automated algorithm configuration system that determines the best algorithm and parameters for particular data and accuracy requirements. This software, FLANN, has been released as open source. It requires only a few library calls in Python, MATLAB, or C++ to select and apply the best algorithm for any dataset.
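To make the trade-off between exact linear search and approximate search concrete, here is a minimal pure-Python sketch. It is not FLANN's implementation or its API: the function names (`linear_search`, `build_kdtree`, `approx_search`), the `leaf_size` and `max_checks` parameters, and the choice of a single kd-tree with a bounded best-first search are all assumptions made for illustration.

```python
import heapq


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def linear_search(points, query):
    """Exact nearest neighbor: compare the query against every point."""
    return min(range(len(points)), key=lambda i: sq_dist(points[i], query))


def build_kdtree(points, indices=None, leaf_size=8):
    """Build a simple kd-tree (hypothetical structure, nested dicts)."""
    if indices is None:
        indices = list(range(len(points)))
    if len(indices) <= leaf_size:
        return {"leaf": indices}

    def spread(d):
        vals = [points[i][d] for i in indices]
        return max(vals) - min(vals)

    # Split on the dimension with the largest spread, at the median point.
    dim = max(range(len(points[0])), key=spread)
    indices.sort(key=lambda i: points[i][dim])
    mid = len(indices) // 2
    return {
        "dim": dim,
        "split": points[indices[mid]][dim],
        "left": build_kdtree(points, indices[:mid], leaf_size),
        "right": build_kdtree(points, indices[mid:], leaf_size),
    }


def approx_search(tree, points, query, max_checks=32):
    """Best-first kd-tree search that stops after about max_checks points."""
    best_i, best_d = -1, float("inf")
    checks = 0
    counter = 0  # unique tie-breaker so the heap never compares dicts
    heap = [(0.0, counter, tree)]
    while heap and checks < max_checks:
        bound, _, node = heapq.heappop(heap)
        if bound >= best_d:
            break  # no remaining branch can hold a closer point
        while "leaf" not in node:
            diff = query[node["dim"]] - node["split"]
            if diff < 0:
                near, far = node["left"], node["right"]
            else:
                near, far = node["right"], node["left"]
            counter += 1
            # Lower bound on the distance to anything in the far branch.
            heapq.heappush(heap, (max(bound, diff * diff), counter, far))
            node = near
        for i in node["leaf"]:
            d = sq_dist(points[i], query)
            checks += 1
            if d < best_d:
                best_i, best_d = i, d
    return best_i
```

With a very large `max_checks` this search visits every branch that could contain a closer point, so it returns a neighbor at the exact nearest distance. Lowering `max_checks` examines fewer points and may return a slightly worse neighbor, which is the accuracy-for-speed trade described above.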

FLANN GitHub page with source code


Autostitch: Automated panorama creation

(Matthew Brown and David Lowe)

An ordinary digital camera can be used to create wide-angle, high-resolution images by stitching many images together into seamless panoramas. However, previous methods for panorama creation either required complex user interfaces or required that images be taken in a specific linear sequence with fixed focal lengths and exposure settings. We have used local invariant features to allow panorama matching to be fully automated without assuming any ordering of the images or any restriction on focal lengths, orientations, or exposures. The matching time is linear in the number of images, so all panoramas can be automatically detected in large sets of images. We have also developed approaches for seamlessly blending images even when the illumination changes or there are small misregistrations.
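One way to picture how separate panoramas can be detected in an unordered image set is to treat verified image-to-image matches as edges in a graph and take each connected component as a candidate panorama. The sketch below assumes the pairwise matches have already been computed. The function name `find_panoramas` and its `matches` input are hypothetical, and the summary above does not say that the authors' system works this way.

```python
def find_panoramas(num_images, matches):
    """Group images into candidate panoramas with union-find.

    num_images: number of images, identified as 0 .. num_images - 1
    matches: iterable of (a, b) pairs of images assumed to overlap
    Returns a list of groups (lists of image ids) with at least two images.
    """
    parent = list(range(num_images))

    def find(i):
        # Follow parent links to the root, shortening the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b in matches:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    groups = {}
    for i in range(num_images):
        groups.setdefault(find(i), []).append(i)
    # An image that matches nothing is not part of any panorama.
    return [group for group in groups.values() if len(group) > 1]
```

For example, with six images and matches `[(0, 2), (2, 4), (1, 5)]`, images 0, 2, and 4 form one group, images 1 and 5 form another, and image 3 is left out.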

Autostitch research site


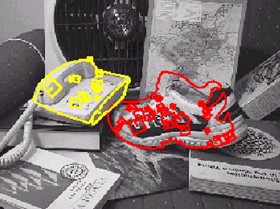

SIFT: Matching with local invariant features

Local invariant features allow us to efficiently match small portions of cluttered images under arbitrary rotations, scalings, changes in brightness and contrast, and other transformations. The idea is to break the image into many small overlapping pieces of varying size, each of which is described in a manner invariant to the possible transformations. Then each piece can be individually matched, and the matching pieces checked for consistency. It may sound like a lot of computation, but it can all be done in a fraction of a second on an ordinary computer. This particular approach is called SIFT (for Scale-Invariant Feature Transform) because it transforms each local piece of an image into coordinates that are independent of image scale and orientation.
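The individual matching step can be sketched as a nearest-neighbor search over descriptor vectors. The sketch below assumes each image piece has already been reduced to a numeric descriptor. It accepts a match only when the closest descriptor is clearly closer than the second closest, a distance-ratio criterion commonly used with SIFT but not stated in this summary. The function name `match_descriptors` and the default `ratio=0.8` are assumptions, and the later consistency check is not shown.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to its nearest neighbor in desc_b.

    A match (i, j) is kept only if the nearest distance is less than
    `ratio` times the second-nearest distance, which discards ambiguous
    matches. Returns a list of (index_in_a, index_in_b) pairs.
    """
    matches = []
    for i, d in enumerate(desc_a):
        # Distances from this descriptor to every candidate, nearest first.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((i, dists[0][1]))
    return matches
```

A descriptor with two nearly equidistant candidates is rejected as ambiguous, while a descriptor with one clearly closest candidate is kept.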

More information and demo


Augmented reality in natural scenes

(Iryna Gordon and David Lowe)

Augmented reality means that we can take video images of the natural world and superimpose synthetic objects with accurate 3D registration so that they appear to be part of the scene. Applications include any situation in which it is useful to add information to a scene, such as in the film industry, tourism, heads-up automobile displays, or surgical visualization. We have developed an approach that can operate without markers in natural scenes by matching local invariant features. It begins with a few images of each scene taken from different viewpoints, which are used to automatically construct accurate 3D models. The models can then be reliably recognized in subsequent images and precisely localized and tracked.
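Once the camera pose relative to the scene model is known, superimposing a synthetic object comes down to projecting its 3D points into each video frame. The sketch below uses a basic pinhole camera model with no lens distortion. The function name `project_point` and its parameters (`rotation`, `translation`, `focal`, `center`) are assumptions for illustration and are not taken from the authors' system.

```python
def project_point(point, rotation, translation, focal, center):
    """Project a 3D scene point into pixel coordinates (pinhole model).

    point: (x, y, z) in scene coordinates
    rotation: 3x3 rotation matrix as three rows (scene to camera)
    translation: (tx, ty, tz) camera translation
    focal: focal length in pixels
    center: (cx, cy) principal point in pixels
    Returns (u, v), or None if the point is not in front of the camera.
    """
    # Camera coordinates: X_cam = R * X_scene + t
    xc, yc, zc = (
        sum(r * p for r, p in zip(row, point)) + t
        for row, t in zip(rotation, translation)
    )
    if zc <= 0:
        return None  # behind the camera, so it cannot be drawn
    u = focal * xc / zc + center[0]
    v = focal * yc / zc + center[1]
    return (u, v)
```

With an identity rotation, zero translation, `focal=500`, and `center=(320, 240)`, the point `(1, 0, 2)` lands at pixel `(570.0, 240.0)`. Accurate registration depends on how well the rotation and translation are estimated for each frame.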

More information.
