538B Distributed Systems: Assignment 1

Due: Monday February 4th, 11:59PM

Winter 2018

In this assignment you will create a simple replicated key-value store with serializable key-value consistency semantics.

Background

Your key-value store must follow the theory of replicated state machines (RSM) from the tutorial/overview paper we read in the course: Implementing fault-tolerant services using the state machine approach: a tutorial.

In particular, you will implement an RSM that uses Lamport logical clocks to determine ordering and stability (carefully read Section 3.2.1 in the paper). Your implementation should be able to tolerate fail-stop client and replica failures. Your implementation should not handle addition of clients/replicas to the system (all of the clients/replicas are known ahead of time and can only drop out with fail stop failures). You should include 'null' requests (or an equivalent technique) to make sure that your system does not block a client indefinitely if it is the last one to invoke an operation.

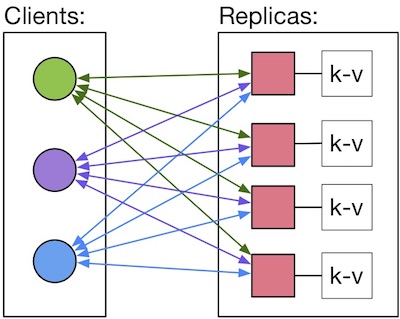

Connectivity between clients and replicas in your key-value store.

Assumptions you can/cannot make

Your implementation can assume the following:

- No network failures.

- The network connection/channel between a client and a replica is FIFO.

- Your can interpret RPC failures as node failures (i.e., this is your "failure detector").

- Use this to detect replica failures (since only clients invoke RPCs in this system)

- Client failures are detected using an explicit Disconnect RPC (see below)

- You can use a best-effort function to implement reliable broadcast (to satisfy the Agreement requirement explained in Section 3.1 of the paper). You can implement this function by simply iterating over the set of nodes to broadcast to and send each node a message using Go's RPC. You can interpret an RPC failure inside this function as a node failure (and not a network failure).

- The clients will invoke the Disconnect RPC prior to quitting/failing (fail-stop clients)

Your implementation cannot assume the following:

- The amount of time that it takes for an RPC to complete -- you should trust the RPC system to return an error when it cannot reach the replica. But, you should not assume that this will happen after some specific amount of time (i.e., your code should be independent of RPC system implementation assumptions/details).

Design details

An initial draft of the key-value store and a client that uses it are posted here:

You are not allowed to change the RPC API between the replicas and clients. You are also not allowed to change the replica's command line arguments.

You will have to re-formulate/refactor the current client code to create a higher-level application-level API (think library) for the client to use. In this API the client library must return a Lamport timestamp to the client application (with the expectation that the client will relay this timestamp to other clients, if it happens to communicate with them). The code above takes a list of replicas on the command line and uses the first one in a get operations. Your task is to implement the proper replication semantics, described above, for the RPC commands in the code.

RPC commands overview: Below, the clientid argument denotes the id of client (uint8) making the invocation, while the clock argument (uint64) denotes this client's most recent lamport clock value.

- State access RPCs:

- Get(key, clientid, clock): execute a get of the key against the internal key-value store (that maps string keys to string values). Returns the string value for key.

- Put(key,val,clientid, clock): execute a put of (key,val) against the internal key-value store. Return value uninterpreted (can be anything).

- Notification RPCs:

- ClockUpdate(clientid, clock): notify replica of the client's latest clock value (the "null" call) Return value uninterpreted.

- Disconnect(clientid): notify the replica that the client is disconnecting/failing. Return value uninterpreted.

Connectivity requirements:

- Replicas should not communicate to each other, nor be aware of each other (e.g., number of replicas, replica IPs, etc).

- In coordinating replication, clients should not communicate with each other, nor be aware of each other.

More precise "logical" Get/Put semantics:

- In servicing a "logical get", the client should select one replica on which to invoke the RPC get. The client does not need to, and should not, invoke this RPC against multiple replicas. If the replica fails while the get RPC is pending, the client should retry with another replica: the replica failure is not a reason to fail the logical get. The logical get, of course, must fail if all the replicas have failed.

- Client should translate a "logical put" into a set of put RPCs issued against all of the replicas (concurrently, or not is your choice). The client must block until all of the replicas have processed these RPCs (it should not issue any other RPC to any replica while servicing a logical put). If a replica fails while a client is waiting on it to process a put RPC, the client should ignore the replica (i.e., not re-issue the put RPC to it).

- All logical operations must be serialized at the client. For example, the client cannot issue a Disconnect before a Put returns (this is done to simplify things :-)

Maintaining lamport clocks:

- Clients should increment their local Lamport clocks at least before each replica RPC invocation, although you can increment them at a faster rate, if you'd like. Note that the ClockUpdate RPC triggers periodically, depending on the clock-update-rate command line argument given to the client.

- Note that the replicas do not participate in lamport clock maintenance (they passively observe clock values to order client operations, but do not need to maintain them themselves, nor pollute the client's clock space with their own values/updates).

Deliverables

- An email to Ivan with a link to your UBC git repository with your code.

- Your code repository should include a README file that:

- Explains your system design

- Describes how to set up your system and run it on a Linux machine

- Note: you do not have to deploy/test your assignment on Azure. Testing on your local machine and department servers is sufficient.

Honesty guidelines: You should do your work individually. Make sure to follow the course collaboration policy and refer to the assignments instructions that detail how to submit your solution.