416 Distributed Systems: Assignment 6

Due: Mar 24 at midnight

2016W2, Winter 2017

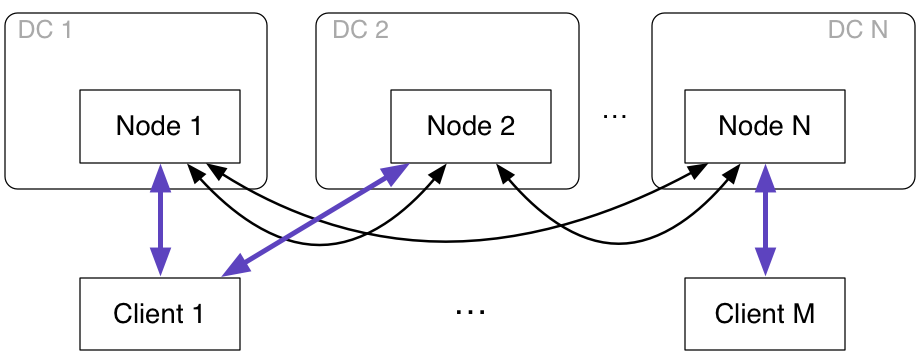

In this assignment you will build a distributed key-value service. Your service will use a collection of nodes to provide fault tolerance. Your service will provide transactional (ACID) semantics to clients without Durability. For this assignment you will use traditional concurrency control techniques. In the following assignment you will extend the back-end to use a block-chain protocol to mitigate byzantine nodes.

High-level description

A key-value store maintains a mapping of keys to values. Each key maps to exactly one value. Your key-value service will be composed of N nodes, each of which will replicate the entire key-value store; the system will therefore be available (continue to service clients) even if N-1 of the nodes fail. In this assignment your store must handle node failures. However, the set of nodes is static and nodes that fail do not come back.

Your service must provide transactional semantics to clients. Specifically, your service must provide ACID semantics, without traditional durability guarantees --- all state, including the key-value map, can be stored in memory (e.g., on failure of all N nodes, the entire key-value store is lost).

The details

There are two kinds of processes in the system: nodes, which maintain the key-value store and service key-value transactions; and clients, each of which connects to some nodes to execute some number of transactions.

- Nodes. The nodes are statically configured, all know one another, and all start within some bounded time window. Each node must be available to service client requests. A node may fail at any time in a fail-stop manner.

- Clients. There are an arbitrary number of clients. The clients do not know one another. Each client knows about all the nodes in the system, and connects/communicates with some or all of these nodes to coordinate its transaction processing. A client may fail at any time in a fail-stop manner.

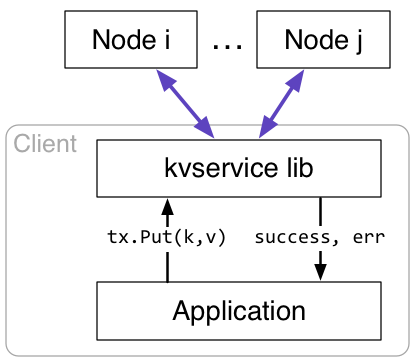

Client-service API. A client application uses a local library to interact with the service (see diagram on the right). This library exposes an interface that is detailed in the kvservice.go file. You are not allowed to change this client interface. However, you have design freedom to implement this interface however you want. For example, you can design the communication protocol between the client library and the service to use RPC/HTTP/TCP/etc, and you can make the client as stateful or as stateless as you want. Your library does not need to be thread safe.

You can use a stub implementation of the client-library API and a client application that uses it as starters:

- kvservice.go (implementation)

- client.go (client of kvservice.go)

Concurrency control. Non-conflicting client transactions are those that range over different sets of keys. These transactions must run in parallel. Conflicting client transactions must use either pessimistic or optimistic concurrency control.

Aborting transactions. Your service must abort a transaction in two cases: (1.must) when the client decides to abort a transaction, (2.must) when there is a deadlock between active (non-committed/non-aborted) transactions. Your service may abort a transaction in two cases: (1.may) when any one node has failed, and (2.may) when the client that originated the transaction has failed. Your system must not abort transactions except in these four cases.

When aborting transactions because of deadlock you must implement the following policy, respecting this order: (1) abort the minimal number of transactions necessary to resolve the deadlock (and for some transaction to make progress and commit), and (2) preferentially abort those transactions that have touched the fewest number of keys (number of unique keys accessed by all operations in a transaction). If there is a tie, choose the transaction to abort arbitrarily.

Node failures. Nodes may fail at any time. Your service must survive fail-stop node failures without compromising the ACI semantics. In particular, you may abort transactions when a node fails. The client library must properly handle this case (signal to the application that these transactions have aborted). The client must then transparently connect to and use a different available node for future transactions. Your service must be able to tolerate up to N-1 node failures without any loss of (committed) key-value state. In this context committed means committed transactions (all state must be maintained in memory).

Serializability semantics. Your service must provide transaction serializability semantics: execution of some number of transactions by your service must be equivalent to the execution of the same transactions in some linear sequence.

- If two transactions execute concurrently (i.e., one started while another has not yet been committed), then the serialized order of the two transactions (exposed to clients via the txID value returned in the commit call) is up to your system to decide. There is no constraint on this ordering.

- If a transaction, t1, committed, and another transaction, t2, began executing after t1 committed, then t2 should appear as having executed after t1, and it should receive a corresponding higher txID value.

Client failures. Clients may fail at any time. Your service must survive fail-stop client failures without compromising the ACI semantics. In particular, all outstanding transactions associated with the failed client must be safely aborted by the service.

Assumptions you can make

- Each node and each client will run in its own VM (e.g., will have a unique IP address and have the usual port range available).

- All nodes will start within 4 seconds of each other.

- Clients will not start until all nodes have started and have been running for 4 seconds.

- Clients will not issue get requests on keys that have no values (have not been written with a put request).

- The client library only needs to support one transaction at a time for the application it is hosting (does not need to support multiple concurrent transactions).

- Each node can be identified by a unique ip:port argument (give on the command line)

- No network failures.

- txID values must be monotonically increasing and correspond to the serialized ordering of transactions (as observed by the client). The txID values can skip numbers (e.g., txID for one transactions could be 5, and for the next transaction could be 42).

- Node-node and node-client round-trip times are at most 2s.

- The client will pass a list IP:port strings, one per kv node, in its call to NewConnection. Each of these IP:port strings will be an external IP:port that corresponds to the listen-client-in IP:port for some kvnode instances (see below). This list of IP:port strings is not guaranteed to be in any particular order.

- Nodes, started using your submitted kvnode.go file, will be passed an identical [nodesFile] argument.

Assumptions you cannot make

- Nodes have synchronized clocks (e.g., running on the same physical host).

- Perfectly reliable network (e.g., if you use UDP for your peer protocol, expect loss and reordering)

- Nodes/clients fail at some particular time or in some particular order.

Implementation requirements

- All nodes run the same code.

- The code, including the client library, must be runnable on Azure Ubuntu machines configured with Go 1.7.4 (see the azureinstall.sh script and the Google slides presentation from prior assignments for more info).

- Your solution can only use the standard library Go packages.

- Your solution code must be Gofmt'd using gofmt.

Extra credit

This assignment is not extensible.

Solution spec

You must submit kvnode.go, your version of kvservice.go, and any other go files your service implementation requires. Clients should be able to use your library by (1) setting GOPATH to the root of your repository, and (2) using import "./kvservice" at top of file.

The kvnode process command line usage must be:

go run kvnode.go [nodesFile] [nodeID] [listen-node-in IP:port] [listen-client-in IP:port]

- [nodesFile] : a file containing one line per node in the key-value service. Each line must be terminated by '\n' and indicates the IP:port that should be used to by this node to connect to the other nodes in the service.

- [nodeID] : an integer between 1 and number of lines in nodesFile. The IP:port on line i of nodesFile is the external IP:port corresponding to the listen-node-in IP:port, which will be used by other nodes to connect to this node.

- [listen-node-in IP:port] : the IP:port that this node should listen on to receive connections from other nodes.

- [listen-client-in IP:port] : the IP:port that this node should listen on to receive connections from clients.

Advice

- Be methodical: start simple and create increasingly more complex versions of your system. Start by creating a transactional key-value service with a single node and a single connecting client. Have this service support transactional semantics without handling node/client failures. Next, extend this design towards multiple clients that connect to the one node. Next, extend this design to several nodes where there is a single node that acts as a 'transaction manager' for all transactions. Then, remove this constraint and allow clients to connect to any node. Finally, add support for node/client failures.

- Think carefully about where you store state in your system. There are many kinds of state and you can store it some/all nodes, at the clients, or both. The more distributed your state is, the more challenging it is to coordinate updates to this state. But, distributed state can better survive failures.

- Unlike prior assignments, this assignment does not define the protocol between the clients and your service (client-node API). You can start with a direct RPC-based protocol to handle the common case, and then evolve this protocol as you run into corner cases.

- Much of the mark will be based on the functionality of your code without failures. Therefore, work towards a complete solution before extending it to work with client failures, and then with node failures.

- Concurrency control between multiple concurrent transactions is a major challenge in this assignment. Plan out the design of this mechanism before attempting an implementation. Do the same for mechanisms responsible for handling failures.

- Develop a suite of clients to test your implementation in both the failure-free case and in the case where a variety of failures occur.

Rough grading rubric

- 5%: No failures, 1-client, non-aborting txns

- 5%: No failures, 1-client, aborting and non-aborting txns

- 10%: No failures, n-clients, non-conflicting txns

- 15%: No failures, n-clients, conflicting txns

- 15%: No failures, n-clients, deadlocking txns progress check

- 5%: Client-failures, n-clients, incomplete txns abort

- 5%: Client-failures, n-clients, committed txns retained

- 2%: Node-failures, 1-client, kv-service available

- 2%: Node-failures, n-clients, kv-service available

- 2%: Node-failures, n-clients, aborting and non-aborting txns

- 5%: Node-failures, n-clients, non-conflicting txns

- 6%: Node-failures, n-clients, conflicting txns

- 8%: Node-failures, n-clients, deadlocking txns progress check

- 5%: Node+Client-failures, n-clients, non-conflicting txns

- 5%: Node+Client-failures, n-clients, conflicting txns

- 5%: Node+Client-failures, n-clients, deadlocking txns progress check

Make sure to follow the course collaboration policy and refer to the assignments instructions that detail how to submit your solution.