WHAT IS TCC?

Illuminant estimation leveraging temporal information

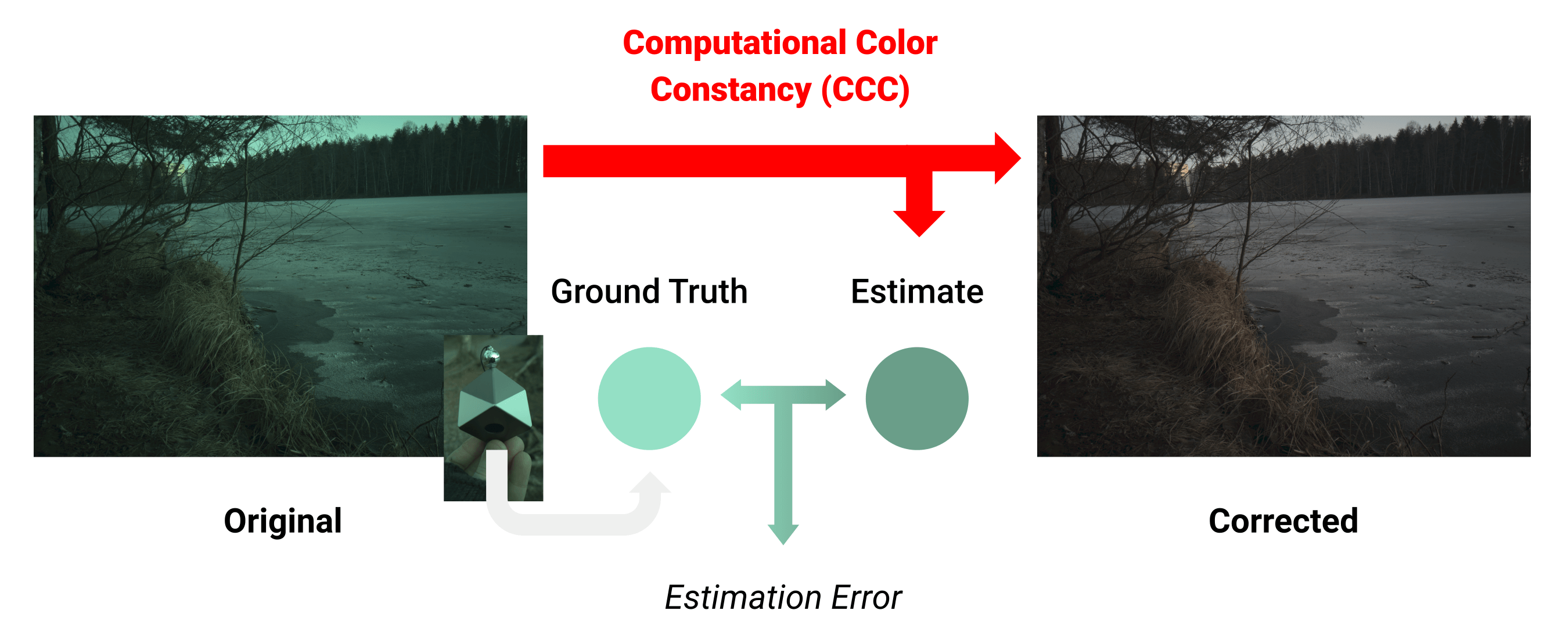

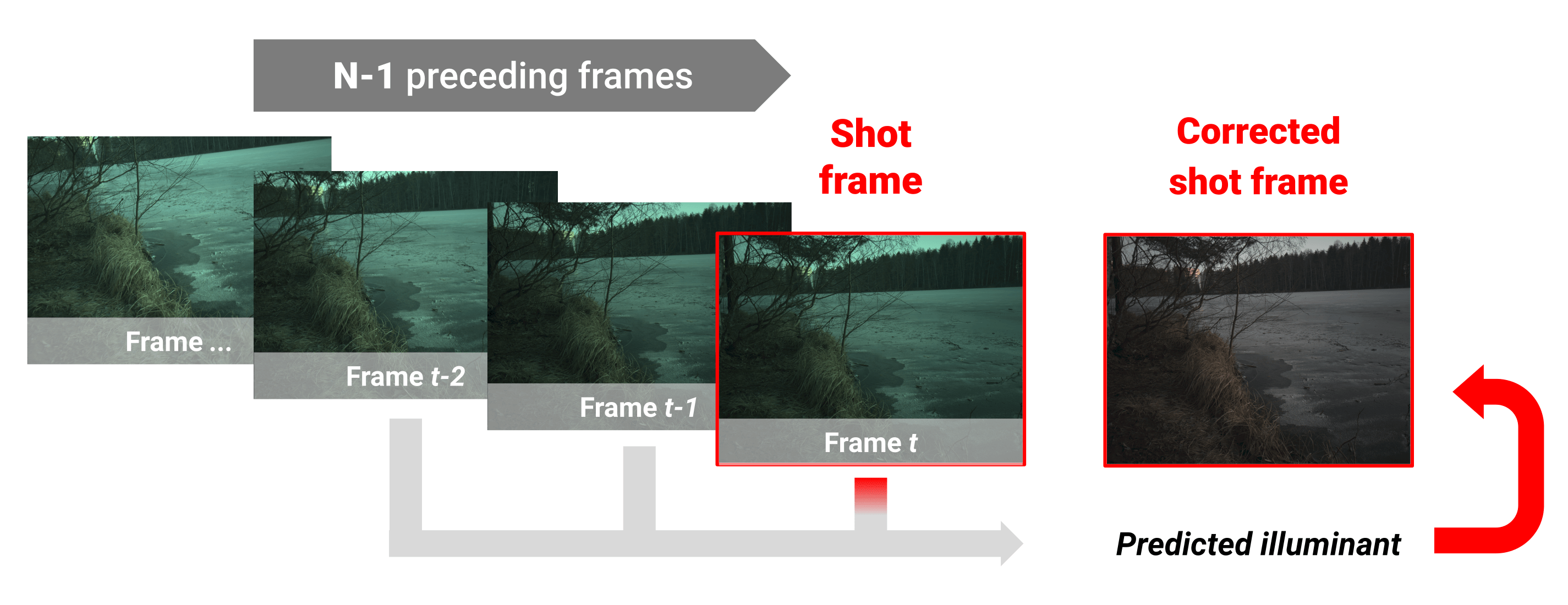

Computational Colour Constancy (CCC) consists of estimating the colour of one or more illuminants in a scene and using them to remove unwanted chromatic distortions. The Temporal Colour Constancy (TCC) task pursues the same aim but also leverages the temporal information intrinsic to sequences of correlated images (e.g., the frames in a video).

How color constancy works in general
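A minimal illustration of the two steps described above (estimate the illuminant, then remove the colour cast). The estimator is the classic Gray World assumption, which says the average scene colour is achromatic. The correction is a von Kries-style diagonal model. Both are simple textbook stand-ins, not the learned methods in the papers listed here. For simplicity, images are flat lists of linear (r, g, b) pixels, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def gray_world_estimate(pixels: List[RGB]) -> RGB:
    """Estimate the illuminant as the per-channel mean (Gray World assumption),
    normalised to unit length so that only its chromaticity matters."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    norm = math.sqrt(sum(m * m for m in means))
    return (means[0] / norm, means[1] / norm, means[2] / norm)


def von_kries_correct(pixels: List[RGB], illuminant: RGB) -> List[RGB]:
    """Remove the cast with a diagonal (von Kries) model: scale each channel
    by the inverse of the illuminant, relative to a neutral grey light."""
    neutral = 1.0 / math.sqrt(3.0)  # each component of a unit-length grey illuminant
    scale = [neutral / c for c in illuminant]
    return [(r * scale[0], g * scale[1], b * scale[2]) for r, g, b in pixels]


# A scene with a reddish cast: after correction its pixels become neutral grey.
scene = [(0.8, 0.4, 0.4), (0.4, 0.2, 0.2)]
illuminant = gray_world_estimate(scene)
print(von_kries_correct(scene, illuminant))
```

Both corrected pixels come out with equal R, G and B values. The diagonal model is chosen here because it is the simplest way to express "dividing out" an illuminant. Real pipelines typically apply it to linear RAW data.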

How TCC works specifically
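In TCC, the preceding frames of a sequence contribute evidence in addition to the frame being corrected. This sketch assumes, as in the viewfinder setting described in the papers below, that the sequence ends with the shot frame whose illuminant is wanted. It computes a Gray World estimate per frame and fuses the estimates over time with an exponential moving average. The moving average is only a hypothetical stand-in for a learned temporal model such as the ConvLSTM used by TCCNet. The `alpha` parameter and all function names are assumptions made for this example.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
Frame = List[RGB]  # one frame as a flat list of linear (r, g, b) pixels


def normalise(v: Sequence[float]) -> RGB:
    norm = math.sqrt(sum(x * x for x in v))
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def frame_estimate(frame: Frame) -> RGB:
    """Single-frame Gray World estimate (stand-in for a per-frame CNN encoder)."""
    return normalise([sum(p[c] for p in frame) / len(frame) for c in range(3)])


def temporal_estimate(frames: Sequence[Frame], alpha: float = 0.5) -> RGB:
    """Fuse per-frame estimates over a sequence that ends with the shot frame.

    The exponential moving average acts as a very simple recurrent state:
    each frame updates it in order, so frames closer to the shot weigh more.
    """
    state = frame_estimate(frames[0])
    for frame in frames[1:]:
        e = frame_estimate(frame)
        state = normalise([alpha * e[c] + (1 - alpha) * state[c] for c in range(3)])
    return state


# Viewfinder frames drift from a bluish to a reddish cast; the shot frame is last.
sequence = [[(0.3, 0.3, 0.6)], [(0.5, 0.3, 0.4)], [(0.6, 0.3, 0.3)]]
print(temporal_estimate(sequence))
```

With `alpha=1.0` the sketch reduces to single-frame estimation on the shot frame. Smaller values let earlier frames smooth the estimate. A learned model can instead decide per sequence how much each frame should count.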

RELEVANT PAPERS ON TCC

Cascading Convolutional Temporal Colour Constancy

Matteo Rizzo, Cristina Conati, Daesik Jang, Hui Hu (arXiv, 2021) [https://github.com/matteo-rizzo/cctcc]

Computational Colour Constancy (CCC) consists of estimating the colour of one or more illuminants in a scene and using them to remove unwanted chromatic distortions. Much research has focused on illuminant estimation for CCC on single images, with few attempts at leveraging the temporal information intrinsic to sequences of correlated images (e.g., the frames in a video), a task known as Temporal Colour Constancy (TCC). The state of the art for TCC is TCCNet, a deep-learning architecture that uses a ConvLSTM to aggregate the encodings produced by CNN submodules for each image in a sequence. We extend this architecture with different models obtained by (i) substituting the TCCNet submodules with C4, the state-of-the-art CCC method for single images; (ii) adding a cascading strategy that iteratively refines the illuminant estimate. We tested our models on the recently released TCC benchmark and achieved results that surpass the state of the art. Analyzing how the number of frames used for illuminant estimation affects performance, we show that inference time can be reduced by training the models on a few selected frames from each sequence while retaining comparable accuracy.
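The cascading strategy mentioned above can be sketched as follows. This illustrates the general idea only and is not the authors' code. Each stage estimates the residual colour cast left in the image after correcting it with all previous stages. In this sketch, the running estimate is the element-wise product of the stage outputs.

In C4 and the models above, each stage is a learned network. Here a hypothetical `damped_gray_world` estimator stands in for one stage. It deliberately recovers only part of the cast, so the benefit of iterating becomes visible. A plain Gray World stage would not show this benefit, because it fully neutralises its own estimate in a single step. All names are assumptions for this example.

```python
import math
from typing import Callable, List, Sequence, Tuple

RGB = Tuple[float, float, float]
Image = List[RGB]  # flat list of linear (r, g, b) pixels
NEUTRAL: RGB = (1 / math.sqrt(3),) * 3  # unit-length grey illuminant


def normalise(v: Sequence[float]) -> RGB:
    norm = math.sqrt(sum(x * x for x in v))
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def correct(image: Image, illuminant: RGB) -> Image:
    """Diagonal (von Kries) correction; a neutral illuminant leaves pixels unchanged."""
    scale = [n / c for n, c in zip(NEUTRAL, illuminant)]
    return [(r * scale[0], g * scale[1], b * scale[2]) for r, g, b in image]


def damped_gray_world(image: Image, strength: float = 0.5) -> RGB:
    """Hypothetical stage: a Gray World estimate pulled towards neutral,
    mimicking a model that recovers only part of the colour cast."""
    gw = normalise([sum(p[c] for p in image) / len(image) for c in range(3)])
    return normalise([strength * g + (1 - strength) * n for g, n in zip(gw, NEUTRAL)])


def cascade(image: Image, stage: Callable[[Image], RGB], n_stages: int = 3) -> RGB:
    """Run n_stages refinement steps and return the combined illuminant estimate."""
    total = NEUTRAL
    corrected = image
    for _ in range(n_stages):
        residual = stage(corrected)  # cast still left after previous stages
        total = normalise([t * r for t, r in zip(total, residual)])
        corrected = correct(image, total)  # re-correct the original with the running estimate
    return total


scene = [(0.8, 0.4, 0.4), (0.4, 0.2, 0.2)]
for k in (1, 2, 3):
    est = cascade(scene, damped_gray_world, k)
    print(k, est[0] / est[1])  # red/green ratio of the estimate; Gray World target is 2
```

One stage yields a red-to-green ratio of about 1.41 (√2). Each additional stage moves the ratio closer to the Gray World target of 2 without overshooting it.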

A Benchmark for Temporal Color Constancy

Yanlin Qian, Jani Käpylä, Joni-Kristian Kämäräinen, Samu Koskinen, Jiri Matas (arXiv, 2020) [https://github.com/yanlinqian/Temporal-Color-Constancy]

Temporal Color Constancy (TCC) is a recently proposed approach that challenges conventional single-frame color constancy (CC). The conventional approach uses a single frame (the shot frame) to estimate the scene illumination color. In temporal CC, multiple frames from the viewfinder sequence are used to estimate the color. However, there are no realistic large-scale temporal color constancy datasets for method evaluation. In this work, a new temporal CC benchmark is introduced. The benchmark comprises (1) 600 real-world sequences recorded with a high-resolution mobile phone camera, (2) a fixed train-test split which ensures consistent evaluation, and (3) a baseline method which achieves high accuracy on the new benchmark and on the dataset used in previous works. Results for more than 20 well-known color constancy methods, including recent state-of-the-art ones, are reported in our experiments.

DATASETS FOR TCC

TCC Dataset

Download links: RAW + PNG [~56 GB] or PNG [~14 GB]

Related paper: A Benchmark for Temporal Color Constancy

Yanlin Qian, Jani Käpylä, Joni-Kristian Kämäräinen, Samu Koskinen, Jiri Matas (arXiv, 2020)

The benchmark dataset comprises 600 real-world sequences recorded with a high-resolution mobile phone camera and a fixed train-test split which ensures consistent evaluation. Results for more than 20 well-known color constancy methods, including recent state-of-the-art ones, are reported in the related paper.
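Colour constancy benchmarks typically score a method by the recovery angular error between the estimated and ground-truth illuminant RGB vectors. The per-image errors are then summarised over the test set with statistics such as the mean and median. The sketch below assumes that metric. Check the related paper for its exact evaluation protocol and for the full set of statistics it reports. The function names are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple

RGB = Tuple[float, float, float]


def angular_error_deg(estimate: RGB, ground_truth: RGB) -> float:
    """Recovery angular error in degrees between two illuminant RGB vectors.
    It ignores the vectors' magnitude, so only chromaticity matters."""
    dot = sum(e * g for e, g in zip(estimate, ground_truth))
    norm = math.sqrt(sum(e * e for e in estimate)) * math.sqrt(sum(g * g for g in ground_truth))
    # Clamp to [-1, 1] so rounding cannot push the cosine outside acos's domain.
    cos = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos))


def summarise(estimates: Sequence[RGB], ground_truths: Sequence[RGB]) -> Dict[str, float]:
    """Per-image angular errors summarised as mean and median (lower is better)."""
    errors = [angular_error_deg(e, g) for e, g in zip(estimates, ground_truths)]
    return {"mean": statistics.mean(errors), "median": statistics.median(errors)}


print(summarise([(0.9, 0.5, 0.3), (0.4, 0.5, 0.8)], [(0.8, 0.5, 0.3), (0.4, 0.5, 0.8)]))
```

Because the error depends only on the angle between the vectors, an estimate that differs from the ground truth by a global brightness factor scores zero.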