
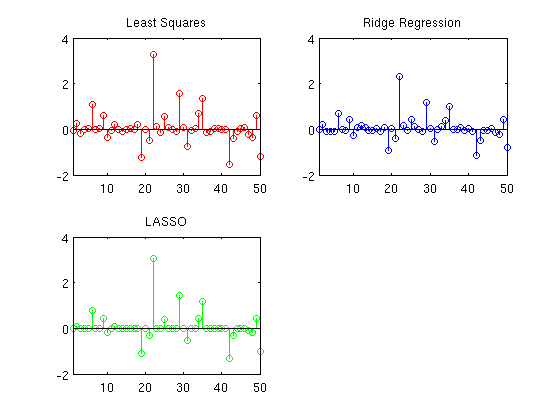

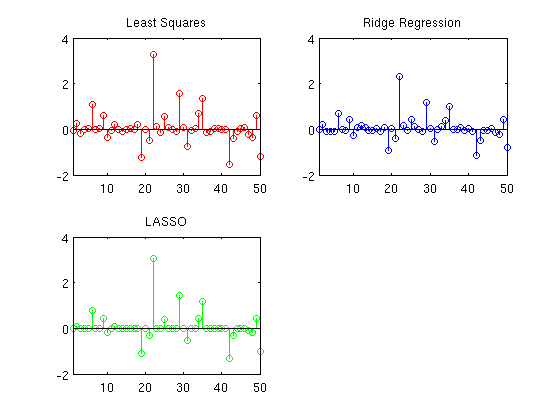

LASSO

nInstances = 250;

nVars = 50;

X = randn(nInstances,nVars);

y = X*((rand(nVars,1) > .5).*randn(nVars,1)) + randn(nInstances,1);

wLS = X\y;

lambda = 100*ones(nVars,1);

R = chol(X'*X + diag(lambda));  % Cholesky factor of the ridge normal equations

wRR = R\(R'\(X'*y));  % ridge solution via two triangular solves

lambda = 100*ones(nVars,1);

funObj = @(w)SquaredError(w,X,y);

w_init = wRR;

fprintf('\nComputing LASSO Coefficients...\n');

wLASSO = L1General2_PSSgb(funObj,w_init,lambda);

fprintf('Number of non-zero variables in Least Squares solution: %d\n',nnz(wLS));

fprintf('Number of non-zero variables in Ridge Regression solution: %d\n',nnz(wRR));

fprintf('Number of non-zero variables in LASSO solution: %d\n',nnz(wLASSO));

figure;

clf;hold on;

subplot(2,2,1);

stem(wLS,'r');

xlim([1 nVars]);

yl = ylim;

title('Least Squares');

subplot(2,2,2);

stem(wRR,'b');

xlim([1 nVars]);

ylim(yl);

title('Ridge Regression');

subplot(2,2,3);

stem(wLASSO,'g');

xlim([1 nVars]);

title('LASSO');

ylim(yl);

pause;

Computing LASSO Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 2.49592e-04 1.99301e+03 3.16398e+02 43

2 3 1.00000e+00 1.76918e+03 8.91349e+01 19

3 4 1.00000e+00 1.75367e+03 3.12464e+01 21

4 5 1.00000e+00 1.75160e+03 4.64930e+00 21

5 6 1.00000e+00 1.75145e+03 8.46870e-01 20

6 7 1.00000e+00 1.75145e+03 2.53280e-01 20

7 8 1.00000e+00 1.75145e+03 9.39831e-02 20

8 9 1.00000e+00 1.75145e+03 1.55213e-02 20

9 10 1.00000e+00 1.75145e+03 5.85301e-03 20

10 11 1.00000e+00 1.75145e+03 8.86230e-04 20

11 12 1.00000e+00 1.75145e+03 9.06708e-04 20

Progress in parameters or objective below progTol

Number of non-zero variables in Least Squares solution: 50

Number of non-zero variables in Ridge Regression solution: 50

Number of non-zero variables in LASSO solution: 20
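The counts above (50, 50, 20) reflect that only the L1 penalty drives coefficients exactly to zero. The following minimal sketch reproduces the effect on fresh synthetic data without L1General. It computes ridge regression with the same Cholesky solve and LASSO with ISTA (proximal gradient with soft-thresholding). ISTA is a swapped-in method, not the algorithm inside L1General2_PSSgb. The sketch also assumes the loss is sum((X*w - y).^2) with a scalar lambda.

```matlab
% Ridge vs. LASSO on synthetic data; LASSO solved by ISTA (illustrative choice).
% Assumed objective: sum((X*w - y).^2) + lambda*sum(abs(w)).
rng(0);
n = 250; p = 50;
X = randn(n,p);
y = X*((rand(p,1) > .5).*randn(p,1)) + randn(n,1);
lambda = 100;

% Ridge regression: solve (X'X + lambda*I) w = X'y with a Cholesky factor
R = chol(X'*X + lambda*eye(p));
wRR = R\(R'\(X'*y));

% ISTA: gradient step on the smooth loss, then soft-threshold
L = 2*norm(X)^2;                          % Lipschitz constant of 2*X'*(X*w - y)
soft = @(z,t) sign(z).*max(abs(z) - t, 0);
w = zeros(p,1);
for k = 1:5000
    g = 2*X'*(X*w - y);
    w = soft(w - g/L, lambda/L);
end

fprintf('Non-zeros: ridge %d, LASSO %d (of %d)\n', nnz(wRR), nnz(w), p);
```

Soft-thresholding returns an exact 0 whenever abs(z) <= t. The ridge update only rescales coefficients, so it never produces exact zeros.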

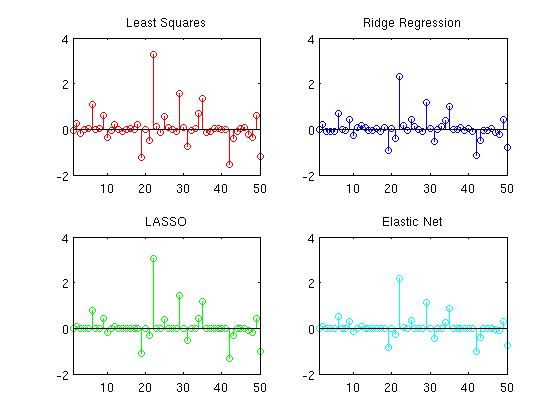

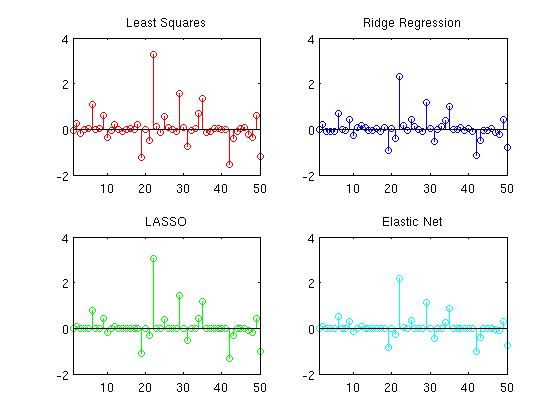

Elastic Net

lambdaL2 = 100*ones(nVars,1);

lambdaL1 = 100*ones(nVars,1);

penalizedFunObj = @(w)penalizedL2(w,funObj,lambdaL2);  % squared error plus an L2 penalty weighted by lambdaL2

fprintf('\nComputing Elastic Net Coefficients...\n');

wElastic = L1General2_PSSgb(penalizedFunObj,w_init,lambdaL1);

fprintf('Number of non-zero variables in Elastic Net solution: %d\n',nnz(wElastic));

subplot(2,2,4);

stem(wElastic,'c');

xlim([1 nVars]);

ylim(yl);

title('Elastic Net');

pause;

Computing Elastic Net Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 2.00000e-04 3.27151e+03 9.28964e+01 43

2 3 1.00000e+00 3.12917e+03 2.52775e+01 22

3 4 1.00000e+00 3.12721e+03 1.26982e+01 22

4 5 1.00000e+00 3.12675e+03 1.25912e+00 21

5 6 1.00000e+00 3.12674e+03 2.57555e-01 21

6 7 1.00000e+00 3.12674e+03 1.07619e-01 21

7 8 1.00000e+00 3.12674e+03 1.64976e-02 21

8 9 1.00000e+00 3.12674e+03 1.06693e-03 21

9 10 1.00000e+00 3.12674e+03 4.13000e-04 21

10 11 1.00000e+00 3.12674e+03 7.03746e-05 21

Progress in parameters or objective below progTol

Number of non-zero variables in Elastic Net solution: 21
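The following sketch illustrates the elastic-net objective. It assumes that penalizedL2 adds lambdaL2*sum(w.^2) to the squared error, which is an assumption about that helper. It then solves the combined problem with a proximal-gradient loop instead of L1General2_PSSgb. The L2 term is folded into the proximal step, whose closed form is a soft-threshold followed by division by (1 + 2*t*l2).

```matlab
% Elastic net by proximal gradient (a sketch; not the L1General2_PSSgb algorithm).
% Assumed objective: sum((X*w - y).^2) + l2*sum(w.^2) + l1*sum(abs(w)).
rng(0);
n = 250; p = 50;
X = randn(n,p);
y = X*((rand(p,1) > .5).*randn(p,1)) + randn(n,1);
l1 = 100;                                 % plays the role of lambdaL1
l2 = 100;                                 % plays the role of lambdaL2

t = 1/(2*norm(X)^2);                      % step 1/L for the squared-error term
soft = @(z,s) sign(z).*max(abs(z) - s, 0);
w = zeros(p,1);
for k = 1:5000
    v = w - t*(2*X'*(X*w - y));           % gradient step on the squared error only
    w = soft(v, t*l1)/(1 + 2*t*l2);       % prox of l1*|w| + l2*w.^2
end
fprintf('Elastic-net non-zeros: %d of %d\n', nnz(w), p);
```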

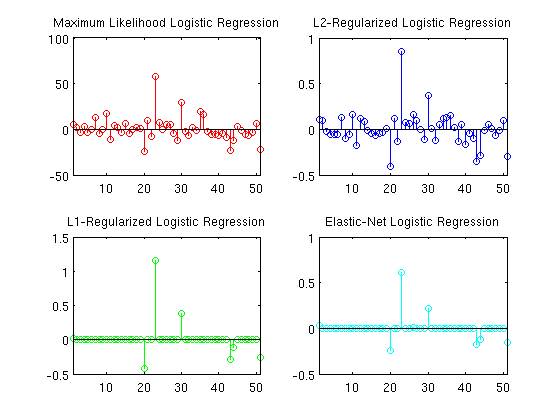

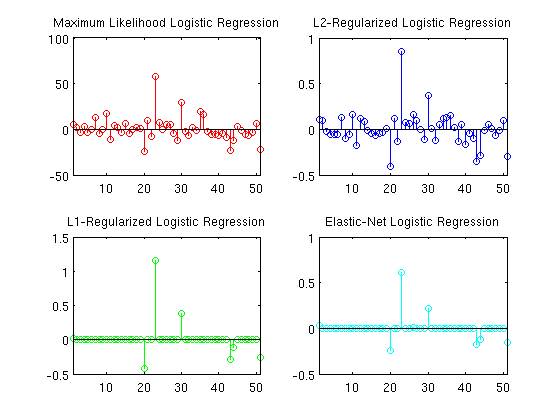

Logistic Regression

X = [ones(nInstances,1) X];

y = sign(y);

funObj = @(w)LogisticLoss(w,X,y);

w_init = zeros(nVars+1,1);

fprintf('\nComputing Maximum Likelihood Logistic Regression Coefficients\n');

mfOptions.Method = 'newton';

wLogML = minFunc(funObj,w_init,mfOptions);

fprintf('\nComputing L2-Regularized Logistic Regression Coefficients...\n');

lambda = 15*ones(nVars+1,1);

lambda(1) = 0;

funObjL2 = @(w)penalizedL2(w,funObj,lambda);

wLogL2 = minFunc(funObjL2,w_init,mfOptions);

fprintf('\nComputing L1-Regularized Logistic Regression Coefficients...\n');

wLogL1 = L1General2_PSSgb(funObj,w_init,lambda);

fprintf('\nComputing Elastic-Net Logistic Regression Coefficients...\n');

wLogL1L2 = L1General2_PSSgb(funObjL2,w_init,lambda);

figure;

clf;hold on;

subplot(2,2,1);

stem(wLogML,'r');

xlim([1 nVars+1]);

title('Maximum Likelihood Logistic Regression');

subplot(2,2,2);

stem(wLogL2,'b');

xlim([1 nVars+1]);

title('L2-Regularized Logistic Regression');

subplot(2,2,3);

stem(wLogL1,'g');

xlim([1 nVars+1]);

title('L1-Regularized Logistic Regression');

subplot(2,2,4);

stem(wLogL1L2,'c');

xlim([1 nVars+1]);

title('Elastic-Net Logistic Regression');

fprintf('Number of Features Selected by Maximum Likelihood Logistic Regression classifier: %d (out of %d)\n',nnz(wLogML(2:end)),nVars);

fprintf('Number of Features Selected by L2-regularized Logistic Regression classifier: %d (out of %d)\n',nnz(wLogL2(2:end)),nVars);

fprintf('Number of Features Selected by L1-regularized Logistic Regression classifier: %d (out of %d)\n',nnz(wLogL1(2:end)),nVars);

fprintf('Number of Features Selected by Elastic-Net Logistic Regression classifier: %d (out of %d)\n',nnz(wLogL1L2(2:end)),nVars);

fprintf('Classification error rate on training data for L1-regularized Logistic Regression: %.2f\n',sum(y ~= sign(X*wLogL1))/length(y));

pause;

Computing Maximum Likelihood Logistic Regression Coefficients

Iteration FunEvals Step Length Function Val Opt Cond

1 2 1.00000e+00 7.07045e+01 1.97507e+01

2 3 1.00000e+00 4.23053e+01 8.07759e+00

3 4 1.00000e+00 2.66959e+01 3.49396e+00

4 5 1.00000e+00 1.62143e+01 1.55590e+00

5 6 1.00000e+00 8.10661e+00 6.90238e-01

6 7 1.00000e+00 3.38291e+00 2.82238e-01

7 8 1.00000e+00 1.25949e+00 1.07228e-01

8 9 1.00000e+00 4.61854e-01 3.98042e-02

9 10 1.00000e+00 1.69851e-01 1.47850e-02

10 11 1.00000e+00 6.26252e-02 5.48087e-03

11 12 1.00000e+00 2.31072e-02 2.02741e-03

12 13 1.00000e+00 8.52514e-03 7.48738e-04

13 14 1.00000e+00 3.14421e-03 2.76176e-04

14 15 1.00000e+00 1.15922e-03 1.01770e-04

15 16 1.00000e+00 4.27240e-04 3.74723e-05

16 17 1.00000e+00 1.57415e-04 1.37887e-05

17 18 1.00000e+00 5.79822e-05 5.07135e-06

Optimality Condition below optTol

Computing L2-Regularized Logistic Regression Coefficients...

Iteration FunEvals Step Length Function Val Opt Cond

1 2 1.00000e+00 1.05577e+02 8.83408e+00

2 3 1.00000e+00 1.03449e+02 5.21616e-01

3 4 1.00000e+00 1.03438e+02 3.09434e-03

4 5 1.00000e+00 1.03438e+02 1.26932e-07

Optimality Condition below optTol

Computing L1-Regularized Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 6.00892e-03 1.50194e+02 3.29826e+01 11

2 3 1.00000e+00 1.35832e+02 8.96380e+00 7

3 4 1.00000e+00 1.34305e+02 2.60035e+00 7

4 5 1.00000e+00 1.34139e+02 3.92810e-01 7

5 6 1.00000e+00 1.34136e+02 1.24296e-01 7

6 7 1.00000e+00 1.34135e+02 6.21160e-02 7

7 8 1.00000e+00 1.34135e+02 1.12322e-03 7

8 9 1.00000e+00 1.34135e+02 1.24925e-04 7

9 10 1.00000e+00 1.34135e+02 8.38783e-06 7

First-order optimality below optTol

Computing Elastic-Net Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 6.00892e-03 1.52889e+02 2.33081e+01 11

2 3 1.00000e+00 1.48589e+02 2.77799e+00 8

3 4 1.00000e+00 1.48514e+02 2.03804e-01 8

4 5 1.00000e+00 1.48514e+02 1.74764e-02 8

5 6 1.00000e+00 1.48514e+02 3.36673e-03 8

6 7 1.00000e+00 1.48514e+02 1.50247e-04 8

Directional derivative below progTol

Number of Features Selected by Maximum Likelihood Logistic Regression classifier: 50 (out of 50)

Number of Features Selected by L2-regularized Logistic Regression classifier: 50 (out of 50)

Number of Features Selected by L1-regularized Logistic Regression classifier: 6 (out of 50)

Number of Features Selected by Elastic-Net Logistic Regression classifier: 7 (out of 50)

Classification error rate on training data for L1-regularized Logistic Regression: 0.10
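This section does not show the definition of LogisticLoss. The sketch below assumes it is the standard negative log-likelihood for labels in {-1,+1}, sum(log(1 + exp(-y.*(X*w)))). It evaluates that loss in a numerically stable way and checks the analytic gradient against central finite differences. This kind of check is worth running before passing any custom funObj to minFunc or L1General2_PSSgb.

```matlab
% Logistic loss for labels y in {-1,+1} and its gradient, checked by finite differences.
% Assumed form: sum(log(1 + exp(-y.*(X*w)))).
rng(0);
n = 100; p = 5;
X = [ones(n,1) randn(n,p-1)];
y = sign(randn(n,1));
w = randn(p,1);

% stable evaluation: log(1 + exp(-m)) = max(-m,0) + log(1 + exp(-|m|))
lossFn = @(v) sum(max(-y.*(X*v), 0) + log1p(exp(-abs(y.*(X*v)))));
gradFn = @(v) -X'*(y./(1 + exp(y.*(X*v))));

h = 1e-6;
gNum = zeros(p,1);
for j = 1:p
    e = zeros(p,1); e(j) = h;
    gNum(j) = (lossFn(w + e) - lossFn(w - e))/(2*h);   % central difference
end
fprintf('loss %.4f, max gradient error %.2e\n', lossFn(w), max(abs(gradFn(w) - gNum)));
```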

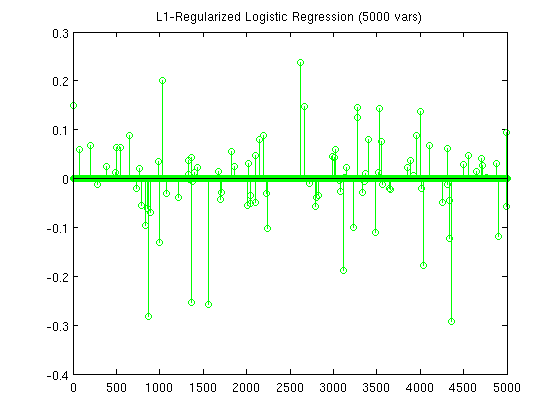

L-BFGS Variant

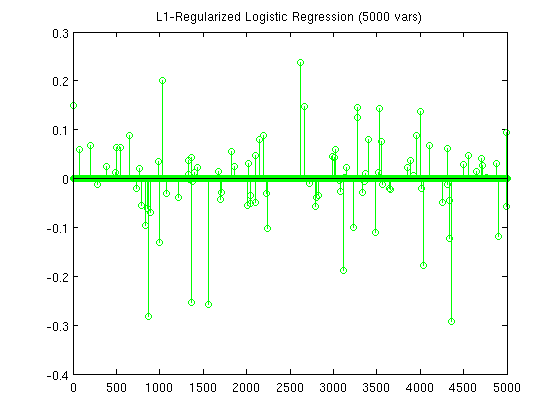

nVars = 5000;

X = [ones(nInstances,1) randn(nInstances,nVars-1)];

y = sign(X*((rand(nVars,1) > .5).*randn(nVars,1)) + randn(nInstances,1));

lambda = 10*ones(nVars,1);

lambda(1) = 0;

funObj = @(w)LogisticLoss(w,X,y);

fprintf('\nComputing Logistic Regression Coefficients for model with %d variables with L-BFGS\n',nVars);

wLogistic = L1General2_PSSgb(funObj,zeros(nVars,1),lambda);

figure;

clf;

stem(wLogistic,'g');

title(sprintf('L1-Regularized Logistic Regression (%d vars)',nVars));

fprintf('Number of Features Selected by Logistic Regression classifier: %d (out of %d)\n',nnz(wLogistic(2:end)),nVars);

fprintf('Classification error rate on training data: %.2f\n',sum(y ~= sign(X*wLogistic))/length(y));

pause;

Computing Logistic Regression Coefficients for model with 5000 variables with L-BFGS

Iter fEvals stepLen fVal optCond nnz

1 2 2.24113e-04 1.67068e+02 1.96701e+01 1147

2 3 1.00000e+00 1.48643e+02 8.84668e+00 768

3 4 1.00000e+00 1.45242e+02 6.51621e+00 583

4 5 1.00000e+00 1.42914e+02 4.75094e+00 386

5 6 1.00000e+00 1.39937e+02 3.44001e+00 312

6 7 1.00000e+00 1.36860e+02 2.99006e+00 191

7 8 1.00000e+00 1.36225e+02 4.07078e+00 122

Backtracking...

Backtracking...

Backtracking...

Backtracking...

8 13 6.59677e-04 1.36204e+02 3.92474e+00 125

9 14 1.00000e+00 1.35018e+02 2.49947e+00 128

10 15 1.00000e+00 1.34552e+02 1.19953e+00 120

11 16 1.00000e+00 1.34424e+02 1.19847e+00 110

12 17 1.00000e+00 1.34295e+02 6.10298e-01 106

Backtracking...

13 19 8.03893e-03 1.34287e+02 6.31270e-01 109

14 20 1.00000e+00 1.34267e+02 1.10060e+00 106

15 21 1.00000e+00 1.34223e+02 3.80897e-01 101

16 22 1.00000e+00 1.34209e+02 1.68550e-01 102

17 23 1.00000e+00 1.34203e+02 1.52216e-01 101

18 24 1.00000e+00 1.34201e+02 1.75575e-01 100

19 25 1.00000e+00 1.34199e+02 1.29820e-01 99

20 26 1.00000e+00 1.34199e+02 6.71145e-02 99

Backtracking...

21 28 3.08890e-01 1.34198e+02 3.54430e-02 99

22 29 1.00000e+00 1.34198e+02 2.49175e-02 99

23 30 1.00000e+00 1.34198e+02 1.44747e-02 99

24 31 1.00000e+00 1.34198e+02 2.46634e-02 99

25 32 1.00000e+00 1.34198e+02 3.25376e-02 98

26 33 1.00000e+00 1.34198e+02 4.11185e-03 99

27 34 1.00000e+00 1.34198e+02 2.29290e-03 99

28 35 1.00000e+00 1.34198e+02 2.41097e-03 99

29 36 1.00000e+00 1.34198e+02 1.80338e-03 99

30 37 1.00000e+00 1.34198e+02 4.79784e-04 99

31 38 1.00000e+00 1.34198e+02 2.82431e-04 99

32 39 1.00000e+00 1.34198e+02 1.58254e-04 99

33 40 1.00000e+00 1.34198e+02 1.12707e-04 99

34 41 1.00000e+00 1.34198e+02 1.27808e-04 99

35 42 1.00000e+00 1.34198e+02 3.38671e-05 99

Directional derivative below progTol

Number of Features Selected by Logistic Regression classifier: 98 (out of 5000)

Classification error rate on training data: 0.00
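Setting lambda(1) = 0 leaves the bias unpenalized. The sketch below shows how such a per-coordinate lambda vector enters a proximal-gradient solver for L1-regularized logistic regression. It uses ISTA with step 1/L, where L = norm(X)^2/4 bounds the curvature of the logistic loss. The problem is smaller than the 5000-variable demo, the logistic loss form is assumed, and the method is an illustration rather than the solver used above.

```matlab
% L1-regularized logistic regression with an unpenalized bias, by proximal gradient.
% Assumed objective: sum(log(1 + exp(-y.*(X*w)))) + sum(lambda.*abs(w)).
rng(0);
n = 100; p = 200;
X = [ones(n,1) randn(n,p-1)];
y = sign(X*((rand(p,1) > .5).*randn(p,1)) + randn(n,1));
lambda = 10*ones(p,1);
lambda(1) = 0;                                  % do not shrink the bias

loss = @(w) sum(max(-y.*(X*w),0) + log1p(exp(-abs(y.*(X*w)))));
grad = @(w) -X'*(y./(1 + exp(y.*(X*w))));
t = 4/norm(X)^2;                                % 1/L, with L = norm(X)^2/4
soft = @(z,s) sign(z).*max(abs(z) - s, 0);

w = zeros(p,1);
F0 = loss(w) + sum(lambda.*abs(w));
for k = 1:2000
    w = soft(w - t*grad(w), t*lambda);          % lambda(1) = 0 leaves the bias unthresholded
end
F = loss(w) + sum(lambda.*abs(w));
fprintf('Objective %.3f -> %.3f, features selected: %d of %d\n', F0, F, nnz(w(2:end)), p-1);
```

With step size 1/L, each proximal-gradient step cannot increase the objective, which makes a simple monotonicity check possible.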

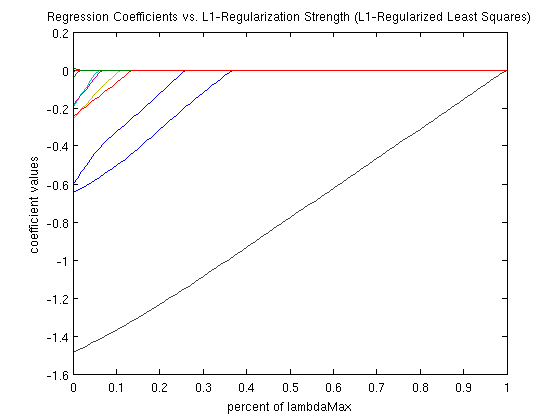

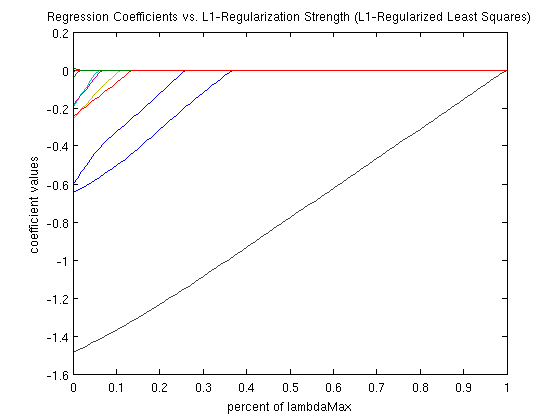

Lasso Regularization Path

nInstances = 100;

nVars = 10;

X = randn(nInstances,nVars);

y = X*((rand(nVars,1) > .5).*randn(nVars,1)) + randn(nInstances,1);

[f,g] = SquaredError(zeros(nVars,1),X,y);  % gradient of the loss at w = 0

lambdaMax = max(abs(g));  % for lambda >= lambdaMax the L1 solution is all zeros

lambdaInc = .01;

fprintf('Computing Least Squares L1-Regularization path\n');

funObj = @(w)SquaredError(w,X,y);

w = zeros(nVars,1);

options = struct('verbose',0);

for mult = 1-lambdaInc:-lambdaInc:0

lambda = mult*lambdaMax*ones(nVars,1);

w(:,end+1) = L1General2_PSSgb(funObj,w(:,end),lambda,options);

end

figure;xData = 1:-lambdaInc:0;

yData = w;

plot(xData,yData);

title('Regression Coefficients vs. L1-Regularization Strength (L1-Regularized Least Squares)');

xlabel('percent of lambdaMax');

ylabel('coefficient values');

pause;

Computing Least Squares L1-Regularization path
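lambdaMax is the smallest penalty for which w = 0 is optimal. For a convex loss f, zero solves the L1 problem exactly when max(abs(gradient of f at 0)) <= lambda. The sketch below traces a short path downward from lambdaMax with warm starts. It assumes the loss is sum((X*w - y).^2), so the gradient at zero is -2*X'*y, and it uses ISTA in place of L1General2_PSSgb.

```matlab
% Lasso regularization path with warm starts, solved by ISTA.
% Assumed loss: sum((X*w - y).^2).
rng(0);
n = 100; p = 10;
X = randn(n,p);
y = X*((rand(p,1) > .5).*randn(p,1)) + randn(n,1);

g0 = 2*X'*(X*zeros(p,1) - y);     % gradient of the loss at w = 0
lambdaMax = max(abs(g0));         % for lambda >= lambdaMax, w = 0 is optimal
mults = 1:-0.1:0.1;
L = 2*norm(X)^2;
soft = @(z,t) sign(z).*max(abs(z) - t, 0);

W = zeros(p, numel(mults));
w = zeros(p,1);
for i = 1:numel(mults)
    lambda = mults(i)*lambdaMax;
    for k = 1:1000                % warm start from the previous solution
        g = 2*X'*(X*w - y);
        w = soft(w - g/L, lambda/L);
    end
    W(:,i) = w;
end
disp(sum(W ~= 0, 1));             % number of non-zeros at each lambda
```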

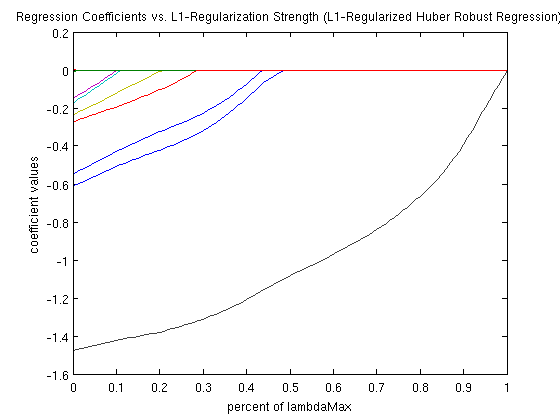

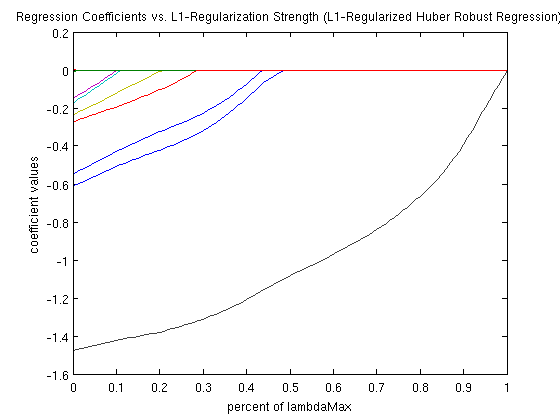

Huber Robust Regression Regularization Path

changePoint = 1;

[f,g] = HuberLoss(zeros(nVars,1),X,y,changePoint);

lambdaMax = max(abs(g));

lambdaInc = .01;

fprintf('Computing Huber Robust Regression L1-Regularization path\n');

funObj = @(w)HuberLoss(w,X,y,changePoint);

w = zeros(nVars,1);

options = struct('verbose',0);

for mult = 1-lambdaInc:-lambdaInc:0

lambda = mult*lambdaMax*ones(nVars,1);

w(:,end+1) = L1General2_PSSgb(funObj,w(:,end),lambda,options);

end

figure;xData = 1:-lambdaInc:0;

yData = w;

plot(xData,yData);

title('Regression Coefficients vs. L1-Regularization Strength (L1-Regularized Huber Robust Regression)');

xlabel('percent of lambdaMax');

ylabel('coefficient values');

pause;

Computing Huber Robust Regression L1-Regularization path
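The Huber path follows the same recipe as the least-squares path; only the loss changes. HuberLoss's exact scaling is not shown here, so the sketch assumes one common form: rho(r) = r^2 for abs(r) <= c and 2*c*abs(r) - c^2 beyond. This form is quadratic near zero, linear in the tails, and continuously differentiable at the change point. The sketch checks the gradient X'*rho'(r) by finite differences and computes lambdaMax from the gradient at zero.

```matlab
% Huber loss for robust regression and its gradient (one common scaling; assumed).
% rho(r) = r^2 if |r| <= c, and 2*c*|r| - c^2 otherwise.
rng(0);
n = 100; p = 10; c = 1;
X = randn(n,p);
y = X*randn(p,1) + randn(n,1);
y(1:5) = y(1:5) + 20;                          % a few gross outliers

rho = @(r) (abs(r) <= c).*r.^2 + (abs(r) > c).*(2*c*abs(r) - c^2);
f = @(w) sum(rho(X*w - y));
grad = @(w) X'*(2*max(-c, min(c, X*w - y)));   % rho'(r) = 2*clip(r, -c, c)

w = randn(p,1);
h = 1e-6; gNum = zeros(p,1);
for j = 1:p
    e = zeros(p,1); e(j) = h;
    gNum(j) = (f(w + e) - f(w - e))/(2*h);
end
lambdaMax = max(abs(grad(zeros(p,1))));        % start of the L1 path
fprintf('max gradient error %.2e, lambdaMax %.3f\n', max(abs(grad(w) - gNum)), lambdaMax);
```

Because rho' is clipped at 2*c, each outlier contributes a bounded amount to the gradient, which is the source of the robustness.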

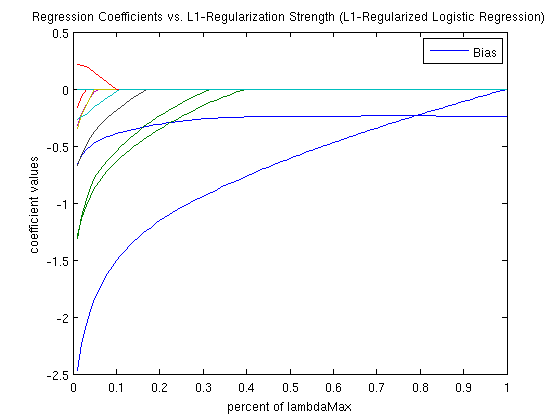

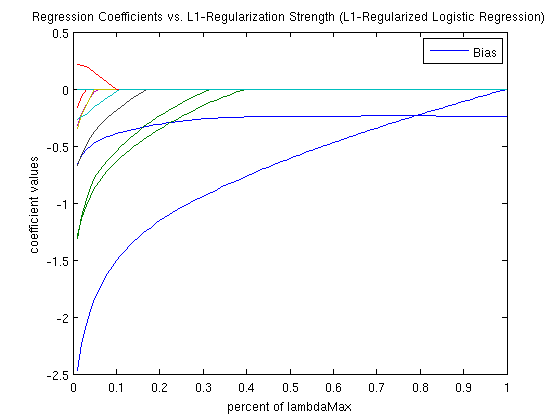

Logistic Regression Regularization Path

X = [ones(nInstances,1) X];

y = sign(y);

funObjBias = @(w)LogisticLoss(w,ones(nInstances,1),y);

bias = minFunc(funObjBias,0,struct('Display','none'));  % fit an intercept-only model first; the bias is never penalized

w = [bias;zeros(nVars,1)];

[f,g] = LogisticLoss(w,X,y);

lambdaMax = max(abs(g));

lambdaInc = .01;

fprintf('Computing Logistic Regression L1-Regularization path\n');

funObj = @(w)LogisticLoss(w,X,y);

for mult = 1-lambdaInc:-lambdaInc:lambdaInc

lambda = [0;mult*lambdaMax*ones(nVars,1)];

w(:,end+1) = L1General2_PSSgb(funObj,w(:,end),lambda,options);

end

figure;

plot(1:-lambdaInc:lambdaInc,w');

legend({'Bias'});

title('Regression Coefficients vs. L1-Regularization Strength (L1-Regularized Logistic Regression)');

xlabel('percent of lambdaMax');

ylabel('coefficient values');

pause;

Computing Logistic Regression L1-Regularization path
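Before computing lambdaMax, the demo fits the bias alone with minFunc. For labels in {-1,+1} and the standard logistic loss sum(log(1 + exp(-y.*(X*w)))), which is assumed here, that one-dimensional problem has a closed form: bias = log(nPos/nNeg). The sketch checks that the derivative with respect to the bias vanishes there. It then takes lambdaMax over the remaining coordinates only.

```matlab
% Intercept-only logistic model: closed-form bias and the resulting lambdaMax.
% Assumes labels in {-1,+1} and loss sum(log(1 + exp(-y.*(X*w)))).
rng(0);
n = 100; p = 10;
X = [ones(n,1) randn(n,p)];
y = sign(X*[0.5; (rand(p,1) > .5).*randn(p,1)] + randn(n,1));

nPos = sum(y == 1); nNeg = sum(y == -1);
bias = log(nPos/nNeg);                          % minimizer of the bias-only loss
w0 = [bias; zeros(p,1)];
g = -X'*(y./(1 + exp(y.*(X*w0))));             % gradient at [bias; 0]
lambdaMax = max(abs(g(2:end)));                 % the bias is excluded from the penalty
fprintf('bias %.4f, d/dbias %.2e, lambdaMax %.3f\n', bias, g(1), lambdaMax);
```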

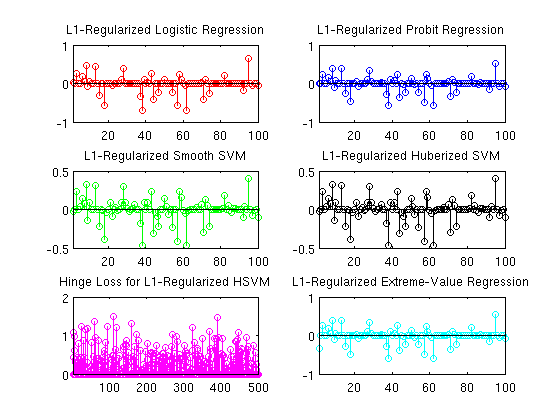

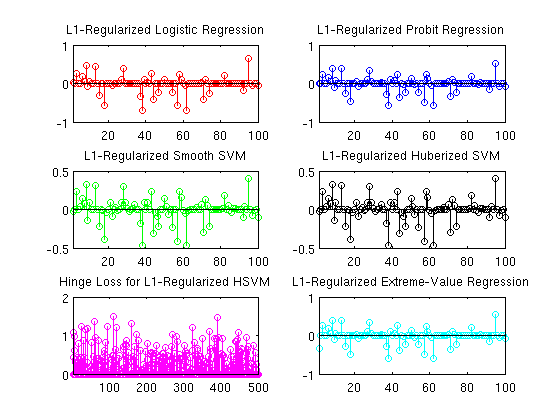

Logistic, Probit, and Extreme-Value Regression, Smooth SVM, Huberized SVM

nInstances = 500;

nVars = 100;

X = randn(nInstances,nVars);

y = sign(X*((rand(nVars,1) > .5).*randn(nVars,1)) + randn(nInstances,1)/5);

X = [ones(nInstances,1) X];

lambda = [0;10*ones(nVars,1)];

fprintf('\nComputing Logistic Regression Coefficients...\n');

funObj = @(w)LogisticLoss(w,X,y);

wLogit = L1General2_PSSgb(funObj,zeros(nVars+1,1),lambda);

fprintf('\nComputing Probit Regression Coefficients...\n');

funObj = @(w)ProbitLoss(w,X,y);

wProbit = L1General2_PSSgb(funObj,zeros(nVars+1,1),lambda);

fprintf('\nComputing Smooth Support Vector Machine Coefficients...\n');

funObj = @(w)SSVMLoss(w,X,y);

wSSVM = L1General2_PSSgb(funObj,zeros(nVars+1,1),lambda);

fprintf('\nComputing Huberized Support Vector Machine Coefficients...\n');

t = .5;

funObj = @(w)HuberSVMLoss(w,X,y,t);

wHSVM = L1General2_PSSgb(funObj,zeros(nVars+1,1),lambda);

fprintf('\nComputing Extreme Value Regression Coefficients...\n');

funObj = @(w)ExtremeLoss(w,X,y);

wExtreme = L1General2_PSSgb(funObj,zeros(nVars+1,1),lambda);

figure;

clf;hold on;

subplot(3,2,1);

stem(wLogit,'r');

xlim([1 nVars+1]);

title('L1-Regularized Logistic Regression');

subplot(3,2,2);

stem(wProbit,'b');

xlim([1 nVars+1]);

title('L1-Regularized Probit Regression');

subplot(3,2,3);

stem(wSSVM,'g');

xlim([1 nVars+1]);

title('L1-Regularized Smooth SVM');

subplot(3,2,4);

stem(wHSVM,'k');

xlim([1 nVars+1]);

title('L1-Regularized Huberized SVM');

subplot(3,2,5);

stem(max(0,1-y.*(X*wHSVM)),'m');

xlim([1 nInstances]);

title('Hinge Loss for L1-Regularized HSVM');

subplot(3,2,6);

stem(wExtreme,'c');

xlim([1 nVars+1]);

title('L1-Regularized Extreme-Value Regression');

fprintf('Number of non-zero variables for Logistic Regression: %d out of %d\n',nnz(wLogit(2:end)),nVars);

fprintf('Number of non-zero variables for Probit Regression: %d out of %d\n',nnz(wProbit(2:end)),nVars);

fprintf('Number of non-zero variables for Smooth Support Vector Machine: %d out of %d',nnz(wSSVM(2:end)),nVars);

fprintf(' (%d support vectors)\n',sum(1-y.*(X*wSSVM)>=0));

fprintf('Number of non-zero variables for Huberized Support Vector Machine: %d out of %d',nnz(wHSVM(2:end)),nVars);

fprintf(' (%d support vectors)\n',sum(1-y.*(X*wHSVM)>=0));

fprintf('Number of non-zero variables for Extreme Value Regression: %d out of %d\n',nnz(wExtreme(2:end)),nVars);

pause;

Computing Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 1.11219e-03 3.16670e+02 5.24024e+01 57

2 3 1.00000e+00 2.35672e+02 1.43651e+01 41

3 4 1.00000e+00 2.25972e+02 5.97437e+00 39

4 5 1.00000e+00 2.23176e+02 2.36338e+00 39

5 6 1.00000e+00 2.23072e+02 2.01425e+00 36

6 7 1.00000e+00 2.22938e+02 3.12517e-01 38

7 8 1.00000e+00 2.22937e+02 8.83875e-02 38

8 9 1.00000e+00 2.22937e+02 1.42327e-02 38

9 10 1.00000e+00 2.22937e+02 4.08680e-03 38

10 11 1.00000e+00 2.22937e+02 2.92593e-03 38

11 12 1.00000e+00 2.22937e+02 3.79409e-04 38

12 13 1.00000e+00 2.22937e+02 1.28940e-04 38

13 14 1.00000e+00 2.22937e+02 2.42499e-05 38

Progress in parameters or objective below progTol

Computing Probit Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 5.54304e-04 3.00238e+02 8.39334e+01 70

2 3 1.00000e+00 2.02146e+02 2.79057e+01 55

3 4 1.00000e+00 1.84718e+02 1.19909e+01 54

4 5 1.00000e+00 1.78376e+02 7.11523e+00 52

5 6 1.00000e+00 1.77394e+02 5.00142e+00 47

6 7 1.00000e+00 1.77010e+02 1.53044e+00 48

7 8 1.00000e+00 1.76994e+02 4.22121e-01 49

8 9 1.00000e+00 1.76991e+02 1.09574e-01 49

9 10 1.00000e+00 1.76990e+02 4.26942e-02 49

10 11 1.00000e+00 1.76990e+02 2.76763e-02 49

11 12 1.00000e+00 1.76990e+02 7.00188e-03 49

12 13 1.00000e+00 1.76990e+02 2.48059e-03 49

13 14 1.00000e+00 1.76990e+02 1.13110e-03 49

14 15 1.00000e+00 1.76990e+02 3.87316e-04 49

Backtracking...

15 17 4.11044e-01 1.76990e+02 9.68925e-05 49

Directional derivative below progTol

Computing Smooth Support Vector Machine Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 1.75512e-04 3.84887e+02 2.17640e+02 89

2 3 1.00000e+00 1.85515e+02 5.45835e+01 79

3 4 1.00000e+00 1.63225e+02 2.68508e+01 84

4 5 1.00000e+00 1.47825e+02 1.83658e+01 72

5 6 1.00000e+00 1.44710e+02 1.31008e+01 75

6 7 1.00000e+00 1.43129e+02 4.64824e+00 76

7 8 1.00000e+00 1.42845e+02 3.32625e+00 74

8 9 1.00000e+00 1.42638e+02 2.47059e+00 73

9 10 1.00000e+00 1.42542e+02 2.05454e+00 68

10 11 1.00000e+00 1.42527e+02 1.42891e+00 68

11 12 1.00000e+00 1.42512e+02 2.77524e-01 69

12 13 1.00000e+00 1.42511e+02 1.86336e-01 69

13 14 1.00000e+00 1.42510e+02 1.03574e-01 69

14 15 1.00000e+00 1.42510e+02 8.98808e-02 69

15 16 1.00000e+00 1.42510e+02 2.62123e-02 69

16 17 1.00000e+00 1.42510e+02 1.28982e-02 69

17 18 1.00000e+00 1.42510e+02 6.27322e-03 69

18 19 1.00000e+00 1.42510e+02 7.70964e-03 69

19 20 1.00000e+00 1.42510e+02 1.39856e-03 69

20 21 1.00000e+00 1.42510e+02 1.04135e-03 69

21 22 1.00000e+00 1.42510e+02 7.66865e-04 69

22 23 1.00000e+00 1.42510e+02 6.20470e-04 69

23 24 1.00000e+00 1.42510e+02 1.37625e-04 69

Directional derivative below progTol

Computing Huberized Support Vector Machine Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 4.09809e-04 3.09573e+02 1.37617e+02 76

Backtracking...

Backtracking...

Backtracking...

Backtracking...

Backtracking...

2 8 1.82115e-03 1.80320e+02 4.68808e+01 70

Backtracking...

3 10 1.90768e-01 1.70621e+02 3.96830e+01 69

4 11 1.00000e+00 1.44292e+02 1.89085e+01 65

5 12 1.00000e+00 1.42175e+02 2.90943e+01 56

6 13 1.00000e+00 1.36127e+02 9.61605e+00 58

7 14 1.00000e+00 1.34907e+02 4.21485e+00 61

8 15 1.00000e+00 1.34341e+02 3.61168e+00 59

9 16 1.00000e+00 1.34096e+02 3.43694e+00 61

10 17 1.00000e+00 1.33976e+02 2.31866e+00 61

11 18 1.00000e+00 1.33938e+02 9.81897e-01 60

12 19 1.00000e+00 1.33921e+02 5.69325e-01 61

13 20 1.00000e+00 1.33913e+02 4.59229e-01 61

14 21 1.00000e+00 1.33909e+02 3.31591e-01 61

15 22 1.00000e+00 1.33907e+02 2.17188e-01 61

16 23 1.00000e+00 1.33907e+02 1.01368e-01 60

17 24 1.00000e+00 1.33907e+02 4.39434e-02 60

18 25 1.00000e+00 1.33907e+02 3.06268e-02 60

19 26 1.00000e+00 1.33907e+02 3.10286e-02 60

20 27 1.00000e+00 1.33907e+02 1.04722e-02 60

21 28 1.00000e+00 1.33907e+02 8.10877e-03 60

22 29 1.00000e+00 1.33907e+02 3.55788e-03 60

23 30 1.00000e+00 1.33907e+02 1.75012e-03 60

24 31 1.00000e+00 1.33907e+02 1.35978e-03 60

Backtracking...

25 33 4.67586e-01 1.33907e+02 9.97574e-04 60

26 34 1.00000e+00 1.33907e+02 2.80376e-04 60

27 35 1.00000e+00 1.33907e+02 1.71551e-04 60

28 36 1.00000e+00 1.33907e+02 1.31008e-04 60

Progress in parameters or objective below progTol

Computing Extreme Value Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 5.40408e-04 3.13060e+02 7.98254e+01 66

2 3 1.00000e+00 2.23061e+02 2.81775e+01 57

3 4 1.00000e+00 1.99555e+02 1.32625e+01 52

4 5 1.00000e+00 1.90739e+02 6.44873e+00 50

5 6 1.00000e+00 1.89194e+02 4.24375e+00 47

6 7 1.00000e+00 1.88798e+02 1.24892e+00 46

7 8 1.00000e+00 1.88766e+02 4.46984e-01 47

8 9 1.00000e+00 1.88762e+02 1.16921e-01 47

9 10 1.00000e+00 1.88761e+02 6.50265e-02 47

10 11 1.00000e+00 1.88761e+02 3.61242e-02 47

11 12 1.00000e+00 1.88761e+02 4.43847e-03 47

12 13 1.00000e+00 1.88761e+02 1.99794e-03 47

13 14 1.00000e+00 1.88761e+02 8.04958e-04 47

14 15 1.00000e+00 1.88761e+02 8.22021e-04 47

15 16 1.00000e+00 1.88761e+02 1.02701e-04 47

Directional derivative below progTol

Number of non-zero variables for Logistic Regression: 37 out of 100

Number of non-zero variables for Probit Regression: 48 out of 100

Number of non-zero variables for Smooth Support Vector Machine: 68 out of 100 (246 support vectors)

Number of non-zero variables for Huberized Support Vector Machine: 59 out of 100 (256 support vectors)

Number of non-zero variables for Extreme Value Regression: 46 out of 100
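All five classifiers above share the same data and penalty and differ only in the loss applied to the margin m = y.*(X*w). The sketch below tabulates textbook forms of four of them next to the plain hinge: logistic, probit (-log of the standard normal CDF), a squared hinge for the smooth SVM, and a Huberized hinge with width t. These forms are assumptions about what ProbitLoss, SSVMLoss, and HuberSVMLoss compute, and the extreme-value loss is omitted. The support-vector counts printed above correspond to examples with 1 - m >= 0, where the hinge-type losses are active.

```matlab
% Loss as a function of the margin m = y.*(X*w) (standard forms, assumed here).
t = 0.5;                                         % Huberized-hinge smoothing width
logistic   = @(m) log1p(exp(-m));
probit     = @(m) -log(0.5*erfc(-m/sqrt(2)));    % -log(Phi(m))
sqHinge    = @(m) max(0, 1 - m).^2;              % "smooth SVM" as squared hinge
hinge      = @(m) max(0, 1 - m);
huberHinge = @(m) (m <= 1 - t).*(1 - m - t/2) ...
    + (m > 1 - t & m < 1).*((1 - m).^2/(2*t));   % linear, then quadratic, then 0

m = (-2:0.5:2)';
fprintf('  margin  logistic    probit   sqHinge     hinge  huberHinge\n');
disp([m, logistic(m), probit(m), sqHinge(m), hinge(m), huberHinge(m)]);
```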

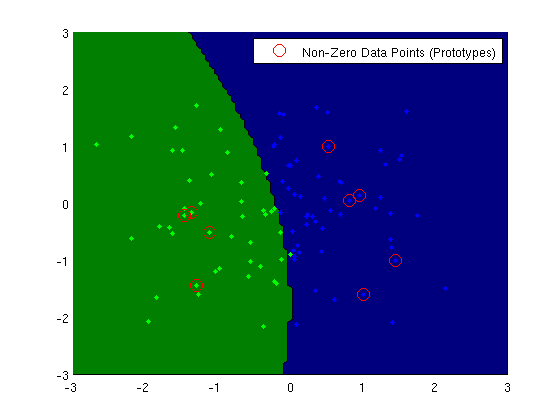

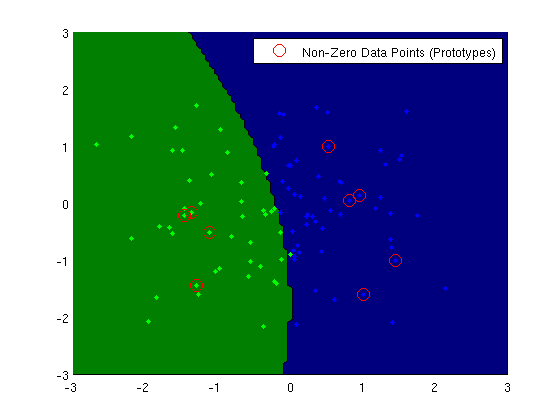

Non-Parametric Logistic Regression with Sparse Prototypes

nInstances = 100;

nVars = 2;

nExamplePoints = 4;

examplePoints = randn(nExamplePoints,nVars);

X = randn(nInstances,nVars);

y = zeros(nInstances,1);

for i = 1:nInstances

dists = sum((repmat(X(i,:),nExamplePoints,1) - examplePoints).^2,2);

[~, minInd] = min(dists);

y(i,1) = sign(mod(minInd,2)-.5);

end

XX = kernelRBF(X,X,1);

fprintf('Computing Non-Parametric Logistic Regression Coefficients...\n');

funObj = @(u)LogisticLoss(u,XX,y);

lambda = .5*ones(nInstances,1);

u = L1General2_PSSgb(funObj,zeros(nInstances,1),lambda);

fprintf('Generating Plot...\n');

increment = 100;

figure;

clf; hold on;

plot(X(y==1,1),X(y==1,2),'.','color','g');

plot(X(y==-1,1),X(y==-1,2),'.','color','b');

domainx = xlim;

domain1 = domainx(1):(domainx(2)-domainx(1))/increment:domainx(2);

domainy = ylim;

domain2 = domainy(1):(domainy(2)-domainy(1))/increment:domainy(2);

d1 = repmat(domain1',[1 length(domain1)]);

d2 = repmat(domain2,[length(domain2) 1]);

vals = sign(kernelRBF([d1(:) d2(:)],X,1)*u);

zData = reshape(vals,size(d1));

contourf(d1,d2,zData+rand(size(zData))/1000,[-1 0],'k');

colormap([0 0 .5;0 .5 0]);

plot(X(y==1,1),X(y==1,2),'.','color','g');

plot(X(y==-1,1),X(y==-1,2),'.','color','b');

xlim(domainx);

ylim(domainy);

prototypes = X(u~=0,:);

fprintf('(%d prototypes)\n',size(prototypes,1));

h=plot(prototypes(:,1),prototypes(:,2),'ro');

set(h,'MarkerSize',10);

legend(h,'Non-Zero Data Points (Prototypes)');

pause;

Computing Non-Parametric Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 5.20093e-03 6.67109e+01 3.98997e+00 95

2 3 1.00000e+00 4.58075e+01 2.30255e+00 87

3 4 1.00000e+00 3.94334e+01 1.18437e+00 95

4 5 1.00000e+00 3.76799e+01 5.71931e-01 94

5 6 1.00000e+00 3.69351e+01 4.62534e-01 81

6 7 1.00000e+00 3.65222e+01 3.60834e-01 74

7 8 1.00000e+00 3.60562e+01 2.90577e-01 60

8 9 1.00000e+00 3.56919e+01 3.29955e-01 58

9 10 1.00000e+00 3.51743e+01 5.80422e-01 39

Backtracking...

10 12 4.94147e-01 3.50166e+01 3.76194e-01 57

11 13 1.00000e+00 3.49203e+01 3.51194e-01 48

Backtracking...

12 15 4.24323e-01 3.48336e+01 5.50364e-01 43

13 16 1.00000e+00 3.47961e+01 3.82925e-01 38

Backtracking...

14 18 1.53452e-01 3.47810e+01 3.44684e-01 43

15 19 1.00000e+00 3.47560e+01 2.37006e-01 40

16 20 1.00000e+00 3.47112e+01 1.84156e-01 39

Backtracking...

17 22 3.77444e-01 3.46402e+01 2.80544e-01 36

Backtracking...

Backtracking...

18 25 1.52675e-03 3.46398e+01 1.26413e-01 34

19 26 1.00000e+00 3.46247e+01 1.34099e-01 31

20 27 1.00000e+00 3.45933e+01 8.93053e-02 32

Backtracking...

21 29 3.51940e-01 3.45605e+01 1.55374e-01 28

22 30 1.00000e+00 3.45233e+01 1.27413e-01 30

23 31 1.00000e+00 3.44337e+01 1.40339e-01 26

24 32 1.00000e+00 3.43791e+01 6.37444e-02 26

25 33 1.00000e+00 3.43549e+01 6.82287e-02 26

26 34 1.00000e+00 3.43109e+01 7.09055e-02 24

Backtracking...

27 36 4.64589e-01 3.42888e+01 7.98909e-02 24

28 37 1.00000e+00 3.42771e+01 7.27030e-02 22

Backtracking...

29 39 2.66997e-01 3.42700e+01 6.56971e-02 22

30 40 1.00000e+00 3.42575e+01 3.44141e-02 20

31 41 1.00000e+00 3.42456e+01 4.57312e-02 17

Backtracking...

32 43 1.74912e-01 3.42423e+01 1.72429e-02 18

Backtracking...

33 45 3.82482e-01 3.42399e+01 2.25954e-02 17

34 46 1.00000e+00 3.42365e+01 3.08340e-02 18

Backtracking...

35 48 1.54622e-01 3.42267e+01 4.95427e-02 17

Backtracking...

36 50 4.83985e-01 3.42238e+01 1.37321e-02 16

37 51 1.00000e+00 3.42193e+01 1.86491e-02 17

38 52 1.00000e+00 3.42045e+01 3.49788e-02 16

39 53 1.00000e+00 3.41973e+01 6.28513e-02 15

Backtracking...

40 55 1.63954e-01 3.41943e+01 4.28451e-02 17

41 56 1.00000e+00 3.41907e+01 2.04922e-02 17

42 57 1.00000e+00 3.41870e+01 2.52734e-02 19

43 58 1.00000e+00 3.41833e+01 2.02741e-02 15

Backtracking...

44 60 4.34681e-01 3.41824e+01 1.19566e-02 14

45 61 1.00000e+00 3.41817e+01 7.43078e-03 14

46 62 1.00000e+00 3.41803e+01 4.93236e-03 13

Backtracking...

47 64 5.58898e-01 3.41734e+01 9.05689e-03 12

Backtracking...

48 66 3.42166e-01 3.41725e+01 2.68754e-02 12

49 67 1.00000e+00 3.41677e+01 4.04584e-02 14

Backtracking...

50 69 9.78283e-02 3.41658e+01 1.14057e-02 15

Backtracking...

51 71 1.88171e-01 3.41655e+01 3.55383e-03 11

52 72 1.00000e+00 3.41654e+01 1.08598e-02 11

53 73 1.00000e+00 3.41653e+01 7.74173e-03 11

54 74 1.00000e+00 3.41651e+01 3.52344e-03 11

55 75 1.00000e+00 3.41645e+01 8.91291e-03 11

56 76 1.00000e+00 3.41631e+01 2.06320e-02 12

Backtracking...

57 78 2.31946e-01 3.41601e+01 1.94931e-02 12

Backtracking...

58 80 6.76889e-03 3.41599e+01 1.95370e-02 12

Backtracking...

59 82 4.16411e-03 3.41598e+01 1.95078e-02 12

Backtracking...

60 84 2.71201e-03 3.41597e+01 1.94916e-02 12

Backtracking...

61 86 1.99485e-03 3.41595e+01 1.94838e-02 12

Backtracking...

62 88 1.78948e-03 3.41594e+01 1.94696e-02 12

Backtracking...

63 90 1.47365e-03 3.41593e+01 1.94257e-02 12

Backtracking...

64 92 1.50794e-03 3.41591e+01 1.93989e-02 12

Backtracking...

65 94 1.37584e-03 3.41590e+01 1.93798e-02 12

Backtracking...

66 96 1.31671e-03 3.41589e+01 1.93498e-02 12

Backtracking...

67 98 1.24712e-03 3.41587e+01 1.93265e-02 12

Backtracking...

68 100 1.20677e-03 3.41586e+01 1.92994e-02 12

Backtracking...

69 102 1.15896e-03 3.41584e+01 1.92750e-02 12

Backtracking...

70 104 1.12421e-03 3.41583e+01 1.92489e-02 12

Backtracking...

71 106 1.08777e-03 3.41581e+01 1.92243e-02 12

Backtracking...

72 108 1.06066e-03 3.41580e+01 1.91987e-02 12

Backtracking...

73 110 1.02772e-03 3.41578e+01 1.91742e-02 12

Backtracking...

74 112 1.00146e-03 3.41577e+01 1.91494e-02 12

Backtracking...

75 114 1.00000e-03 3.41575e+01 1.91245e-02 12

Backtracking...

76 116 1.00000e-03 3.41574e+01 1.90990e-02 12

Backtracking...

77 118 1.00000e-03 3.41572e+01 1.90733e-02 12

Backtracking...

78 120 1.00000e-03 3.41570e+01 1.90470e-02 12

Backtracking...

79 122 1.00000e-03 3.41568e+01 1.90205e-02 12

Backtracking...

80 124 1.00000e-03 3.41566e+01 1.89936e-02 12

Backtracking...

81 126 1.00000e-03 3.41564e+01 1.89665e-02 12

Backtracking...

82 128 1.00000e-03 3.41561e+01 1.29535e-02 11

83 129 1.00000e+00 3.41538e+01 1.23784e-02 14

Backtracking...

Backtracking...

Backtracking...

84 133 5.50929e-05 3.41538e+01 1.25504e-02 13

85 134 1.00000e+00 3.41534e+01 2.51666e-02 10

Backtracking...

86 136 1.37384e-03 3.41533e+01 2.47699e-02 15

Backtracking...

87 138 3.51700e-03 3.41528e+01 1.76441e-02 13

Backtracking...

88 140 1.89601e-01 3.41517e+01 7.73706e-03 12

Backtracking...

89 142 3.37197e-03 3.41517e+01 9.62912e-03 12

Backtracking...

90 144 2.97847e-01 3.41515e+01 1.64100e-02 11

91 145 1.00000e+00 3.41511e+01 6.18655e-03 12

Backtracking...

Backtracking...

92 148 1.93642e-02 3.41511e+01 5.83796e-03 10

Backtracking...

93 150 8.28119e-02 3.41511e+01 7.42889e-03 9

94 151 1.00000e+00 3.41509e+01 1.07153e-02 9

95 152 1.00000e+00 3.41505e+01 7.41690e-03 11

Backtracking...

Backtracking...

96 155 2.93548e-02 3.41505e+01 7.50382e-03 11

Backtracking...

Backtracking...

97 158 8.68140e-03 3.41504e+01 9.12714e-03 10

Backtracking...

98 160 1.40617e-01 3.41504e+01 7.55935e-03 11

99 161 1.00000e+00 3.41499e+01 1.03130e-02 12

Backtracking...

Backtracking...

Backtracking...

100 165 8.82333e-03 3.41499e+01 1.01387e-02 10

Backtracking...

101 167 1.00000e-03 3.41489e+01 6.75874e-03 11

Backtracking...

Backtracking...

102 170 2.65946e-04 3.41489e+01 7.65777e-03 10

103 171 1.00000e+00 3.41486e+01 6.94239e-03 12

Backtracking...

104 173 1.00000e-03 3.41484e+01 4.77170e-03 12

105 174 1.00000e+00 3.41479e+01 4.86944e-03 12

106 175 1.00000e+00 3.41476e+01 3.78948e-03 12

107 176 1.00000e+00 3.41462e+01 6.57536e-03 12

Backtracking...

108 178 5.87849e-01 3.41448e+01 1.01789e-02 11

Backtracking...

109 180 2.22710e-02 3.41447e+01 1.02974e-02 11

Backtracking...

110 182 1.75794e-02 3.41444e+01 1.50959e-02 10

111 183 1.00000e+00 3.41438e+01 1.17165e-02 13

Backtracking...

Backtracking...

112 186 1.00000e-06 3.41438e+01 9.29967e-03 13

Backtracking...

113 188 1.00000e-03 3.41437e+01 8.84492e-03 13

Backtracking...

114 190 1.89882e-01 3.41437e+01 1.61095e-02 12

Backtracking...

Backtracking...

115 193 1.00000e-06 3.41434e+01 1.21053e-02 13

Backtracking...

Backtracking...

116 196 1.92639e-04 3.41434e+01 7.50063e-03 11

Backtracking...

117 198 4.55396e-02 3.41434e+01 5.90399e-03 12

Backtracking...

Backtracking...

118 201 2.18368e-03 3.41434e+01 5.12003e-03 11

Backtracking...

119 203 3.39055e-01 3.41433e+01 6.91099e-03 11

120 204 1.00000e+00 3.41432e+01 5.68694e-03 11

121 205 1.00000e+00 3.41429e+01 4.05702e-03 11

122 206 1.00000e+00 3.41425e+01 2.49618e-03 10

Backtracking...

Backtracking...

Backtracking...

123 210 7.54404e-06 3.41425e+01 3.39387e-03 11

Backtracking...

124 212 8.71145e-02 3.41424e+01 3.24684e-03 10

Backtracking...

125 214 4.12030e-03 3.41424e+01 3.01374e-03 10

126 215 1.00000e+00 3.41424e+01 4.33100e-03 10

Backtracking...

127 217 3.99388e-02 3.41424e+01 4.80645e-03 10

Backtracking...

Backtracking...

128 220 1.37085e-03 3.41424e+01 4.14702e-03 10

Backtracking...

129 222 7.43227e-02 3.41423e+01 2.64810e-03 9

Backtracking...

130 224 3.96939e-01 3.41422e+01 1.36154e-03 9

131 225 1.00000e+00 3.41422e+01 2.45462e-03 10

Backtracking...

132 227 6.40876e-03 3.41422e+01 1.29458e-03 10

133 228 1.00000e+00 3.41422e+01 6.06514e-04 9

134 229 1.00000e+00 3.41421e+01 1.68009e-03 10

135 230 1.00000e+00 3.41420e+01 2.64087e-03 10

Backtracking...

136 232 4.93517e-01 3.41419e+01 8.76492e-03 9

Backtracking...

137 234 2.96325e-01 3.41418e+01 4.52922e-03 12

Backtracking...

Backtracking...

138 237 7.01401e-03 3.41418e+01 2.71449e-03 11

Backtracking...

Backtracking...

139 240 1.76529e-04 3.41418e+01 3.18220e-03 10

140 241 1.00000e+00 3.41417e+01 1.71625e-03 10

Backtracking...

141 243 3.07791e-01 3.41417e+01 2.92124e-03 9

Backtracking...

142 245 1.41743e-01 3.41416e+01 2.41884e-03 9

Backtracking...

143 247 1.75011e-03 3.41416e+01 2.41427e-03 9

Backtracking...

144 249 1.09712e-03 3.41416e+01 2.40656e-03 9

Backtracking...

145 251 1.02424e-03 3.41416e+01 2.40346e-03 9

Backtracking...

146 253 1.00000e-03 3.41416e+01 2.39969e-03 9

Backtracking...

147 255 1.00000e-03 3.41416e+01 2.39668e-03 9

Backtracking...

148 257 1.00000e-03 3.41416e+01 2.39354e-03 9

Backtracking...

149 259 1.00000e-03 3.41416e+01 2.39055e-03 9

Backtracking...

150 261 1.00000e-03 3.41416e+01 2.38754e-03 9

Backtracking...

151 263 1.00000e-03 3.41416e+01 2.38455e-03 9

Backtracking...

152 265 1.00000e-03 3.41416e+01 2.38156e-03 9

Backtracking...

153 267 1.00000e-03 3.41416e+01 2.37858e-03 9

Backtracking...

154 269 1.00000e-03 3.41416e+01 2.37559e-03 9

Backtracking...

155 271 1.00000e-03 3.41416e+01 2.37260e-03 9

Backtracking...

156 273 1.00000e-03 3.41416e+01 2.36961e-03 9

Backtracking...

157 275 1.00000e-03 3.41416e+01 2.36663e-03 9

Backtracking...

158 277 1.00000e-03 3.41416e+01 2.36364e-03 9

Backtracking...

159 279 1.00000e-03 3.41416e+01 2.36065e-03 9

Backtracking...

160 281 1.00000e-03 3.41416e+01 2.35765e-03 9

Backtracking...

161 283 1.00000e-03 3.41416e+01 2.35466e-03 9

Backtracking...

162 285 1.00000e-03 3.41416e+01 2.35167e-03 9

Backtracking...

163 287 1.00000e-03 3.41416e+01 2.34867e-03 9

Backtracking...

164 289 1.00000e-03 3.41416e+01 2.34568e-03 9

Backtracking...

165 291 1.00000e-03 3.41416e+01 2.34268e-03 9

Backtracking...

166 293 1.00000e-03 3.41416e+01 2.33969e-03 9

Backtracking...

167 295 1.00000e-03 3.41416e+01 2.33669e-03 9

Backtracking...

168 297 1.00000e-03 3.41416e+01 2.33369e-03 9

Backtracking...

169 299 1.00000e-03 3.41416e+01 2.33069e-03 9

Backtracking...

Backtracking...

170 302 6.00000e-04 3.41416e+01 2.32889e-03 9

Backtracking...

Backtracking...

171 305 7.84761e-05 3.41416e+01 2.32866e-03 9

Backtracking...

Backtracking...

172 308 6.65533e-05 3.41416e+01 2.32846e-03 9

Backtracking...

Backtracking...

Backtracking...

173 312 8.75293e-06 3.41416e+01 2.32843e-03 9

Backtracking...

Backtracking...

Backtracking...

174 316 5.64212e-06 3.41416e+01 2.32841e-03 9

Backtracking...

Backtracking...

Backtracking...

175 320 4.52019e-06 3.41416e+01 2.32840e-03 9

Backtracking...

Backtracking...

Backtracking...

176 324 3.87492e-06 3.41416e+01 2.32839e-03 9

Backtracking...

Backtracking...

Backtracking...

Backtracking...

Backtracking...

177 330 9.55608e-08 3.41416e+01 2.32839e-03 9

Progress in parameters or objective below progTol

Generating Plot...

(9 prototypes)

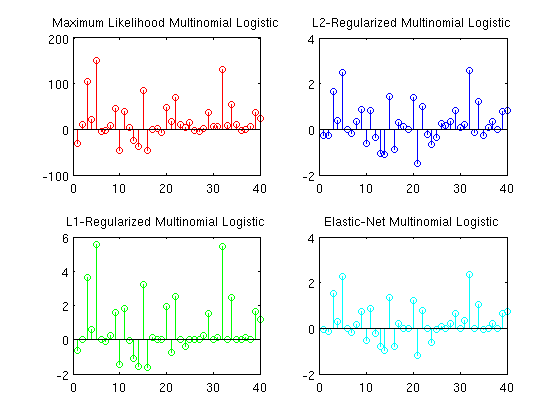

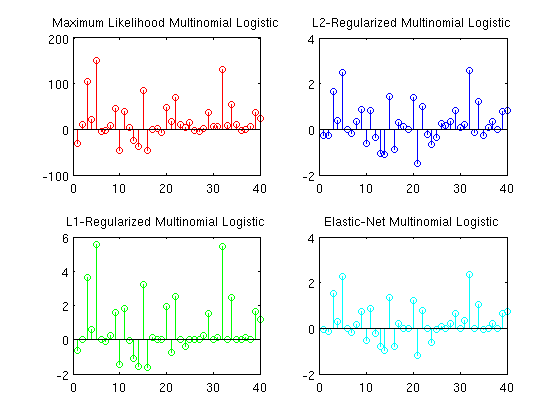

Multinomial Logistic Regression

nInstances = 200;

nVars = 10;

nClasses = 5;

X = [ones(nInstances,1) randn(nInstances,nVars-1)];

w = randn(nVars,nClasses-1).*(rand(nVars,nClasses-1)>.5);

[~, y] = max(X*[w zeros(nVars,1)],[],2);

w_init = zeros(nVars,nClasses-1);

w_init = w_init(:);

funObj = @(w)SoftmaxLoss2(w,X,y,nClasses);

lambda = 1*ones(nVars,nClasses-1);

lambda(1,:) = 0;

lambda = lambda(:);

fprintf('\nComputing Maximum Likelihood Multinomial Logistic Regression Coefficients\n');

mfOptions.Method = 'newton';

wMLR_ML = minFunc(funObj,w_init,mfOptions);

wMLR_ML = reshape(wMLR_ML,nVars,nClasses-1);

fprintf('\nComputing L2-Regularized Multinomial Logistic Regression Coefficients...\n');

funObjL2 = @(w)penalizedL2(w,funObj,lambda);

wMLR_L2 = minFunc(funObjL2,w_init,mfOptions);

wMLR_L2 = reshape(wMLR_L2,nVars,nClasses-1);

fprintf('\nComputing L1-Regularized Multinomial Logistic Regression Coefficients...\n');

wMLR_L1 = L1General2_PSSgb(funObj,w_init,lambda);

wMLR_L1 = reshape(wMLR_L1,nVars,nClasses-1);

fprintf('\nComputing Elastic-Net Multinomial Logistic Regression Coefficients...\n');

wMLR_L1L2 = L1General2_PSSgb(funObjL2,w_init,lambda);

wMLR_L1L2 = reshape(wMLR_L1L2,nVars,nClasses-1);

fprintf('Number of Features Selected by Maximum Likelihood Multinomial Logistic classifier: %d (out of %d)\n',nnz(wMLR_ML(2:end,:)),(nVars-1)*(nClasses-1));

fprintf('Number of Features Selected by L2-regularized Multinomial Logistic classifier: %d (out of %d)\n',nnz(wMLR_L2(2:end,:)),(nVars-1)*(nClasses-1));

fprintf('Number of Features Selected by L1-regularized Multinomial Logistic classifier: %d (out of %d)\n',nnz(wMLR_L1(2:end,:)),(nVars-1)*(nClasses-1));

fprintf('Number of Features Selected by Elastic-Net Multinomial Logistic classifier: %d (out of %d)\n',nnz(wMLR_L1L2(2:end,:)),(nVars-1)*(nClasses-1));

figure;

clf;hold on;

subplot(2,2,1);

stem(wMLR_ML(:),'r');

title('Maximum Likelihood Multinomial Logistic');

subplot(2,2,2);

stem(wMLR_L2(:),'b');

title('L2-Regularized Multinomial Logistic');

subplot(2,2,3);

stem(wMLR_L1(:),'g');

title('L1-Regularized Multinomial Logistic');

subplot(2,2,4);

stem(wMLR_L1L2(:),'c');

title('Elastic-Net Multinomial Logistic');

[~, yhat] = max(X*[wMLR_L1 zeros(nVars,1)],[],2);

fprintf('Classification error rate on training data for L1-regularized Multinomial Logistic: %.2f\n',sum(y ~= yhat)/length(y));

pause;
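`SoftmaxLoss2` is called above but not listed in this document. A minimal sketch of a multinomial negative log-likelihood and its gradient, assuming the same convention as the prediction line (the last class's weights are fixed at zero, so only `nVars*(nClasses-1)` parameters are free), might look like this. It is standalone, uses made-up toy data, and overwrites `X`, `y`, and `w` if pasted into the demo script.

```matlab
% Sketch only: toy data, not the demo's data; not the library's SoftmaxLoss2.
% First column of X is the bias feature, as in the demo.
X = [ones(6,1) [0.5 -1.0; 1.2 0.3; -0.7 0.8; 0.1 -0.4; 2.0 1.1; -1.5 -0.2]];
y = [1; 2; 3; 1; 2; 3];
[nInstances, nVars] = size(X);
nClasses = 3;
w = [0.2; 0.5; -0.4; -0.1; 0.3; 0.7];   % vectorized nVars x (nClasses-1) weights

% Scores for every class; the last class's weights are clamped to zero.
Z = X*[reshape(w, nVars, nClasses-1) zeros(nVars,1)];

% Numerically stable log-sum-exp over classes.
Zmax = max(Z, [], 2);
logSumExp = Zmax + log(sum(exp(bsxfun(@minus, Z, Zmax)), 2));

% Negative log-likelihood: logSumExp minus the true-class score, summed over instances.
trueIdx = sub2ind(size(Z), (1:nInstances)', y);
nll = sum(logSumExp - Z(trueIdx));

% Gradient X'*(P - Y), keeping only the nClasses-1 free columns.
P = exp(bsxfun(@minus, Z, logSumExp));  % class probabilities (rows sum to 1)
Y = zeros(nInstances, nClasses);
Y(trueIdx) = 1;
G = X'*(P - Y);
g = reshape(G(:, 1:nClasses-1), [], 1);
```

The vectorized `w` is laid out column by column, which is consistent with the `reshape(...,nVars,nClasses-1)` calls the demo applies to its solutions.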

Computing Maximum Likelihood Multinomial Logistic Regression Coefficients

Iteration FunEvals Step Length Function Val Opt Cond

1 2 1.00000e+00 1.04179e+02 1.17219e+01

2 3 1.00000e+00 6.22764e+01 5.13364e+00

3 4 1.00000e+00 3.91936e+01 2.21929e+00

4 5 1.00000e+00 2.42730e+01 1.13250e+00

5 6 1.00000e+00 1.46139e+01 6.12513e-01

6 7 1.00000e+00 7.94355e+00 2.90802e-01

7 8 1.00000e+00 3.59489e+00 1.22797e-01

8 9 1.00000e+00 1.38213e+00 4.79815e-02

9 10 1.00000e+00 5.10257e-01 1.80193e-02

10 11 1.00000e+00 1.88486e-01 6.82512e-03

11 12 1.00000e+00 6.96973e-02 2.55603e-03

12 13 1.00000e+00 2.57607e-02 9.51627e-04

13 14 1.00000e+00 9.51241e-03 3.52916e-04

14 15 1.00000e+00 3.50930e-03 1.30531e-04

15 16 1.00000e+00 1.29366e-03 4.81926e-05

16 17 1.00000e+00 4.76609e-04 1.77716e-05

17 18 1.00000e+00 1.75517e-04 6.54829e-06

Optimality Condition below optTol

Computing L2-Regularized Multinomial Logistic Regression Coefficients...

Iteration FunEvals Step Length Function Val Opt Cond

1 2 1.00000e+00 1.20545e+02 1.02673e+01

2 3 1.00000e+00 9.92603e+01 2.54050e+00

3 4 1.00000e+00 9.63988e+01 4.25870e-01

4 5 1.00000e+00 9.63028e+01 2.67172e-02

5 6 1.00000e+00 9.63026e+01 1.06566e-04

6 7 1.00000e+00 9.63026e+01 1.55572e-09

Optimality Condition below optTol

Computing L1-Regularized Multinomial Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 1.76103e-03 2.94845e+02 5.21182e+01 37

2 3 1.00000e+00 1.35657e+02 1.26400e+01 35

3 4 1.00000e+00 1.16746e+02 7.63398e+00 37

4 5 1.00000e+00 9.17691e+01 6.58194e+00 35

5 6 1.00000e+00 8.30630e+01 7.05694e+00 35

6 7 1.00000e+00 7.90554e+01 3.16541e+00 36

7 8 1.00000e+00 7.73113e+01 1.38246e+00 32

8 9 1.00000e+00 7.60217e+01 1.01551e+00 32

9 10 1.00000e+00 7.53268e+01 1.12175e+00 30

10 11 1.00000e+00 7.49766e+01 1.13828e+00 30

11 12 1.00000e+00 7.47120e+01 4.41812e-01 29

12 13 1.00000e+00 7.45730e+01 4.17875e-01 29

13 14 1.00000e+00 7.44940e+01 3.95614e-01 29

14 15 1.00000e+00 7.44419e+01 3.31846e-01 29

15 16 1.00000e+00 7.44120e+01 1.84727e-01 28

16 17 1.00000e+00 7.44051e+01 8.31556e-02 29

17 18 1.00000e+00 7.44018e+01 4.36642e-02 27

18 19 1.00000e+00 7.43996e+01 3.15859e-02 27

19 20 1.00000e+00 7.43993e+01 3.41207e-02 27

20 21 1.00000e+00 7.43991e+01 1.57060e-02 27

21 22 1.00000e+00 7.43990e+01 1.24550e-02 27

22 23 1.00000e+00 7.43989e+01 8.23950e-03 27

23 24 1.00000e+00 7.43989e+01 1.04371e-02 27

24 25 1.00000e+00 7.43989e+01 2.68026e-03 27

25 26 1.00000e+00 7.43989e+01 1.90409e-03 27

26 27 1.00000e+00 7.43989e+01 1.70540e-03 27

27 28 1.00000e+00 7.43988e+01 9.66254e-04 27

28 29 1.00000e+00 7.43988e+01 1.11360e-03 27

29 30 1.00000e+00 7.43988e+01 2.63725e-04 27

30 31 1.00000e+00 7.43988e+01 1.19382e-04 27

31 32 1.00000e+00 7.43988e+01 3.08955e-05 27

32 33 1.00000e+00 7.43988e+01 2.96448e-05 27

Progress in parameters or objective below progTol

Computing Elastic-Net Multinomial Logistic Regression Coefficients...

Iter fEvals stepLen fVal optCond nnz

1 2 1.76103e-03 2.94888e+02 5.19179e+01 37

2 3 1.00000e+00 1.44710e+02 1.13796e+01 35

3 4 1.00000e+00 1.31208e+02 6.10043e+00 37

4 5 1.00000e+00 1.20146e+02 4.62499e+00 35

5 6 1.00000e+00 1.18592e+02 4.70310e+00 38

6 7 1.00000e+00 1.17639e+02 8.48525e-01 36

7 8 1.00000e+00 1.17521e+02 6.80493e-01 37

8 9 1.00000e+00 1.17378e+02 5.87050e-01 33

9 10 1.00000e+00 1.17330e+02 1.68602e-01 31

10 11 1.00000e+00 1.17324e+02 1.67430e-01 32

11 12 1.00000e+00 1.17321e+02 7.02801e-02 32

12 13 1.00000e+00 1.17320e+02 5.55746e-02 32

13 14 1.00000e+00 1.17320e+02 3.14440e-02 32

14 15 1.00000e+00 1.17320e+02 3.21882e-02 32

15 16 1.00000e+00 1.17319e+02 1.95754e-02 32

Backtracking...

16 18 3.59348e-01 1.17319e+02 1.42054e-02 32

17 19 1.00000e+00 1.17319e+02 9.01286e-03 32

18 20 1.00000e+00 1.17319e+02 4.78039e-03 32

19 21 1.00000e+00 1.17319e+02 3.84352e-03 32

Backtracking...

20 23 4.22797e-01 1.17319e+02 3.22387e-03 32

21 24 1.00000e+00 1.17319e+02 7.25821e-04 32

22 25 1.00000e+00 1.17319e+02 4.46354e-04 32

23 26 1.00000e+00 1.17319e+02 2.49745e-04 32

Backtracking...

24 28 4.83418e-01 1.17319e+02 1.18676e-04 32

25 29 1.00000e+00 1.17319e+02 3.63166e-05 32

Directional derivative below progTol

Number of Features Selected by Maximum Likelihood Multinomial Logistic classifier: 36 (out of 36)

Number of Features Selected by L2-regularized Multinomial Logistic classifier: 36 (out of 36)

Number of Features Selected by L1-regularized Multinomial Logistic classifier: 23 (out of 36)

Number of Features Selected by Elastic-Net Multinomial Logistic classifier: 28 (out of 36)

Classification error rate on training data for L1-regularized Multinomial Logistic: 0.03

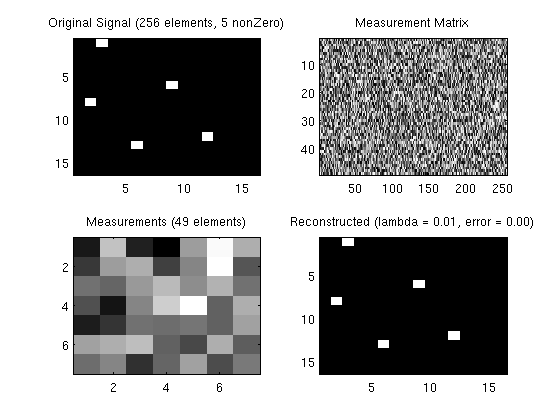

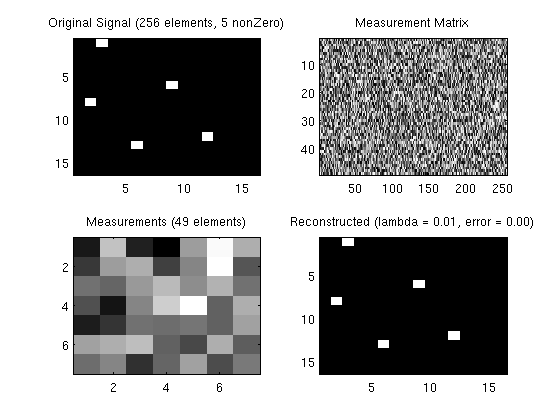
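The feature counts above (36 of 36 for maximum likelihood and L2, 23 for L1, 28 for elastic net) follow from the shape of the penalties: a squared penalty shrinks coefficients but does not set them to zero, while an absolute-value penalty can. A separable one-dimensional toy problem, minimizing `(w - a)^2 + l2*w^2 + l1*abs(w)` for each entry, shows this in closed form. The values of `a`, `l1`, and `l2` are made up, and this is not the multinomial objective itself.

```matlab
% Toy separable problem (illustrative values only):
%   minimize (w - a)^2 + l2*w^2 + l1*abs(w), independently for each entry of a.
a  = [-2.0 -0.4 -0.1 0.05 0.3 1.5];   % unregularized minimizers
l1 = 1.0;                              % L1 strength
l2 = 1.0;                              % L2 strength
soft = @(z, t) sign(z).*max(abs(z) - t, 0);   % soft-thresholding

wL2 = a ./ (1 + l2);               % L2 only: every entry shrinks, none becomes zero
wL1 = soft(a, l1/2);               % L1 only: entries with |a| <= l1/2 become exactly zero
wEN = soft(a, l1/2) ./ (1 + l2);   % both: threshold, then shrink

fprintf('nonzeros  L2: %d  L1: %d  elastic net: %d\n', nnz(wL2), nnz(wL1), nnz(wEN));
```

This prints 6, 2, and 2 nonzeros. In this separable toy problem the elastic-net solution has the same support as the L1 solution; in the coupled multinomial problem above the two counts differ (28 versus 23).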

Compressed Sensing

figure;

nVars = 256;

nNonZero = 5;

x = zeros(nVars,1);

x(randperm(nVars,nNonZero)) = 1; % distinct positions, so exactly nNonZero entries are nonzero

subplot(2,2,1);

imagesc(reshape(x,[16 16]));

colormap gray

title(sprintf('Original Signal (%d elements, %d nonZero)',nVars,nNonZero));

nMeasurements = 49;

phi = rand(nMeasurements,nVars);

subplot(2,2,2);

imagesc(phi);

colormap gray

title('Measurement Matrix');

y = phi*x;

subplot(2,2,3);

imagesc(reshape(y,[7 7]));

colormap gray

title(sprintf('Measurements (%d elements)',nMeasurements));

funObj = @(f)SquaredError(f,phi,y);

f = zeros(nVars,1);

options = [];

for lambda = 10.^[1:-1:-2]

f = L1General2_PSSgb(funObj,f,lambda*ones(nVars,1),options);

residual = norm(phi*f-y)

reconstructionError = norm(f-x)

subplot(2,2,4);

imagesc(reshape(f,[16 16]));

colormap gray

title(sprintf('Reconstructed (lambda = %.2f, error = %.2f)',lambda,reconstructionError));

pause;

end
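Each pass of the loop solves `min_f ||phi*f - y||^2 + lambda*||f||_1` (assuming `SquaredError` returns the sum of squared residuals), starting from the previous solution. The sketch below solves the same kind of problem with ISTA (iterative soft-thresholding), a simpler proximal-gradient method swapped in purely for illustration; the demo itself uses `L1General2_PSSgb`. It is standalone, draws its own random signal, and uses a fixed iteration count that is an arbitrary choice.

```matlab
% Sketch: ISTA for min_f ||phi*f - y||^2 + lambda*||f||_1 (illustrative solver, not L1General).
nVars = 256; nNonZero = 5; nMeasurements = 49;
x = zeros(nVars,1);
x(randperm(nVars, nNonZero)) = 1;          % exactly nNonZero distinct spikes
phi = rand(nMeasurements, nVars);
y = phi*x;

lambda = 1;
L = 2*norm(phi)^2;                          % Lipschitz constant of the gradient of ||phi*f - y||^2
soft = @(z, t) sign(z).*max(abs(z) - t, 0); % proximal operator of t*||.||_1
objective = @(f) sum((phi*f - y).^2) + lambda*sum(abs(f));

f = zeros(nVars,1);
nIter = 500;
history = zeros(nIter,1);
for k = 1:nIter
    grad = 2*phi'*(phi*f - y);              % gradient of the smooth part
    f = soft(f - grad/L, lambda/L);         % gradient step, then shrinkage
    history(k) = objective(f);
end
fprintf('objective %.4f, nnz %d, reconstruction error %.4f\n', ...
        history(end), nnz(f), norm(f - x));
```

With step size `1/L` the objective never increases from one iteration to the next, which makes ISTA easy to reason about, but it typically needs many more iterations than a quasi-Newton method such as the one used in the demo.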

Iter fEvals stepLen fVal optCond nnz

1 2 3.35502e-05 2.49414e+02 1.21044e+02 256

2 3 1.00000e+00 7.50875e+01 1.22691e+01 256

3 4 1.00000e+00 7.45935e+01 1.20244e+01 256

Backtracking...

4 6 5.58838e-02 7.18038e+01 1.13193e+01 256

Backtracking...

5 8 3.90337e-02 6.98779e+01 1.07825e+01 254

Backtracking...

6 10 3.18286e-02 6.85003e+01 9.87135e+00 248

Backtracking...

7 12 3.66454e-02 6.72506e+01 9.01928e+00 243

Backtracking...

8 14 3.35227e-02 6.62559e+01 8.05011e+00 234

Backtracking...

9 16 5.31147e-02 6.52563e+01 7.07645e+00 224

Backtracking...

10 18 9.72282e-02 6.42318e+01 8.19072e+00 215

Backtracking...

11 20 1.44341e-01 6.33824e+01 9.56689e+00 200

Backtracking...

12 22 3.36791e-01 6.28361e+01 1.21604e+01 181

13 23 1.00000e+00 6.18708e+01 1.13793e+01 171

Backtracking...

14 25 2.96432e-02 6.13845e+01 1.14261e+01 165

Backtracking...

15 27 4.21529e-02 6.08119e+01 1.13936e+01 156

Backtracking...

16 29 4.63575e-02 6.02913e+01 1.13218e+01 146

Backtracking...

17 31 6.34011e-02 5.96866e+01 1.07890e+01 137

Backtracking...

18 33 7.40129e-02 5.88998e+01 1.01510e+01 128

Backtracking...

19 35 1.75350e-01 5.77169e+01 1.01594e+01 112

20 36 1.00000e+00 5.58082e+01 1.38145e+01 64

Backtracking...

21 38 4.43738e-01 5.40059e+01 1.16370e+01 44

Backtracking...

22 40 3.46341e-01 5.31614e+01 5.14224e+00 253

Backtracking...

23 42 1.82283e-01 5.29936e+01 4.57903e+00 200

Backtracking...

24 44 3.57707e-01 5.28811e+01 5.57518e+00 147

25 45 1.00000e+00 5.28628e+01 7.59500e+00 90

26 46 1.00000e+00 5.23126e+01 2.68277e+00 70

27 47 1.00000e+00 5.21133e+01 3.69019e+00 53

28 48 1.00000e+00 5.20151e+01 4.82135e+00 41

29 49 1.00000e+00 5.17088e+01 3.80668e+00 37

Backtracking...

30 51 2.63284e-01 5.13077e+01 4.90943e+00 32

31 52 1.00000e+00 5.08179e+01 5.47259e+00 25

32 53 1.00000e+00 5.02276e+01 4.54655e+00 21

Backtracking...

33 55 2.74455e-02 5.00975e+01 4.24341e+00 166

Backtracking...

34 57 4.68518e-02 4.99615e+01 3.75771e+00 83

Backtracking...

35 59 2.49336e-01 4.95742e+01 2.71343e+00 98

Backtracking...

36 61 5.12947e-02 4.95548e+01 3.20811e+00 47

Backtracking...

37 63 4.22201e-03 4.95288e+01 3.15771e+00 46

Backtracking...

38 65 2.25267e-01 4.93976e+01 3.95104e+00 38

39 66 1.00000e+00 4.93048e+01 6.97752e+00 20

40 67 1.00000e+00 4.90130e+01 4.07106e+00 19

Backtracking...

41 69 2.96373e-01 4.89290e+01 2.51626e+00 155

Backtracking...

42 71 1.77165e-02 4.89241e+01 2.65326e+00 144

Backtracking...

43 73 8.54997e-02 4.89215e+01 2.88308e+00 132

Backtracking...

Backtracking...

Backtracking...

Backtracking...

Backtracking...

44 79 5.88547e-04 4.89215e+01 2.88672e+00 131

Backtracking...

45 81 4.24407e-01 4.88942e+01 3.00351e+00 81

Backtracking...

46 83 2.89523e-01 4.88677e+01 3.54518e+00 68

Backtracking...

47 85 1.96644e-01 4.88420e+01 3.66921e+00 54

Backtracking...

48 87 3.08337e-01 4.88323e+01 3.99057e+00 46

49 88 1.00000e+00 4.86986e+01 3.53232e+00 26

Backtracking...

50 90 5.60421e-01 4.85536e+01 3.97612e+00 21

Backtracking...

51 92 1.69999e-01 4.85064e+01 2.92795e+00 20

52 93 1.00000e+00 4.84441e+01 2.74973e+00 20

Backtracking...

53 95 2.33193e-01 4.82388e+01 3.14110e+00 22

Backtracking...

54 97 5.33995e-01 4.81137e+01 3.96201e+00 16

Backtracking...

55 99 1.10694e-02 4.80970e+01 3.84399e+00 15

Backtracking...

56 101 3.54207e-02 4.80793e+01 4.02648e+00 15

Backtracking...

57 103 2.79191e-01 4.80710e+01 3.57274e+00 13

58 104 1.00000e+00 4.80036e+01 1.39523e+00 13

59 105 1.00000e+00 4.79970e+01 2.39056e+00 31

Backtracking...

60 107 2.61682e-01 4.79932e+01 2.41536e+00 26

Backtracking...

61 109 3.66070e-01 4.79906e+01 2.22905e+00 24

62 110 1.00000e+00 4.79802e+01 2.18098e+00 22

63 111 1.00000e+00 4.79649e+01 2.52345e+00 17

64 112 1.00000e+00 4.79286e+01 2.00820e+00 15

Backtracking...

65 114 1.00000e-03 4.79280e+01 2.00336e+00 15

Backtracking...

66 116 4.84845e-01 4.78797e+01 1.79993e+00 12

Backtracking...

Backtracking...

67 119 8.01789e-02 4.78755e+01 1.47826e+00 12

68 120 1.00000e+00 4.78534e+01 1.70749e+00 13

Backtracking...

69 122 2.16452e-01 4.78455e+01 1.38289e+00 12

70 123 1.00000e+00 4.78335e+01 2.19775e+00 18

Backtracking...

71 125 1.21312e-02 4.78286e+01 2.18760e+00 17

72 126 1.00000e+00 4.78151e+01 1.58356e+00 16

Backtracking...

73 128 4.16636e-01 4.78139e+01 2.62897e-01 17

74 129 1.00000e+00 4.78139e+01 4.58304e-01 15

75 130 1.00000e+00 4.78085e+01 2.25101e-01 15

76 131 1.00000e+00 4.78077e+01 2.89047e-01 17

77 132 1.00000e+00 4.78050e+01 1.02011e-01 16

78 133 1.00000e+00 4.78032e+01 1.17981e-01 16

Backtracking...

79 135 3.88570e-01 4.78023e+01 1.10667e-01 15

Backtracking...

80 137 3.96675e-01 4.78014e+01 2.01171e-01 15

Backtracking...

81 139 4.24047e-01 4.78003e+01 6.48385e-02 15

82 140 1.00000e+00 4.77999e+01 4.88097e-02 15

83 141 1.00000e+00 4.77998e+01 6.40268e-02 15

84 142 1.00000e+00 4.77991e+01 4.83560e-02 15

Backtracking...

85 144 3.71882e-01 4.77990e+01 3.98244e-02 15

86 145 1.00000e+00 4.77988e+01 2.93461e-02 15

87 146 1.00000e+00 4.77987e+01 2.24206e-02 15

88 147 1.00000e+00 4.77987e+01 1.82457e-02 15

89 148 1.00000e+00 4.77987e+01 1.31130e-02 15

90 149 1.00000e+00 4.77987e+01 1.45262e-02 15

91 150 1.00000e+00 4.77987e+01 1.41998e-02 15

92 151 1.00000e+00 4.77987e+01 1.17305e-02 15

93 152 1.00000e+00 4.77987e+01 7.98338e-03 15

94 153 1.00000e+00 4.77987e+01 5.00422e-03 15

95 154 1.00000e+00 4.77987e+01 3.40224e-03 15

96 155 1.00000e+00 4.77987e+01 1.98160e-03 15

97 156 1.00000e+00 4.77987e+01 1.05096e-03 15

98 157 1.00000e+00 4.77987e+01 5.64512e-04 15

99 158 1.00000e+00 4.77987e+01 3.50266e-04 15

100 159 1.00000e+00 4.77987e+01 1.75665e-04 15

101 160 1.00000e+00 4.77987e+01 7.10882e-05 15

102 161 1.00000e+00 4.77987e+01 1.34542e-05 15

Directional derivative below progTol

residual =

1.4837

reconstructionError =

0.5158

Iter fEvals stepLen fVal optCond nnz

Backtracking...

1 3 1.60236e-04 5.68849e+00 2.34637e+00 256

2 4 1.00000e+00 5.67463e+00 2.32625e+00 256

Backtracking...

3 6 2.25231e-02 5.63884e+00 2.27424e+00 256

Backtracking...

4 8 1.82919e-02 5.61157e+00 2.19021e+00 243

Backtracking...

5 10 2.50015e-02 5.59090e+00 2.02519e+00 230

Backtracking...

6 12 6.56450e-02 5.56967e+00 1.79559e+00 210

Backtracking...

7 14 1.50409e-01 5.55205e+00 1.43738e+00 185

8 15 1.00000e+00 5.53844e+00 2.08636e+00 155

9 16 1.00000e+00 5.51437e+00 1.35597e+00 213

10 17 1.00000e+00 5.49252e+00 1.41764e+00 176

Backtracking...

11 19 3.68872e-02 5.48114e+00 1.33078e+00 161

Backtracking...

12 21 1.06121e-01 5.46949e+00 1.11425e+00 147

Backtracking...

13 23 2.31599e-01 5.45976e+00 1.30507e+00 128

14 24 1.00000e+00 5.44864e+00 1.69869e+00 108

15 25 1.00000e+00 5.42534e+00 1.81223e+00 104

Backtracking...

16 27 2.64930e-01 5.40175e+00 1.95337e+00 103

17 28 1.00000e+00 5.36302e+00 1.93737e+00 98

18 29 1.00000e+00 5.30890e+00 1.12735e+00 84

Backtracking...

19 31 1.48389e-01 5.29321e+00 9.68236e-01 73

20 32 1.00000e+00 5.26064e+00 9.60619e-01 57

Backtracking...

Backtracking...

21 35 1.84666e-02 5.25926e+00 1.51982e+00 55

22 36 1.00000e+00 5.23156e+00 1.45435e+00 46

Backtracking...

Backtracking...

23 39 6.37792e-02 5.19768e+00 2.08783e+00 27

Backtracking...

24 41 2.63467e-01 5.19192e+00 1.53348e+00 230

25 42 1.00000e+00 5.18808e+00 1.36498e+00 171

26 43 1.00000e+00 5.18357e+00 1.25639e+00 126

27 44 1.00000e+00 5.18325e+00 1.53692e+00 92

28 45 1.00000e+00 5.16997e+00 1.00934e+00 87

29 46 1.00000e+00 5.16124e+00 1.25961e+00 72

30 47 1.00000e+00 5.13198e+00 1.09025e+00 37

Backtracking...

31 49 4.13547e-02 5.13057e+00 1.26071e+00 35

32 50 1.00000e+00 5.11300e+00 1.17742e+00 29

Backtracking...

33 52 2.80916e-01 5.07539e+00 1.84497e+00 21

Backtracking...

34 54 5.23250e-02 5.07332e+00 1.82016e+00 25

Backtracking...

35 56 1.46962e-02 5.04210e+00 9.63966e-01 19

36 57 1.00000e+00 5.02598e+00 4.11094e-01 17

37 58 1.00000e+00 5.02203e+00 6.04572e-01 14

Backtracking...

38 60 3.86740e-01 5.01287e+00 8.12598e-01 14

39 61 1.00000e+00 5.01036e+00 1.07048e+00 10

Backtracking...

40 63 2.48744e-01 4.99471e+00 3.52853e-01 10

41 64 1.00000e+00 4.99341e+00 8.89207e-01 95

Backtracking...

42 66 2.54049e-01 4.99044e+00 7.83691e-01 71

Backtracking...

43 68 1.98328e-01 4.98749e+00 4.74051e-01 65

Backtracking...

Backtracking...

44 71 8.60715e-02 4.98729e+00 5.06820e-01 56

45 72 1.00000e+00 4.98350e+00 3.42037e-01 22

Backtracking...

Backtracking...

46 75 4.72320e-02 4.98338e+00 3.28807e-01 18

Backtracking...

Backtracking...

47 78 7.07891e-02 4.98323e+00 2.25100e-01 17

Backtracking...

48 80 4.18919e-01 4.98293e+00 1.94093e-01 13

49 81 1.00000e+00 4.98238e+00 1.17359e-01 11

50 82 1.00000e+00 4.98198e+00 1.29208e-01 13

51 83 1.00000e+00 4.98051e+00 1.25856e-01 10

52 84 1.00000e+00 4.97900e+00 1.29891e-01 9

53 85 1.00000e+00 4.97872e+00 1.88020e-01 8

Backtracking...

54 87 4.30720e-03 4.97869e+00 1.87528e-01 8

55 88 1.00000e+00 4.97835e+00 4.69479e-02 8

56 89 1.00000e+00 4.97827e+00 3.12822e-02 8

57 90 1.00000e+00 4.97821e+00 2.65621e-02 13

58 91 1.00000e+00 4.97819e+00 6.62817e-02 14

Backtracking...

Backtracking...

59 94 5.17236e-04 4.97818e+00 6.93045e-02 14

Backtracking...

60 96 3.43483e-01 4.97814e+00 4.80769e-02 13

61 97 1.00000e+00 4.97811e+00 2.36317e-02 12

62 98 1.00000e+00 4.97811e+00 4.64090e-02 15

Backtracking...

Backtracking...

63 101 5.21036e-03 4.97811e+00 4.71775e-02 13

Backtracking...

Backtracking...

64 104 4.48009e-04 4.97811e+00 4.74027e-02 13

Backtracking...

65 106 8.98018e-02 4.97810e+00 3.76276e-02 13

Backtracking...

66 108 2.87381e-02 4.97808e+00 3.60121e-02 12

67 109 1.00000e+00 4.97804e+00 2.25433e-02 12

68 110 1.00000e+00 4.97799e+00 6.35032e-03 13

Backtracking...

69 112 1.72141e-01 4.97799e+00 6.71866e-03 12

70 113 1.00000e+00 4.97799e+00 5.32645e-03 15

Backtracking...

71 115 8.01806e-02 4.97799e+00 6.22308e-03 14

Backtracking...

72 117 3.96239e-01 4.97799e+00 9.08961e-03 14

73 118 1.00000e+00 4.97799e+00 5.18465e-03 14

74 119 1.00000e+00 4.97799e+00 4.70484e-03 15

75 120 1.00000e+00 4.97799e+00 1.20413e-02 15

Backtracking...

76 122 4.91569e-01 4.97799e+00 5.21229e-03 15

77 123 1.00000e+00 4.97799e+00 1.72499e-03 15

78 124 1.00000e+00 4.97799e+00 1.38505e-03 15

79 125 1.00000e+00 4.97799e+00 5.78246e-04 15

80 126 1.00000e+00 4.97799e+00 2.71256e-04 15

81 127 1.00000e+00 4.97799e+00 2.37731e-04 15

82 128 1.00000e+00 4.97799e+00 1.53940e-04 15

83 129 1.00000e+00 4.97799e+00 4.19501e-05 15

Directional derivative below progTol

residual =

0.1484

reconstructionError =

0.0516

Iter fEvals stepLen fVal optCond nnz

Backtracking...

1 3 1.60236e-04 5.06885e-01 2.34663e-01 256

2 4 1.00000e+00 5.06747e-01 2.32651e-01 256

Backtracking...

3 6 2.25218e-02 5.06389e-01 2.27449e-01 256

Backtracking...

4 8 1.82910e-02 5.06116e-01 2.19045e-01 243

Backtracking...

5 10 2.50012e-02 5.05909e-01 2.02543e-01 230

Backtracking...

6 12 6.56438e-02 5.05697e-01 1.79583e-01 210

Backtracking...

7 14 1.50413e-01 5.05521e-01 1.43745e-01 185

8 15 1.00000e+00 5.05385e-01 2.08634e-01 155

9 16 1.00000e+00 5.05144e-01 1.35624e-01 213

10 17 1.00000e+00 5.04926e-01 1.41787e-01 176

Backtracking...

11 19 3.68734e-02 5.04812e-01 1.33108e-01 161

Backtracking...

12 21 1.06018e-01 5.04695e-01 1.11461e-01 147

Backtracking...

13 23 2.31630e-01 5.04598e-01 1.30482e-01 128

14 24 1.00000e+00 5.04487e-01 1.69865e-01 108

15 25 1.00000e+00 5.04254e-01 1.81175e-01 104

Backtracking...

16 27 2.64380e-01 5.04018e-01 1.95298e-01 103

17 28 1.00000e+00 5.03631e-01 1.93785e-01 98

18 29 1.00000e+00 5.03090e-01 1.12675e-01 84

Backtracking...

19 31 1.49860e-01 5.02932e-01 9.69812e-02 73

20 32 1.00000e+00 5.02606e-01 9.59290e-02 57

Backtracking...

Backtracking...

21 35 1.81477e-02 5.02589e-01 1.48497e-01 55

22 36 1.00000e+00 5.02312e-01 1.45984e-01 46

Backtracking...

Backtracking...

23 39 8.50074e-02 5.02006e-01 2.19799e-01 27

Backtracking...

24 41 2.42453e-01 5.01938e-01 1.58443e-01 248

25 42 1.00000e+00 5.01897e-01 1.27765e-01 172

26 43 1.00000e+00 5.01855e-01 1.18496e-01 125

Backtracking...

27 45 3.09388e-01 5.01819e-01 1.06252e-01 97

28 46 1.00000e+00 5.01758e-01 1.13786e-01 72

Backtracking...

29 48 3.14004e-01 5.01709e-01 1.49059e-01 43

30 49 1.00000e+00 5.01571e-01 1.05665e-01 40

31 50 1.00000e+00 5.01059e-01 2.32989e-01 24

Backtracking...

32 52 1.55235e-01 5.00981e-01 1.55188e-01 155

Backtracking...

33 54 1.30240e-01 5.00876e-01 1.12352e-01 128

Backtracking...

34 56 6.52915e-03 5.00872e-01 1.12149e-01 114

Backtracking...

35 58 4.02910e-01 5.00750e-01 1.57582e-01 45

Backtracking...

36 60 2.11957e-03 5.00747e-01 1.55973e-01 44

Backtracking...

37 62 1.95066e-01 5.00675e-01 1.38185e-01 26

Backtracking...

38 64 1.91051e-03 5.00664e-01 1.40163e-01 26

Backtracking...

39 66 1.42964e-01 5.00432e-01 1.63985e-01 23

Backtracking...

Backtracking...

40 69 1.39894e-01 5.00275e-01 6.71169e-02 18

Backtracking...

Backtracking...

41 72 1.55481e-04 5.00259e-01 5.90533e-02 78

Backtracking...

Backtracking...

42 75 1.77622e-05 5.00243e-01 4.83415e-02 49

Backtracking...

43 77 3.47796e-02 5.00232e-01 3.59950e-02 37

Backtracking...

44 79 4.07696e-01 5.00154e-01 4.49068e-02 30

Backtracking...

45 81 1.00000e-03 5.00146e-01 4.15653e-02 30

46 82 1.00000e+00 5.00122e-01 8.86935e-02 21

Backtracking...

Backtracking...

47 85 1.06394e-06 5.00119e-01 8.87844e-02 21

Backtracking...

48 87 2.58230e-01 5.00042e-01 7.43836e-02 19

Backtracking...

Backtracking...

49 90 3.44717e-04 5.00039e-01 7.22945e-02 19

Backtracking...

50 92 4.86071e-01 4.99980e-01 5.27179e-02 18

Backtracking...

51 94 1.75207e-01 4.99958e-01 4.10484e-02 17

Backtracking...

Backtracking...

52 97 2.51529e-04 4.99956e-01 3.83362e-02 17

Backtracking...

53 99 2.99588e-01 4.99923e-01 4.59696e-02 16

54 100 1.00000e+00 4.99882e-01 3.31557e-02 13

Backtracking...

Backtracking...

55 103 8.47893e-05 4.99879e-01 3.47154e-02 13

Backtracking...

56 105 3.64022e-01 4.99860e-01 2.26680e-02 13

57 106 1.00000e+00 4.99822e-01 4.35095e-02 43

Backtracking...

58 108 8.33157e-03 4.99821e-01 4.41126e-02 39

Backtracking...

Backtracking...

59 111 1.78770e-03 4.99821e-01 4.40774e-02 38

Backtracking...

Backtracking...

60 114 1.63657e-02 4.99820e-01 4.33328e-02 33

Backtracking...

61 116 1.00000e-03 4.99819e-01 4.19340e-02 30

Backtracking...

62 118 2.45278e-03 4.99818e-01 4.15687e-02 29

Backtracking...

63 120 2.68086e-01 4.99817e-01 4.30702e-02 23

Backtracking...

64 122 4.58076e-03 4.99816e-01 4.25081e-02 22

65 123 1.00000e+00 4.99808e-01 1.02988e-02 14

Backtracking...

66 125 1.00000e-03 4.99808e-01 1.08631e-02 23

Backtracking...

Backtracking...

67 128 2.78058e-05 4.99807e-01 1.34438e-02 21

Backtracking...

68 130 5.91947e-02 4.99806e-01 1.70758e-02 16

Backtracking...

Backtracking...

Backtracking...

69 134 1.12997e-05 4.99806e-01 1.75139e-02 14

Backtracking...

Backtracking...

Backtracking...

Backtracking...

70 139 6.92373e-08 4.99806e-01 9.47738e-03 11

Backtracking...

Backtracking...

Backtracking...

71 143 6.71580e-08 4.99805e-01 1.01570e-02 17

Backtracking...

Backtracking...

72 146 1.03015e-05 4.99805e-01 1.09567e-02 14

Backtracking...

Backtracking...

73 149 1.27424e-06 4.99805e-01 1.09161e-02 16

Backtracking...

Backtracking...

74 152 4.20603e-04 4.99805e-01 1.29725e-02 14

Backtracking...

75 154 2.71789e-01 4.99801e-01 1.65521e-02 12

Backtracking...

76 156 3.59669e-01 4.99790e-01 1.49572e-02 11

Backtracking...

77 158 1.00000e-03 4.99790e-01 1.46746e-02 11

Backtracking...

78 160 2.04111e-01 4.99788e-01 1.18333e-02 11

Backtracking...

79 162 4.47321e-01 4.99784e-01 5.64353e-03 11

80 163 1.00000e+00 4.99782e-01 7.52085e-03 12

Backtracking...

81 165 1.17572e-02 4.99782e-01 6.50864e-03 20

Backtracking...

82 167 1.81064e-03 4.99782e-01 5.86296e-03 15

83 168 1.00000e+00 4.99781e-01 3.70905e-03 17

Backtracking...

84 170 1.00000e-03 4.99781e-01 3.10069e-03 16

Backtracking...

85 172 6.34585e-02 4.99781e-01 1.41734e-03 14

86 173 1.00000e+00 4.99780e-01 2.46138e-03 14

Backtracking...

Backtracking...

87 176 2.12295e-03 4.99780e-01 1.41918e-03 14

88 177 1.00000e+00 4.99780e-01 1.25534e-03 14

89 178 1.00000e+00 4.99780e-01 3.82409e-03 15

Backtracking...

90 180 3.79342e-02 4.99780e-01 3.33626e-03 15

Backtracking...

91 182 5.00220e-03 4.99780e-01 3.38568e-03 15

Backtracking...

92 184 3.86838e-01 4.99780e-01 2.71508e-03 15

93 185 1.00000e+00 4.99780e-01 1.49377e-03 17

Backtracking...

Backtracking...

94 188 4.42474e-02 4.99780e-01 1.45697e-03 16

Backtracking...

95 190 5.95538e-03 4.99780e-01 1.48054e-03 16

96 191 1.00000e+00 4.99780e-01 1.78872e-03 15

97 192 1.00000e+00 4.99780e-01 6.27976e-04 15

98 193 1.00000e+00 4.99780e-01 8.24886e-04 15

99 194 1.00000e+00 4.99780e-01 6.87867e-04 15

100 195 1.00000e+00 4.99780e-01 3.09047e-04 15

101 196 1.00000e+00 4.99780e-01 1.95504e-04 15

102 197 1.00000e+00 4.99780e-01 2.07888e-04 15

103 198 1.00000e+00 4.99780e-01 1.90420e-04 15

104 199 1.00000e+00 4.99780e-01 1.05667e-04 15

105 200 1.00000e+00 4.99780e-01 6.84437e-05 15

Directional derivative below progTol

residual =

0.0148

reconstructionError =

0.0052

Iter fEvals stepLen fVal optCond nnz

Backtracking...

1 3 1.60237e-04 5.00688e-02 2.34886e-02 256

2 4 1.00000e+00 5.00675e-02 2.32872e-02 256

Backtracking...

3 6 2.25313e-02 5.00639e-02 2.27664e-02 256

Backtracking...

4 8 1.82917e-02 5.00612e-02 2.19247e-02 243

Backtracking...

5 10 2.50170e-02 5.00591e-02 2.02739e-02 230

Backtracking...

6 12 6.56545e-02 5.00570e-02 1.79802e-02 210

Backtracking...

7 14 1.50108e-01 5.00552e-02 1.43878e-02 185

8 15 1.00000e+00 5.00538e-02 2.08023e-02 155

9 16 1.00000e+00 5.00514e-02 1.35429e-02 213

10 17 1.00000e+00 5.00492e-02 1.42249e-02 176

Backtracking...

11 19 3.60938e-02 5.00481e-02 1.33511e-02 161

Backtracking...

12 21 1.05507e-01 5.00470e-02 1.12078e-02 146

Backtracking...

13 23 2.23302e-01 5.00460e-02 1.28397e-02 128

14 24 1.00000e+00 5.00449e-02 1.68841e-02 109

15 25 1.00000e+00 5.00424e-02 1.71820e-02 105

Backtracking...

16 27 1.76697e-01 5.00400e-02 1.79058e-02 94

17 28 1.00000e+00 5.00380e-02 2.48689e-02 77

Backtracking...

18 30 4.05591e-01 5.00354e-02 1.15970e-02 204

19 31 1.00000e+00 5.00318e-02 1.51273e-02 112

Backtracking...

20 33 2.12182e-01 5.00312e-02 2.04940e-02 70

21 34 1.00000e+00 5.00287e-02 1.81159e-02 92

Backtracking...

22 36 3.66422e-01 5.00237e-02 1.19228e-02 42

23 37 1.00000e+00 5.00200e-02 1.10827e-02 31

24 38 1.00000e+00 5.00160e-02 1.29873e-02 22

Backtracking...

Backtracking...

25 41 2.57354e-01 5.00155e-02 2.46521e-02 20

Backtracking...

26 43 3.44045e-01 5.00133e-02 1.51349e-02 254

27 44 1.00000e+00 5.00123e-02 1.12316e-02 197

28 45 1.00000e+00 5.00113e-02 1.30541e-02 134

29 46 1.00000e+00 5.00106e-02 1.60556e-02 80

Backtracking...

30 48 3.14852e-01 5.00095e-02 1.23390e-02 67

31 49 1.00000e+00 5.00089e-02 1.24535e-02 66

32 50 1.00000e+00 5.00088e-02 1.48105e-02 46

33 51 1.00000e+00 5.00065e-02 7.97435e-03 42

Backtracking...

34 53 3.30928e-01 5.00061e-02 8.89595e-03 35

Backtracking...

35 55 1.86199e-01 5.00059e-02 8.23941e-03 32

Backtracking...

Backtracking...

36 58 1.00000e-06 5.00057e-02 6.43879e-03 32

37 59 1.00000e+00 5.00040e-02 6.64221e-03 29

Backtracking...

38 61 4.64992e-03 5.00039e-02 6.50540e-03 29

Backtracking...

39 63 2.89876e-01 5.00031e-02 8.43046e-03 26

Backtracking...

40 65 1.52717e-02 5.00025e-02 7.55624e-03 25

41 66 1.00000e+00 5.00008e-02 6.59390e-03 22

42 67 1.00000e+00 5.00003e-02 4.99323e-03 21

Backtracking...

43 69 4.18505e-01 5.00000e-02 3.26197e-03 150

Backtracking...

44 71 1.81638e-01 5.00000e-02 4.00789e-03 126

Backtracking...

45 73 4.36390e-03 5.00000e-02 3.96882e-03 124

Backtracking...

Backtracking...

46 76 3.26264e-02 5.00000e-02 4.04217e-03 115

Backtracking...

47 78 2.14299e-03 5.00000e-02 4.03723e-03 107

Backtracking...

48 80 1.00000e-03 5.00000e-02 3.97649e-03 106

Backtracking...

49 82 1.00000e-03 4.99999e-02 3.87416e-03 104

Backtracking...

50 84 3.92451e-03 4.99999e-02 3.48224e-03 102

Backtracking...

51 86 1.13284e-01 4.99999e-02 3.83733e-03 84

Backtracking...

52 88 2.82300e-02 4.99999e-02 3.65085e-03 81

53 89 1.00000e+00 4.99997e-02 2.53542e-03 66

Backtracking...

54 91 2.46015e-01 4.99996e-02 3.51781e-03 65

Backtracking...

Backtracking...

55 94 3.50341e-04 4.99996e-02 3.59789e-03 58

Backtracking...

56 96 1.00000e-03 4.99995e-02 2.92133e-03 51

Backtracking...

57 98 9.23513e-03 4.99995e-02 2.95674e-03 49

Backtracking...

58 100 1.00000e-03 4.99995e-02 3.04431e-03 44

59 101 1.00000e+00 4.99991e-02 4.12260e-03 38

Backtracking...

Backtracking...

60 104 5.23265e-06 4.99991e-02 4.06727e-03 37

Backtracking...

61 106 1.00000e-03 4.99990e-02 3.61422e-03 37

62 107 1.00000e+00 4.99989e-02 4.04238e-03 21

Backtracking...

63 109 2.88973e-03 4.99989e-02 3.43913e-03 21

Backtracking...

64 111 2.29268e-01 4.99987e-02 2.12131e-03 21

65 112 1.00000e+00 4.99985e-02 2.67954e-03 28

Backtracking...

Backtracking...

Backtracking...

66 116 4.15876e-06 4.99985e-02 2.63678e-03 27

Progress in parameters or objective below progTol

residual =

0.0015

reconstructionError =

6.2500e-04

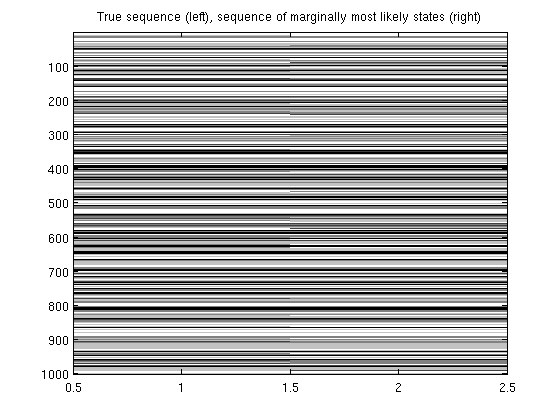
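The `optCond` column in the logs above generally shrinks as each solve converges. One common first-order optimality measure for an L1-regularized objective is the size of the minimum-norm subgradient, which is zero exactly at a minimizer of a convex objective. Whether `L1General2_PSSgb` computes `optCond` this way is an assumption not confirmed by this document. A standalone sketch on a separable toy problem with made-up values:

```matlab
% Illustrative separable problem: F(w) = sum((w - a).^2) + sum(lambda.*abs(w)).
a      = [1.5; -0.2; 0.4; -3.0];
lambda = ones(4,1);
gradSmooth = @(w) 2*(w - a);                   % gradient of the smooth part

% Minimum-norm element of the subdifferential of F at w, given gs = gradSmooth(w):
%   w ~= 0: the subgradient is unique, gs + lambda.*sign(w)
%   w == 0: pick the value in gs + [-lambda, lambda] closest to zero
minNormSubgrad = @(w, gs) (w ~= 0).*(gs + lambda.*sign(w)) + ...
                          (w == 0).*sign(gs).*max(abs(gs) - lambda, 0);

soft  = @(z, t) sign(z).*max(abs(z) - t, 0);
wStar = soft(a, lambda/2);                     % exact minimizer of this separable F
wZero = zeros(4,1);

optStar = norm(minNormSubgrad(wStar, gradSmooth(wStar)), inf);
optZero = norm(minNormSubgrad(wZero, gradSmooth(wZero)), inf);
fprintf('optimality measure at the minimizer: %g, at w = 0: %g\n', optStar, optZero);
```

This prints 0 at the minimizer and 5 at `w = 0`.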

Chain-Structured Conditional Random Field

load wordData.mat

lambda = 1;

[w,v_start,v_end,v] = crfChain_initWeights(nFeatures,nStates,'zeros');

featureStart = cumsum([1 nFeatures(1:end)]);

sentences = crfChain_initSentences(y);

nSentences = size(sentences,1);

maxSentenceLength = 1+max(sentences(:,2)-sentences(:,1));

fprintf('Training chain-structured CRF\n');

wv = [w(:);v_start;v_end;v(:)];

funObj = @(wv)crfChain_loss(wv,X,y,nStates,nFeatures,featureStart,sentences);

wv = L1General2_PSSgb(funObj,wv,lambda*ones(size(wv)),options);

[w,v_start,v_end,v] = crfChain_splitWeights(wv,featureStart,nStates);

trainErr = 0;

trainZ = 0;

yhat = zeros(size(y));

for s = 1:nSentences

y_s = y(sentences(s,1):sentences(s,2));

[nodePot,edgePot]=crfChain_makePotentials(X,w,v_start,v_end,v,nFeatures,featureStart,sentences,s);

[nodeBel,edgeBel,logZ] = crfChain_infer(nodePot,edgePot);

[~, yhat(sentences(s,1):sentences(s,2))] = max(nodeBel,[],2);

end

trainErrRate = sum(y~=yhat)/length(y)
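`crfChain_infer` is called above but not listed here. For a chain, node marginals and the log partition function can be computed with the forward-backward algorithm. The sketch below assumes positive potentials, with `nodePot` of size nNodes-by-nStates and a single nStates-by-nStates `edgePot` shared by all edges (`edgePot(i,j)` scores state i followed by state j). Those shapes and the toy values are assumptions rather than the library's documented interface, and the separate start/end potentials (`v_start`, `v_end`) used by the demo are omitted.

```matlab
% Sketch: normalized forward-backward on a chain (toy potentials, assumed shapes).
nodePot = [1 2 1; 3 1 1; 1 1 4; 2 2 1];   % nNodes x nStates
edgePot = [2 1 1; 1 2 1; 1 1 2];          % nStates x nStates, shared by all edges
[nNodes, nStates] = size(nodePot);

% Forward pass, normalizing each row to avoid overflow.
alpha = zeros(nNodes, nStates);
kappa = zeros(nNodes, 1);                  % per-node normalizers
alpha(1,:) = nodePot(1,:);
kappa(1) = sum(alpha(1,:));
alpha(1,:) = alpha(1,:)/kappa(1);
for n = 2:nNodes
    alpha(n,:) = nodePot(n,:) .* (alpha(n-1,:)*edgePot);
    kappa(n) = sum(alpha(n,:));
    alpha(n,:) = alpha(n,:)/kappa(n);
end
logZ = sum(log(kappa));                    % log partition function

% Backward pass (any per-row scaling cancels when the marginals are normalized).
beta = ones(nNodes, nStates);
for n = nNodes-1:-1:1
    tmp = edgePot * (nodePot(n+1,:) .* beta(n+1,:))';
    beta(n,:) = tmp' / sum(tmp);
end

nodeBel = alpha .* beta;
nodeBel = bsxfun(@rdivide, nodeBel, sum(nodeBel,2));   % marginals p(y_n = s)
[~, yhatMarginal] = max(nodeBel, [], 2);               % marginally most likely states
```

The last line is the same decoding rule the demo applies to `nodeBel`.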

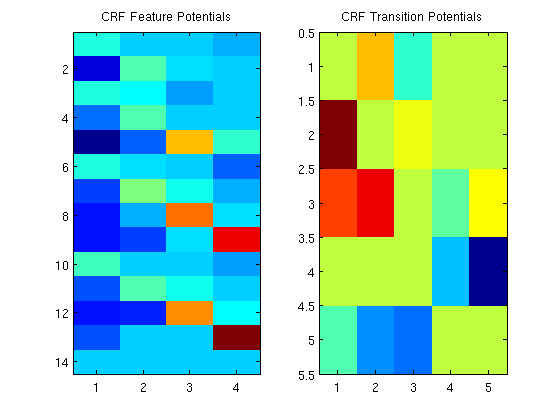

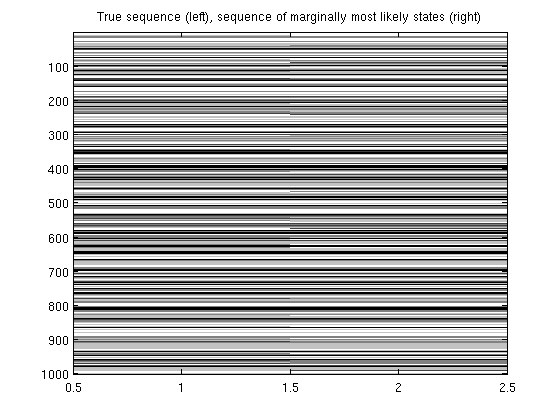

figure;

imagesc([y yhat]);

colormap gray

title('True sequence (left), sequence of marginally most likely states (right)');

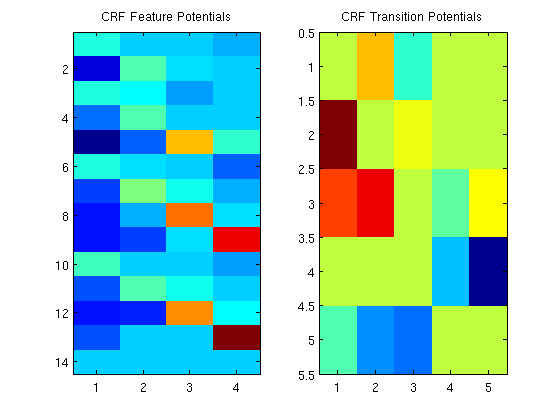
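The right-hand column above shows, at each position, the state with the largest marginal probability; that sequence need not be the single most probable joint sequence. The jointly most probable sequence can be found with the Viterbi algorithm, a different decoding rule from the one the demo uses. A standalone sketch, assuming positive toy potentials with `nodePot` of size nNodes-by-nStates and one shared nStates-by-nStates `edgePot` (start/end potentials omitted):

```matlab
% Sketch: Viterbi (max-product in the log domain) on a chain; toy potentials, assumed shapes.
nodePot = [1 2 1; 3 1 1; 1 1 4; 2 2 1];   % nNodes x nStates
edgePot = [2 1 1; 1 2 1; 1 1 2];          % nStates x nStates, shared by all edges
[nNodes, nStates] = size(nodePot);
logNode = log(nodePot);
logEdge = log(edgePot);

delta   = zeros(nNodes, nStates);          % best log score of any path ending in each state
backptr = zeros(nNodes, nStates);          % best predecessor state
delta(1,:) = logNode(1,:);
for n = 2:nNodes
    for j = 1:nStates
        [best, backptr(n,j)] = max(delta(n-1,:)' + logEdge(:,j));
        delta(n,j) = best + logNode(n,j);
    end
end

% Trace back the highest-scoring sequence.
yMAP = zeros(nNodes, 1);
[~, yMAP(nNodes)] = max(delta(nNodes,:));
for n = nNodes-1:-1:1
    yMAP(n) = backptr(n+1, yMAP(n+1));
end
fprintf('jointly most likely sequence: %s\n', mat2str(yMAP'));
```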

figure;

subplot(1,2,1);

imagesc(w);

title('CRF Feature Potentials');

subplot(1,2,2);

imagesc([v_start' 0;v v_end]);

title('CRF Transition Potentials');

pause;

Training chain-structured CRF

Iter fEvals stepLen fVal optCond nnz

1 2 4.86086e-04 1.14143e+03 1.17753e+02 78

2 3 1.00000e+00 6.63197e+02 3.76440e+01 70

3 4 1.00000e+00 5.82276e+02 4.57577e+01 68

4 5 1.00000e+00 5.25860e+02 1.59569e+01 77

5 6 1.00000e+00 4.91382e+02 6.99251e+00 71

6 7 1.00000e+00 4.80566e+02 4.91975e+00 75

7 8 1.00000e+00 4.75227e+02 3.16558e+00 75

8 9 1.00000e+00 4.70818e+02 4.92525e+00 73

9 10 1.00000e+00 4.68909e+02 5.41885e+00 68

10 11 1.00000e+00 4.66096e+02 2.13411e+00 74

11 12 1.00000e+00 4.64156e+02 2.64028e+00 69

12 13 1.00000e+00 4.62693e+02 3.33599e+00 65

13 14 1.00000e+00 4.61455e+02 1.75989e+00 66

14 15 1.00000e+00 4.60940e+02 1.66060e+00 66

15 16 1.00000e+00 4.60451e+02 1.22923e+00 65

16 17 1.00000e+00 4.59987e+02 1.48194e+00 66

17 18 1.00000e+00 4.59660e+02 1.21257e+00 64

18 19 1.00000e+00 4.59362e+02 2.13169e+00 61

19 20 1.00000e+00 4.59175e+02 1.26938e+00 59

20 21 1.00000e+00 4.59062e+02 6.69563e-01 60

21 22 1.00000e+00 4.58984e+02 1.11080e+00 60

22 23 1.00000e+00 4.58956e+02 5.26510e-01 59

23 24 1.00000e+00 4.58917e+02 4.26663e-01 59

24 25 1.00000e+00 4.58896e+02 2.76077e-01 58

25 26 1.00000e+00 4.58883e+02 2.61146e-01 58

26 27 1.00000e+00 4.58861e+02 2.19739e-01 56

27 28 1.00000e+00 4.58849e+02 3.06288e-01 56

28 29 1.00000e+00 4.58835e+02 2.35700e-01 58

29 30 1.00000e+00 4.58829e+02 1.19030e-01 58

30 31 1.00000e+00 4.58823e+02 8.89767e-02 58

31 32 1.00000e+00 4.58819e+02 1.49742e-01 58

32 33 1.00000e+00 4.58817e+02 5.09001e-02 57

33 34 1.00000e+00 4.58816e+02 4.17467e-02 58

34 35 1.00000e+00 4.58815e+02 2.90042e-02 59

35 36 1.00000e+00 4.58814e+02 4.90847e-02 59

36 37 1.00000e+00 4.58814e+02 2.03209e-02 59

37 38 1.00000e+00 4.58814e+02 1.70537e-02 59

38 39 1.00000e+00 4.58814e+02 1.05995e-02 59

39 40 1.00000e+00 4.58814e+02 2.75948e-02 58

40 41 1.00000e+00 4.58814e+02 6.81921e-03 58

41 42 1.00000e+00 4.58814e+02 5.25640e-03 58

42 43 1.00000e+00 4.58814e+02 7.96413e-03 58

43 44 1.00000e+00 4.58813e+02 8.19545e-03 58

44 45 1.00000e+00 4.58813e+02 9.21045e-03 57

45 46 1.00000e+00 4.58813e+02 2.46348e-03 57

46 47 1.00000e+00 4.58813e+02 2.04623e-03 57

47 48 1.00000e+00 4.58813e+02 1.51764e-03 57

48 49 1.00000e+00 4.58813e+02 1.90082e-03 57

49 50 1.00000e+00 4.58813e+02 6.58426e-04 57

50 51 1.00000e+00 4.58813e+02 4.29058e-04 57

51 52 1.00000e+00 4.58813e+02 3.59842e-04 57

52 53 1.00000e+00 4.58813e+02 2.59996e-04 57

53 54 1.00000e+00 4.58813e+02 1.56046e-04 57

54 55 1.00000e+00 4.58813e+02 1.83365e-04 57

55 56 1.00000e+00 4.58813e+02 5.31323e-05 57

56 57 1.00000e+00 4.58813e+02 3.68219e-05 57

57 58 1.00000e+00 4.58813e+02 2.98633e-05 57

Directional derivative below progTol

trainErrRate =

0.1030

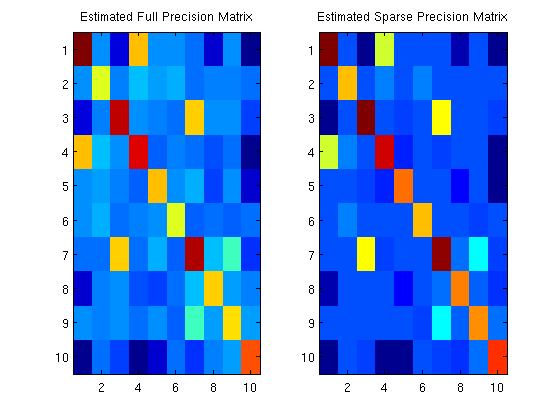

Graphical Lasso

lambda = .05;

nNodes = 10;

adj = triu(rand(nNodes) > .75,1);

adj = setdiag(adj+adj',1);
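The true graph is a random symmetric 0/1 pattern, and the diagonal is switched on so that `P = randn(nNodes).*adj` has nonzero diagonal entries. A self-contained sketch of these two lines, assuming `setdiag(A,1)` sets every diagonal entry of `A` to 1 (the helper's body is not shown in this demo):

```matlab
nNodes = 5;
adj = triu(rand(nNodes) > .75, 1);   % random strictly upper-triangular pattern
adj = double(adj + adj');            % symmetrize; entries stay 0 or 1
adj(1:nNodes+1:end) = 1;             % set the diagonal to 1 (assumed effect of setdiag(adj,1))
```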

P = randn(nNodes).*adj;

P = (P+P')/2;

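% Shift the diagonal by tau*I, doubling tau until the matrix is positive definite; X is then the true precision matrix

tau = 1;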

X = P + tau*eye(nNodes);

while ~ispd(X)

tau = tau*2;

X = P + tau*eye(nNodes);

end
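Adding `tau*eye(nNodes)` raises every eigenvalue of the symmetric matrix `P` by `tau`, so the loop stops at the first doubling where `tau` exceeds the magnitude of `P`'s most negative eigenvalue. The sketch below replaces the demo's `ispd` helper with a Cholesky test (an assumption about what `ispd` checks) on a small hypothetical `P` whose eigenvalues are 0 and plus or minus sqrt(5):

```matlab
P = [0 2 0; 2 0 -1; 0 -1 0];   % hypothetical symmetric, indefinite matrix
tau = 1;
X = P + tau*eye(3);
[~, p] = chol(X);              % p == 0 exactly when X is positive definite
while p ~= 0
    tau = tau*2;               % double the diagonal shift and retest
    X = P + tau*eye(3);
    [~, p] = chol(X);
end
```

Here tau = 1 and tau = 2 leave the smallest eigenvalue negative (1 - sqrt(5) and 2 - sqrt(5)), so the loop stops at tau = 4.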

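mu = randn(nNodes,1);

% The covariance is the inverse of the precision matrix; R is a lower-triangular factor with R*R' = C

C = inv(X);

R = chol(C)';

% X is now reused for the data matrix: each row is an independent draw from N(mu, C) (nInstances carries over from earlier in the demo)

X = zeros(nInstances,nNodes);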

for i = 1:nInstances

X(i,:) = (mu + R*randn(nNodes,1))';

end
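Since `R = chol(C)'` is lower triangular with `R*R' = C`, each draw `mu + R*z` with standard normal `z` has covariance `C`. The row-by-row loop can be vectorized; a sketch with a hypothetical 3-by-3 covariance, checking that the sample moments land near `mu` and `C`:

```matlab
% Hypothetical mean and covariance (C is symmetric positive definite)
mu = [1; -2; 0.5];
C = [2 0.5 0; 0.5 1 0.3; 0 0.3 1.5];
R = chol(C)';                       % lower-triangular factor with R*R' == C
n = 200000;
Z = randn(n, 3);                    % rows are independent standard normal vectors
Xs = bsxfun(@plus, mu', Z * R');    % row i equals (mu + R*Z(i,:)')'
```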

X = standardizeCols(X);
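`standardizeCols` is a helper whose body is not shown. Assuming it centers each column and scales it to unit standard deviation, the next line's `cov(X)` is the sample correlation matrix, with ones on its diagonal. A minimal stand-in under that assumption (`standardize` is a hypothetical name):

```matlab
% Hypothetical stand-in: subtract column means, divide by column standard deviations
standardize = @(A) bsxfun(@rdivide, bsxfun(@minus, A, mean(A)), std(A));
A = [1 10; 2 20; 3 30; 4 40];
S = standardize(A);
sigma_emp = cov(S);   % correlation matrix of A: unit diagonal
```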

sigma_emp = cov(X);

% Optimize every entry of the precision matrix (all nNodes^2 linear indices)

nonZero = find(ones(nNodes));

funObj = @(x)sparsePrecisionObj(x,nNodes,nonZero,sigma_emp);
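`sparsePrecisionObj` is not listed here. Assuming it is the Gaussian negative log-likelihood written in terms of the precision matrix K with constants dropped, f(K) = -log det K + trace(Sigma_emp*K), its gradient is Sigma_emp - inv(K), which vanishes at K = inv(Sigma_emp). The sketch checks that gradient with a central finite difference on a hypothetical covariance:

```matlab
% Hypothetical empirical covariance (symmetric positive definite)
Sigma = [1 0.3 0; 0.3 1 0.2; 0 0.2 1];
% Gaussian negative log-likelihood in the precision K, constants dropped
nll  = @(K) -log(det(K)) + trace(Sigma * K);
% Its gradient with respect to the entries of a symmetric K
grad = @(K) Sigma - inv(K);
% Central finite difference for the (1,2) entry at K = I
K0 = eye(3);
h = 1e-6;
E = zeros(3); E(1,2) = 1;
fd = (nll(K0 + h*E) - nll(K0 - h*E)) / (2*h);
G = grad(K0);
% Without a penalty the minimizer is the inverse empirical covariance
Kstar = inv(Sigma);
```

Under this assumption, the unpenalized `Kfull` found by `minFunc` should approach `inv(sigma_emp)`.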

Kfull = eye(nNodes);

fprintf('Fitting full Gaussian graphical model\n');

Kfull(nonZero) = minFunc(funObj,Kfull(nonZero),options);

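% Same objective as the full model; the sparse fit differs only in the L1 penalty passed to L1General2_PSSgb

funObj = @(x)sparsePrecisionObj(x,nNodes,nonZero,sigma_emp);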

Ksparse = eye(nNodes);

fprintf('Fitting sparse Gaussian graphical model\n');

Ksparse(nonZero) = L1General2_PSSgb(funObj,Ksparse(nonZero),lambda*ones(nNodes*nNodes,1),options);
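The penalty vector `lambda*ones(nNodes*nNodes,1)` charges every entry, diagonal included, the same L1 cost, assuming `L1General2_PSSgb` minimizes `funObj(x) + sum(lambda.*abs(x))`. Why that cost produces exact zeros is easiest to see in one dimension: the minimizer of `0.5*(x-a)^2 + lambda*abs(x)` is the soft-threshold of `a`. This scalar illustration is not the PSSgb algorithm itself:

```matlab
% Closed-form minimizer of 0.5*(x - a)^2 + lambda*|x|, applied elementwise
softThresh = @(a, lambda) sign(a) .* max(abs(a) - lambda, 0);
a = [0.30 -0.02 0.04 -0.50];   % hypothetical unpenalized values
lambda = .05;                  % same value as the demo's lambda
x = softThresh(a, lambda);     % -> [0.25 0 0 -0.45]; entries with |a| <= lambda become exactly 0
```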

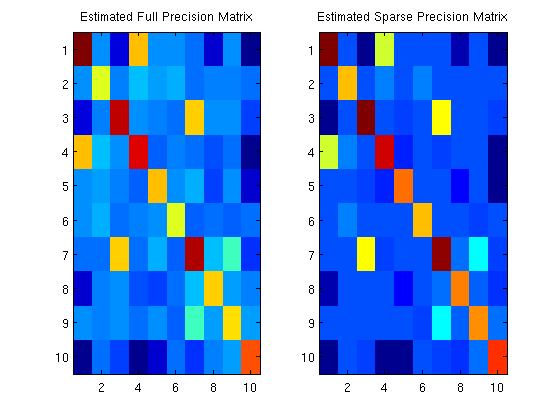

figure;

subplot(1,2,1);

imagesc(Kfull);

title('Estimated Full Precision Matrix');

subplot(1,2,2);

imagesc(Ksparse);

title('Estimated Sparse Precision Matrix');

pause;

Fitting full Gaussian graphical model

Iteration FunEvals Step Length Function Val Opt Cond

1 4 4.56509e-01 8.71032e+00 3.59715e-01

2 5 1.00000e+00 8.36687e+00 2.68547e-01

3 6 1.00000e+00 8.03081e+00 1.37907e-01

4 7 1.00000e+00 7.89537e+00 1.20463e-01

5 8 1.00000e+00 7.82045e+00 6.59930e-02

6 9 1.00000e+00 7.79321e+00 4.23325e-02

7 10 1.00000e+00 7.78270e+00 4.04436e-02

8 11 1.00000e+00 7.77689e+00 2.48096e-02

9 12 1.00000e+00 7.77590e+00 1.28531e-02

10 13 1.00000e+00 7.77565e+00 7.40367e-03

11 14 1.00000e+00 7.77539e+00 4.47854e-03

12 15 1.00000e+00 7.77522e+00 5.34326e-03

13 16 1.00000e+00 7.77496e+00 4.07508e-03

14 17 1.00000e+00 7.77467e+00 2.88622e-03

15 19 2.24018e-01 7.77463e+00 2.41829e-03

16 20 1.00000e+00 7.77458e+00 6.65333e-04

17 21 1.00000e+00 7.77458e+00 8.04254e-05

18 22 1.00000e+00 7.77458e+00 1.78096e-05

19 23 1.00000e+00 7.77458e+00 1.18396e-05

Directional Derivative below progTol

Fitting sparse Gaussian graphical model

Iter fEvals stepLen fVal optCond nnz

1 2 9.68356e-02 1.02198e+01 5.51510e-01 68

Backtracking...

Halving Step Size

2 4 5.00000e-01 9.47195e+00 2.65052e-01 68

3 5 1.00000e+00 9.30345e+00 1.55103e-01 70

4 6 1.00000e+00 9.14340e+00 9.95526e-02 66

5 7 1.00000e+00 9.11583e+00 8.57312e-02 58

6 8 1.00000e+00 9.09648e+00 3.34571e-02 62

7 9 1.00000e+00 9.09328e+00 2.54082e-02 60

8 10 1.00000e+00 9.09255e+00 1.75467e-02 62

9 11 1.00000e+00 9.09176e+00 7.25425e-03 58

10 12 1.00000e+00 9.09167e+00 4.48355e-03 60

11 13 1.00000e+00 9.09164e+00 1.20526e-03 60

12 14 1.00000e+00 9.09163e+00 1.31647e-03 58

13 15 1.00000e+00 9.09162e+00 1.40570e-03 58

14 16 1.00000e+00 9.09162e+00 6.74013e-04 58

15 17 1.00000e+00 9.09162e+00 4.52143e-04 58

16 18 1.00000e+00 9.09162e+00 1.52627e-04 58

17 19 1.00000e+00 9.09162e+00 1.13633e-04 58

18 20 1.00000e+00 9.09162e+00 1.06931e-04 58

19 21 1.00000e+00 9.09162e+00 1.21023e-04 58

20 22 1.00000e+00 9.09162e+00 2.43673e-05 58

21 23 1.00000e+00 9.09162e+00 1.73472e-05 58

22 24 1.00000e+00 9.09162e+00 9.34430e-06 58

First-order optimality below optTol

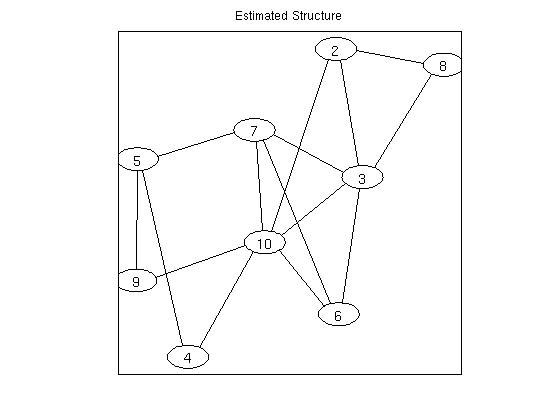

Markov Random Field Structure Learning

lambda = 5;

nInstances = 500;

nNodes = 10;

edgeDensity = .5;

nStates = 2;

ising = 1;

tied = 0;

useMex = 1;

y = UGM_generate(nInstances,0,nNodes,edgeDensity,nStates,ising,tied);

adjInit = fullAdjMatrix(nNodes);

edgeStruct = UGM_makeEdgeStruct(adjInit,nStates,useMex);
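Structure learning starts from the complete graph; the `lambda = 5` set above is presumably the L1 weight that later prunes edges. A sketch of that starting point, assuming `fullAdjMatrix(n)` returns `ones(n) - eye(n)`; the edge list below comes from `find` on the upper triangle, and `UGM_makeEdgeStruct` may order edges differently:

```matlab
nNodes = 10;
% Complete graph without self-loops (assumed equivalent of fullAdjMatrix(nNodes))
adjInit = ones(nNodes) - eye(nNodes);
% One row per undirected edge, taken from the upper triangle
[i, j] = find(triu(adjInit, 1));
edgeEnds = [i j];
nEdges = size(edgeEnds, 1);    % nNodes*(nNodes-1)/2 = 45
```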

Xnode = ones(nInstances,1,nNodes);

Xedge = ones(nInstances,1,edgeStruct.nEdges);

[nodeMap,edgeMap] = UGM_makeMRFmaps(nNodes,edgeStruct,ising,tied);

nNodeParams = max(nodeMap(:));

nParams = max(edgeMap(:));

nEdgeParams = nParams-nNodeParams;
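Since `nNodeParams = max(nodeMap(:))` and `nEdgeParams = nParams - nNodeParams`, the node parameters occupy indices `1:nNodeParams` and the edge parameters follow. A common way to use that split in structure learning, shown as a hedged sketch with hypothetical counts rather than the demo's own next lines, is to leave node parameters unpenalized and put the L1 weight only on edge parameters, so an edge drops out exactly when its parameters are driven to zero:

```matlab
% Hypothetical counts (not read from the demo's nodeMap/edgeMap)
nNodeParams = 10;
nParams = 55;
lambda = 5;
nEdgeParams = nParams - nNodeParams;
% Node parameters unpenalized; L1 weight on edge parameters only
lambdaVec = [zeros(nNodeParams,1); lambda*ones(nEdgeParams,1)];
```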