To avoid costly global illumination computations at render time, we pre-compute the outgoing light field of a given lamp and store the resulting radiance values in a four-dimensional data structure. This discretized lighting information can later be used to reconstruct the radiance emitted by the light source at every point in every direction. Instead of being derived from a global illumination solution, a Canned Light Source can also be generated by resampling measured data or simply by computing radiance values to achieve special effects.
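The reconstruction step described above can be sketched as a lookup in a regular four-dimensional grid with quadrilinear interpolation. This is a minimal illustration only: the grid layout, the index order `(u, v, s, t)`, and the helper names `make_light_field` and `reconstruct` are assumptions made for this example and are not taken from the original implementation.

```python
from itertools import product
from math import floor


def make_light_field(nu, nv, ns, nt, radiance):
    """Tabulate radiance(u, v, s, t) on an integer grid (nested lists)."""
    return [[[[radiance(u, v, s, t) for t in range(nt)]
              for s in range(ns)]
             for v in range(nv)]
            for u in range(nu)]


def _cell(x, n):
    """Return the lower grid index and fractional offset, clamped to the grid."""
    x = min(max(x, 0.0), n - 1.0)
    i = min(int(floor(x)), n - 2) if n > 1 else 0
    return i, x - i


def reconstruct(field, u, v, s, t):
    """Quadrilinear interpolation of the stored radiance at (u, v, s, t).

    Coordinates are given in grid units; values outside the grid are clamped.
    """
    dims = (len(field), len(field[0]), len(field[0][0]), len(field[0][0][0]))
    cells = [_cell(x, n) for x, n in zip((u, v, s, t), dims)]
    total = 0.0
    # Visit the 16 corners of the 4D cell that contains the query point.
    for corner in product((0, 1), repeat=4):
        weight = 1.0
        idx = []
        for bit, (i, f), n in zip(corner, cells, dims):
            weight *= f if bit else 1.0 - f
            idx.append(min(i + bit, n - 1))
        if weight:
            total += weight * field[idx[0]][idx[1]][idx[2]][idx[3]]
    return total


if __name__ == "__main__":
    # Hypothetical test data: a radiance function that is linear in all four
    # coordinates, which quadrilinear interpolation reproduces exactly.
    field = make_light_field(3, 3, 4, 4, lambda u, v, s, t: u + 2 * v + 3 * s + 4 * t)
    print(reconstruct(field, 0.5, 1.25, 2.0, 2.75))
```

Each query touches 16 stored samples, which hints at why the data volume and lookup cost of such a structure grow quickly with resolution.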

A Canned Light Source can easily be integrated into standard global illumination algorithms, e.g., ray tracing or radiosity. Due to the huge amount of data, speed-up techniques such as shadow maps or adaptive downsampling become mandatory for real-world applications. This image shows the illumination caused by a point source located at the focal point of a parabolic reflector.

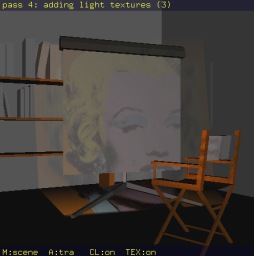

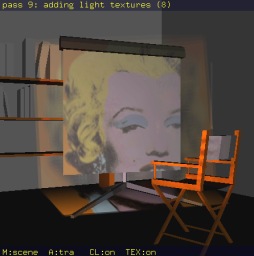

The next image shows a more complicated scene, which was rendered on a cluster of 32 Pentium II 300 MHz machines. It is an example of simulating a real-world slide projector.

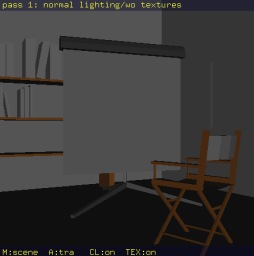

In addition to ray tracing, it is also possible to use graphics hardware to reconstruct the illumination caused by a Canned Light Source. In the following paragraphs we give a brief overview of how this algorithm can be implemented using the OpenGL graphics API. For further details see [1].

Since the four-dimensional Lumigraph can be interpreted as a two-dimensional array of two-dimensional images, we can use texture mapping to project the illumination into the scene, one image per rendering pass. The results of the individual passes can be combined using the accumulation buffer or alpha blending (with the blending operation set to GL_FUNC_ADD_EXT and the source and destination factors set to GL_ONE). This approach reduces the quadrilinear interpolation to a bilinear interpolation on the image plane (using GL_LINEAR for both texture coordinates) and a constant basis on the eye plane (by using fixed evaluation points on the uv-plane as centers of projection).
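The multi-pass scheme can be simulated in software to make the interpolation explicit. The sketch below is not OpenGL code and not the original implementation; it assumes, purely for illustration, that the uv-plane lies at z = 0, the image plane at z = 1, and that image coordinates are measured in texel units. The names `bilinear` and `accumulate_passes` are hypothetical. Each uv sample acts as a center of projection for its own image (the constant basis), the image is sampled bilinearly, and the passes are summed as additive blending with GL_ONE factors would do.

```python
from math import floor


def bilinear(image, s, t):
    """Bilinearly sample image[row][col] at texel coords (s, t); black outside."""
    rows, cols = len(image), len(image[0])
    if not (0.0 <= s <= cols - 1 and 0.0 <= t <= rows - 1):
        return 0.0
    c0 = min(int(floor(s)), cols - 2) if cols > 1 else 0
    r0 = min(int(floor(t)), rows - 2) if rows > 1 else 0
    c1, r1 = min(c0 + 1, cols - 1), min(r0 + 1, rows - 1)
    fs, ft = s - c0, t - r0
    top = (1 - fs) * image[r0][c0] + fs * image[r0][c1]
    bottom = (1 - fs) * image[r1][c0] + fs * image[r1][c1]
    return (1 - ft) * top + ft * bottom


def accumulate_passes(samples, point):
    """Sum the contribution of every uv sample at a surface point.

    samples: list of ((u, v), image) pairs, one per rendering pass.
    point:   (x, y, z) with z > 0, i.e. in front of the uv-plane.
    """
    x, y, z = point
    if z <= 0:
        return 0.0
    total = 0.0
    for (u, v), image in samples:
        # Intersect the ray from (u, v, 0) through the point with the plane z = 1.
        s = u + (x - u) / z
        t = v + (y - v) / z
        total += bilinear(image, s, t)  # additive blend of this pass
    return total


if __name__ == "__main__":
    ones = [[1.0] * 4 for _ in range(4)]
    passes = [((1.0, 1.0), ones), ((2.0, 2.0), ones)]
    print(accumulate_passes(passes, (1.5, 1.5, 1.0)))
```

The loop body runs once per uv sample, which mirrors the statement that the number of passes is tied to the uv-resolution. Distance and angle attenuation are deliberately left out of this sketch.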

Segal et al. [2] used projective texture mapping to simulate high-quality spotlights or slide projectors. Our algorithm can be seen as an extension of Segal's approach, since we also consider attenuation due to distance (quadratic fall-off) and angle (cosine term). This is done by modulating the texture's color with an additional spot light.
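The two attenuation terms can be written as a single scalar factor that multiplies the projected texture color. The sketch below assumes that the cosine term is taken between the surface normal and the direction toward the projection center; the text does not specify this, and the function name `attenuation` is hypothetical.

```python
from math import sqrt


def attenuation(light_pos, surface_pos, surface_normal):
    """Return cos(theta) / d^2 for a surface point lit from light_pos.

    theta is the angle between the surface normal and the direction to the
    light (an assumption of this sketch); d is the distance to the light.
    Surfaces facing away from the light receive zero.
    """
    to_light = [l - p for l, p in zip(light_pos, surface_pos)]
    dist_sq = sum(c * c for c in to_light)
    normal_len = sqrt(sum(c * c for c in surface_normal))
    if dist_sq == 0.0 or normal_len == 0.0:
        raise ValueError("degenerate light position or surface normal")
    dist = sqrt(dist_sq)
    cos_theta = sum(n * c for n, c in zip(surface_normal, to_light)) / (normal_len * dist)
    return max(cos_theta, 0.0) / dist_sq


if __name__ == "__main__":
    # Light two units above a surface that faces it directly.
    print(attenuation((0.0, 0.0, 2.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

In a multi-pass renderer this factor would be evaluated per uv sample, with that sample's position standing in for `light_pos`.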

This scene, which is now part of the color section in the new OpenGL Programming Guide (3rd edition), was rendered on an SGI Onyx2 Base Reality (resolution 512x512). Depending on the resolution of the Canned Light Source (the uv-resolution determines the number of rendering passes), this scene can be rendered at interactive frame rates.