416 Distributed Systems: Assignment 3

Due: Feb 1st at 9PM

Winter 2016

In this assignment you will learn about leader election and membership management. You will design and implement a leader election algorithm that builds on a simple key-value service. The leader will use the key-value service to track and advertise the set of active nodes in the system. Your implementation will be resilient to a few kinds of failure.

High-level overview

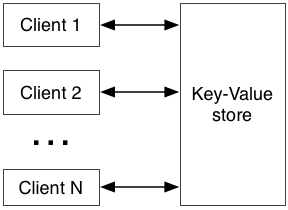

You will be provided with an implementation of a simple key-value

service that implements an RPC-accessible hash table in which keys are

associated with values. Nodes in the system should not communicate

with each other directly and must use this service for all

communication. The following diagram is a high-level view of the

setup in this assignment:

Your task is to implement node logic that allows an arbitrary set of active nodes to agree on a "leader" node. If the leader fails, the remaining nodes should elect a new leader. Once elected, the leader must determine the active nodes in the system and advertise this set to all the nodes in the system (through the key-value service). The set of active nodes may change (as nodes may fail or join the system) and the leader must re-advertise the node set to reflect these events. Active nodes should periodically retrieve this list of active nodes and print it out.

Individual keys in the key-value service may experience permanent unavailability. Your node implementation must be robust to such unavailability and continue to elect leaders that will properly advertise the set of active nodes.

Key-value service

The key value service associates string keys with string values. Think of it as a remote hash table, providing the same strong consistency semantics that you would expect of a local hash table. The service starts with an empty hash table: every key is mapped to the empty string "". The service supports the following three atomic operations via RPC:

- curr-value ← get(key)

- Retrieves the current value for a key. curr-value contains the current value associated with key, or is set to "unavailable" if key is unavailable.

- ret-val ← put(key, value)

- Associates value with key. ret-val is either "", which indicates success, or is set to "unavailable" if the key is unavailable.

- curr-value ← testset(key, test-value, new-value)

- Tests if the current value associated with key is test-value. If yes, then it associates key with new-value and returns new-value. Otherwise, it returns the value currently associated with key. curr-value is set to "unavailable" if the key is unavailable.

One of the arguments to the key-value service implementation is a key failure probability. This controls the likelihood of a key becoming unavailable during any one of the above three operations. Initially all keys are available. Once a key becomes unavailable, it is a permanent unavailability (i.e., until the service is restarted). A key's availability is independent from the availability of other keys in the key-value service. When a key is unavailable, the return value for an operation is always set to "unavailable".

Download the key-value service implementation and an example client that exercises the service. You will be notified of any changes to this posted code via Piazza.

Implementation requirements

- All nodes run the same code.

- All nodes communicate only indirectly, through the key-value service. This means that a node does not know which other nodes are participating in the system, how many there are in total, where they are located, etc.

- Given a sufficiently long time during which failures do not occur, an active (i.e., alive) node is eventually elected as a leader.

- Given a sufficiently long time during which failures do not occur, the elected leader will eventually advertise an accurate list of all active nodes in the system. And, each active node (including the leader) will retrieve the latest version of this list from the key-value service.

- Each node must continually print a listing of the set of active

nodes and the leader node id to stdout, one listing per line, in the

following format:

ID1 ID2 ID3 ... IDn

Where IDi is an active node's id and the first id in the list (i.e., ID1) indicates the id of the current leader node. The other active node ids in the listing do not have to be appear in any particular order. Note that it is sufficient to print a new listing whenever the leader/active nodes information changes. - Your implementation must be robust to node halting failures, including leader halting failures.

- Your implementation must be robust to nodes that restart (i.e., halt and later re-join the system with the same identity).

- Your implementation must be robust to varying RPC times.

- You cannot change the implementation of the key-value service. I gave you the key-value service code so that you can experiment with it locally. But, your solution should work even if I administer the key value service (i.e., you don't have control over the service).

Assumptions you can make

- The key-value service does not fail/restart and does not misbehave.

- No network failures.

- Each node has a unique identifier (specified on the command line).

- The key-value service is dedicated to nodes in your system (one KV-service per student group).

Assumptions you cannot make

- Nodes have synchronized clocks (e.g., running on the same physical host).

Solution spec

Write a go program node.go that implements a node in the system, as described above, and has the following usage:

go run node.go [ip:port] [id]- [ip:port] : address of the key-value service

- [id] : a unique string identifier for the node (no spaces)

What to hand in:

- A writeup (at most one page long) that describes your design and how it satisfies the above requirements. The writeup should be in .pdf format and should appear at top-level as design.pdf file in your repository.

- Your implementation of a node in the system as node.go.

Advice

- Be methodical, spec out the core features you need to build and implement them separately. First, ignore key unavailability and design a leader election algorithm that is not robust to node failures and only works with a constant group of nodes. Then, extend the algorithm to handle leader failures and joining nodes. Finally, implement active node tracking/advertising, and at the very end make all of these robust to key unavailability.

- Most of the mark will be based on the functionality of your code without key unavailability. Therefore, work towards a complete solution before extending it to work with key unavailability.

- The writeup is a key deliverable. Dedicate time to do a good job on cogently describing your design.

- Develop a suite of scripts to test your implementation in both the failure-free case and in the case where a variety of failures occur. Here are some examples:

- join, elect, advertise, join, re-advertise: One node starts, it is elected the leader and it advertises a list of the one node (itself). A new node joins and the leader advertises a list of two nodes. No key unavailability.

- join x 2, elect, advertise: Two nodes start, one is elected a leader, the leader advertises a list of the two nodes. No key unavailability.

- join x 3, elect, leader-fail, elect, advertise: Three nodes start, one is elected a leader, the leader advertises a list of the three nodes. The elected leader fails, a new node is elected a leader, and the new leader advertises a list of the two remaining active nodes. No key unavailability.

- join x 3, elect, advertise, non-leader-fail, re-advertise: Three nodes start, one is elected a leader, the leader advertises a list of the three nodes. A non-leader node fails. The leader advertises a list of remaining two nodes. No key unavailability.

- join x 2, elect, advertise, key-fail, re-advertise: Two nodes start, one is elected a leader, the leader advertises a list of the two nodes. Keys used to advertise the nodes are made unavailable by the key-value service and the leader re-advertises the same set of nodes using new keys.

- Make sure that the code you hand in does not generate any stdout/stderr output except the specified output above. We rely on this output in marking your solutions.

Rough grading scheme

Approximate percentages for different aspects of the solution:

- 25%: Solution has working leader election and nodes advertisement algorithms that work without node failures and without key unavailability.

- 25%: Solution handles node failures (including leader failures).

- 10%: Solution handles nodes that restart (rejoin with identical IDs).

- 30%: Solution handles key unavailability.

- 10%: The writeup cogently describes the design and how it meets the requirements set out above.

Make sure to follow the course collaboration policy and refer to the assignments instructions that detail how to submit your solution.