Teaching Cooperation in Multi-Agent Systems with the help of the ARES System

Melissa Bergen, Jörg Denzinger, Jordan Kidney

Department of Computer Science, University of Calgary


ABSTRACT

In this paper we present the Agent Rescue Emergency Simulator (ARES) system, a simulation environment for agent teams developed and implemented by students as part of an introductory multi-agent systems course. ARES puts the students' agents into a disaster area with the task of finding and rescuing survivors. While ARES abstracts away many details of a true disaster scenario, it still provides an environment that beginners can enjoy working with. Moreover, ARES gives an instructor enough variations to focus on different aspects of cooperative behavior. These variations also make it possible to change the basic world of the assignment from semester to semester, which avoids "reuse" issues.

Keywords: Multi-Agent Systems, Cooperation, Simulation, Teaching tool, Disaster rescue


1. Introduction

In the past few years, multi-agent systems (MAS) have become a very active research area with connections to many other areas, both inside and outside of Computer Science. Consequently, courses about MAS (see http://www.AgentLink.org/resources/teaching-db.html) are starting to be developed and even the first textbooks are on the market. Perhaps even more than in other areas of Computer Science, teaching MAS has to involve practical experience for the students. Since the interaction of agents holds many surprises (similar to the interaction of human beings), students need hands-on experience with issues like the timing of actions to achieve cooperation, communication and its effects, or (unpredictable) changes in the surroundings. Such experience in turn allows students not only to understand the basic problems at hand but also to learn why certain concepts are the way they are.

In order to gain practical experience with developing multi-agent systems, students need an environment (or testbed) in which their agents interact, which sets the basic rules for the agents and guards these rules against violations by the agents. Many such environments are available, such as the RoboCup Simulation League Soccer Server or the TAC Game Servers of the Trading Agent Competition (see [Wellman et al. 2001]). However, the goals behind the development of these environments do not completely agree with the goals of a teaching environment. To begin with, having successful systems available via the web allows for a lot of cheating (and thus requires the instructor to spend a lot of work on countermeasures). This in turn can impede the experiences that students are supposed to make. Also, the two cited environments are rather specialized, so that certain experiences are outside of their scope. There are many other didactic reasons for not using testbeds that were developed and are used to evaluate research systems, as we will see later in this paper.

An alternative to these environments is the ARES system (Agent Rescue Emergency Simulator), which is intended to be a testbed for multi-agent systems and to be used for teaching MAS. ARES follows the lead of the RoboCup Rescue Initiative in choosing the scenario of rescuing survivors in a disaster zone. The basic task that students are given is to design agents that rescue survivors in the ARES system by locating them and removing rubble to reach them. ARES allows for many different variants of the basic setting, such as varying the information the agents have when starting, the cost of communication, the methods for agents to regain energy, and so on. While many basic requirements of acting in a real disaster scenario are touched upon, they are simplified within ARES towards a game-like scenario. In this scenario, groups of students work together to develop agents in ARES during the four-month timeline of the MAS course.

This paper is organized as follows: After this introduction, we take a closer look in Section 2 at the requirements on a testbed for MAS (or MAS concepts) that is aimed at helping in teaching MAS basics. This then leads to stating our goals in developing ARES. In Section 3, we present the system using two different views: the view of a student using ARES and the view of an instructor configuring ARES for his/her course (some information about the implementation of ARES is also provided in this section). In Section 4, we present observations we made when using ARES for teaching MAS to a mixed class of graduate and undergraduate students at the University of Calgary. Finally, we conclude with some remarks on future work regarding ARES.

2. Our Goals in Developing ARES

The term agent is used by many people in many different areas. Even if we look at its uses only in Computer Science, we still see numerous different definitions of it. In fact, as pointed out in [Stone and Veloso 2000], the only thing people agree upon regarding the definition of an agent is that they do not agree. If we want to develop an agent testbed to enhance teaching MAS, then this problem is less severe (the agents will be defined by the students based on the different definitions presented to them in the lectures). Nevertheless, there are two extremes regarding multi-agent environments: cooperating agents with a common goal and competing agents with rather different goals. While we present concepts and examples for both extremes and some in-between settings in our course, we nevertheless feel that for the assignment of the course (which is implementing a multi-agent system acting in our testbed) we have to decide between a common goal for all agents and selfish agents. Since at the moment we see more to be gained by developing very cooperative systems, we have chosen the common goal, which is also the situation the student teams themselves are in (or should be).

In deciding which general problems the agents (developed by the students) have to solve cooperatively, we did not have to look far. The reasons laid out in [Kitano 2000] by the RoboCup Rescue initiative are very good and convincing, and the idea of robots taking on risky tasks in dangerous environments has been a common goal since the beginning of Robotics (and even before, if we look into Science Fiction). For determining precisely what tasks we want to have in our testbed, there were a lot of things to consider from a didactic/teaching point of view:

- Teams of students have to be able to implement a team of agents acting in our testbed (i.e. the ARES system) within the four months that the basic course in MAS takes to teach. We must also assume that these students know only how to program in general (and that they take the course concurrently with working in/with ARES).
- We want the students to apply the basic concepts they are supposed to learn in the course. This means that care must be taken to avoid a large emphasis on implementation tricks and fine-tuning of the agents (although we will never be able to totally avoid this).
- The ARES testbed and the course requirements have to be easy to handle for students. This means that there has to be enough detail to make the tasks reasonable, but also enough abstraction and simplification to allow students to easily grasp the application area and the "laws" (or rules) of the world in which the agents act.
- Naturally, motivation is a big factor here. Our first three points highlight the importance of student motivation in that, if they are not fulfilled, the students' motivation will suffer. But there are other factors that can affect motivation. One important factor is not to use testbeds for competitions of research systems, especially if these competitions have already been held several times. Naturally, the research systems that are successful in these competitions are the results of a lot of effort by the groups producing them and they often reflect a lot of experience gained in earlier competitions. Systems by students taking their first steps in MAS will not produce results that even come close to the competition winners. Also, making not-so-obvious mistakes is a good learning experience.
- On the other hand, having the student teams compete with each other is often a plus with respect to motivation (especially if the competition is not about grades but something else; although the best system should be guaranteed an A).
- Finally, for an instructor it is very important that there are variations in the assignments for a course over the semesters. We are not only talking about avoiding cheating, although obviously this has to be a concern, but also, again, about motivation and having a research experience. If each class has to deal with a different "world" their agents act in, then different tasks under different laws governing it have to be implemented. This can foster the development of new strategies by the students, since they are in a research-like environment.

In addition to these didactic requirements and goals for our testbed, there are requirements with regard to the MAS topics that we want the students to learn by implementing agents acting within the testbed:

- As already stated, we want the students to learn about cooperation of agents. This means they should have to deal with the problem of planning in and for a MAS, together with cooperative decision making and finding a good organization.
- Communication and its costs are an extremely important factor in many multi-agent systems. Very often the concrete communication costs (including the amount of processing needed to understand what is communicated to an agent) decide which cooperation concept is best suited for a certain application. Students have to become aware of this. In our testbed, we want to be able to vary communication costs as part of the world laws.
- As seen in a rescue scenario and many other applications, acting in real-time is a basic requirement. Unfortunately, full real-time requirements make the systems much more complex and turn the development focus to subjects not at the core of MAS. Therefore we have to ignore the issue of a real-time implementation.
- Nevertheless, we think that some real-time aspects should be included in the students' task. Therefore, we organized the world within ARES as a sequence of turns (points in time, events, or moves) where each turn stands for simultaneous actions by all agents. For their decisions about what to do in such a turn, the agents have only a limited amount of computing time available. This means that they have to balance working on the task at hand (i.e. deciding about the next action) and long-term planning.

Our goal in developing ARES was to fulfill the requirements stated above to the highest degree possible. With this in mind, the basic setting that ARES presents to the agents is a search and rescue mission. In each particular scenario, a certain number of dying survivors are placed on a grid world. In most cases many of them are buried under layers of rubble, where each layer may require the combined effort of several of the agents to be removed. The goal for the agents is to rescue as many living survivors as possible within a given amount of time (i.e. a given number of turns). The rescue agents developed by the students are given several such grid worlds (world scenarios) and the grading and winner determination is based on the performance in all these example worlds.

3. The ARES Simulator System

As already stated in Section 2, the ARES simulator system is a multi-agent testbed that puts a group of agents (that supposedly control robots) with the same capabilities into a world that is an urban disaster zone. In essence, we envision a city struck by an earthquake. The world is structured into grids, each of which describes the potential zone of direct influence of a single agent. The current system version has:

- Instant killer grids (think of them as holes our agents can fall into and vanish)
- Fire grids (that might spread in later versions)
- Recharging grids
- "Normal" grids consisting of a stack of rubble layers and survivors.

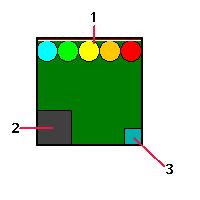

The figure above shows the graphical representation of a normal grid (as presented by the world viewer, see later). The depicted grid is occupied by five rescue agents (1). The field (3) indicates, in a color code, the amount of energy agents have to spend to move onto the grid, while the field (2) represents what is at the top of the grid stack (which in the figure is rubble). The goal of the agent team is to rescue as many survivors as possible in a given number of turns.

Starting with some initial information about the world (that is more or less accurate), the agents have to perform a search and rescue operation where cooperation is more or less part of both search and rescue. The need for cooperation is especially imperative during the rescue stage, as some pieces of rubble require more than one agent to be removed. In addition, the need for speed in the rescue stage is critical, since the number of turns to operate is limited, and the survivors weaken with every turn.

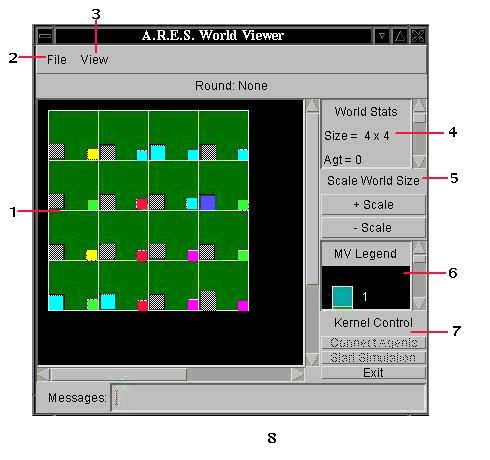

With respect to interaction with a human user, the ARES simulations are represented in a world viewer. This allows one to see the run of a team of rescue agents reacting to a given world scenario (from different perspectives) and to define world scenarios (see Section 3.2). The figure above shows a screen shot of the world viewer depicting a world scenario. The grid world is displayed as (1). The file menu (2) can be used to read and store a world scenario, as well as to display previous runs of some rescue agents from a stored protocol file. The view menu (3) allows one to modify the view in various ways, such as to view the layers of rubble and survivors on a specific grid, or to follow a specific agent.

On the right side of the viewer are fields with additional information and buttons influencing runs of agents in ARES. The world statistics field (4) provides, as the name says, statistical information about the current scenario, such as the initial number of survivors, the number of living survivors, or the number of saved survivors. We are also informed about the agents themselves, such as how many are still active and how many are not alive anymore. The other information field is the move cost legend (6) that displays the color codes for the amount of energy it will cost an agent to move onto a particular grid. The scale buttons (5) can be used to increase and decrease the scale of the display of the world scenario. The buttons Connect Agents and Start Simulator (7) are used, as their names suggest, to connect the rescue agents to ARES and to start a run. Finally, the viewer displays any error messages in the messages field (8) at the bottom.

In the following subsections, we provide more details about ARES from three different perspectives. First, we describe the perspective of a student who is taking a basic MAS course and has to implement, together with his/her teammates, agents that run in ARES. Then we take the perspective of an instructor and look at the world features ARES allows one to manipulate in order to set different foci for courses. Finally, we take a short look at the implementation of ARES itself and provide information that, together with the documented source code, should allow people to modify ARES to include additional features or additional feature instantiations. For more detailed information, see [Bergen et al. 2002]. ARES can be obtained here.

3.1 The student view

For a student, the assignment starts with downloading and installing the ARES system on his/her team's account. At the moment, ARES runs only under UNIX (more precisely Sun OS) and has both C++ and Java components. From the point of view of ARES, the agents the student teams develop are processes that the ARES Kernel communicates with. As a result, agents can be implemented in whatever programming language the students wish to use. However, the students must take into account that ARES interrupts and restarts the agent processes in order to limit the amount of processor time available to an agent each turn. A certain efficiency of the agents regarding processing time is therefore definitely required, and some programming languages might make this more difficult than others.
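Because the processor time per turn is limited, an agent benefits from a decision procedure that can be cut short and still return a usable action. The following is a minimal sketch of that idea and not part of ARES: the function name choose_action, its parameters, and the use of an evaluation count as a stand-in for processor time are all assumptions made for illustration.

```python
def choose_action(candidates, evaluate, budget, fallback=None):
    """Pick the best-scoring action among the first `budget` candidates.

    candidates: iterable of possible actions (hypothetical representation)
    evaluate:   function mapping an action to a numeric score
    budget:     maximum number of evaluations (stand-in for processor time)
    fallback:   action returned if no candidate could be evaluated
    """
    best_action, best_score = fallback, float("-inf")
    for used, action in enumerate(candidates):
        if used >= budget:
            break  # budget exhausted: keep the best action found so far
        score = evaluate(action)
        if score > best_score:
            best_action, best_score = action, score
    return best_action
```

With a small budget the agent settles for the best candidate seen so far, while a larger budget lets it consider more options before the turn ends.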

Students must also be aware of the flow of communication in ARES. In addition to the fact that ARES can send messages to an agent after each turn, the agents can also send messages back to ARES. In general, most messages sent from ARES to the agents (after action commands are sent from the agents to ARES) contain, in addition to other information, information about an agent's surroundings. This surrounding information tells an agent the type and layers of the grid it is standing on (and the coordinates of the grid in the grid world). Additionally, for all neighboring grids it reveals accurate information about the grid type, the accumulated life energy of all survivors on this grid and what is at the top of the stack of layers on the grid.

ARES accepts from agents the following commands/messages:

- CONNECT
- SEND_MESSAGE(receivers, msg-size, message)
- MOVE(direction)
- TEAM_DIG
- SAVE_SURV
- SLEEP
- OBSERVE(coordinates)

The first action that an agent must perform is to send the CONNECT command to the ARES system in order to tell it that it will participate in the run. An agent can then send a message to other agents by using the SEND_MESSAGE command, where ARES allows any bit-string as the message text. This gives the students total freedom in developing the communication language of their agents; in particular, they can transmit all kinds of data in the agents' internal formats. The receivers of the message are listed in the receivers list, which may name one, some or all other agents. An agent can MOVE in any direction to a neighboring grid, but this results in the loss of a certain amount of energy (depending on the move cost associated with the grid it moves to). Since an agent only receives information about the current grid and its direct neighbors from ARES, it can also send the OBSERVE command to observe a grid farther away. The quality of the information resulting from this action depends on the distance between the current grid and the observed grid, so that agents have to deal with vague and even incorrect information (see also later).

Because the main goal of the agents is to rescue survivors, they first have to dig them out by sending the TEAM_DIG command as a (sub)team and then save the survivors with SAVE_SURV. Since rubble might require several agents to remove it, ARES will remove the top piece of rubble on a grid only if at least the necessary number of agents (indicated by the piece of rubble) stand on the grid and perform the TEAM_DIG command in the same turn. If a survivor or a survivor group surfaces to the top of the stack of the grid, then one agent has to perform the SAVE_SURV action to get them to safety.
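The TEAM_DIG rule can be illustrated with a short sketch. This is not the ARES implementation: the classes Rubble and Grid, the function resolve_team_dig, and the representation of a grid as a Python list whose last element is the top of the stack are assumptions chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Rubble:
    agents_needed: int  # number of agents required to remove this piece


@dataclass
class Grid:
    # Stack of layers; the last element is the top of the stack (assumption).
    layers: list = field(default_factory=list)


def resolve_team_dig(grids, dig_positions):
    """Apply all TEAM_DIG commands issued in one turn.

    grids:         dict mapping (x, y) coordinates to Grid objects
    dig_positions: one (x, y) entry per agent that sent TEAM_DIG this turn
    Returns the coordinates of the grids where rubble was removed.
    """
    diggers = Counter(dig_positions)  # agents digging per grid
    removed = []
    for pos, count in diggers.items():
        grid = grids.get(pos)
        if grid is None or not grid.layers:
            continue
        top = grid.layers[-1]
        # Rubble is removed only if enough agents dig in the same turn.
        if isinstance(top, Rubble) and count >= top.agents_needed:
            grid.layers.pop()
            removed.append(pos)
    return removed
```

In this sketch, two agents digging on a grid whose top layer needs two agents remove it, while a single agent digging on a grid that needs three achieves nothing in that turn.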

Regarding survivors, it should be noted that they start out with a certain amount of "life energy" and lose some of this energy every turn. If the energy level of a survivor goes to zero or below, this survivor dies. In contrast, the agents can replenish their energy by performing the SLEEP action (whether they can do this everywhere or only on the recharging grids depends on the world rules).
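A minimal sketch of these energy rules follows. The names Survivor, end_of_turn and sleep, the fixed loss per turn, the size of the energy gain, and the choice that a SLEEP in a disallowed place simply has no effect are assumptions; the paper does not specify these details.

```python
from dataclasses import dataclass


@dataclass
class Survivor:
    life_energy: int

    @property
    def alive(self):
        # A survivor dies once the energy level is zero or below.
        return self.life_energy > 0


def end_of_turn(survivors, loss_per_turn=1):
    """Living survivors lose some life energy every turn (amount assumed)."""
    for survivor in survivors:
        if survivor.alive:
            survivor.life_energy -= loss_per_turn


def sleep(agent_energy, gain, on_recharging_grid, sleep_anywhere):
    """Return the agent's energy after a SLEEP action.

    sleep_anywhere models the world rule: if False, SLEEP only helps on a
    recharging grid (here it is assumed to have no effect elsewhere).
    """
    if sleep_anywhere or on_recharging_grid:
        return agent_energy + gain
    return agent_energy
```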

The messages that come back from ARES to the agents mirror mostly the actions that the agents can perform:

- CONNECT_OK(ID,energ-level,location,file)
- UNKNOWN
- DISCONNECT
- FWD_MESSAGE(from,msg-size,receivers,message)
- ROUND_START, ROUND_END
- MOVE_RESULT(energ-level,surround-info)
- SLEEP_RESULT(energ-level)
- OBSERVE_RESULT(energ-level,grid-info)
- TEAM_DIG_RESULT(energ-level,surround-info)
- SAVE_SURV_RESULT(energ-level,surround-info)
- DEATH_CARD

CONNECT_OK acknowledges that the agent is connected to ARES and assigns to it an ID, an energy level and its start location. Additionally, it tells the agent in which file it can find some initial world scenario information. ARES sends out the DISCONNECT command when it shuts down after a run. Messages that agents send to other agents are forwarded by the use of the FWD_MESSAGE command, which in addition to the message "text" tells the agent which other agent the message is from and who else got the message. ROUND_START and ROUND_END are used to tell an agent when it can start computing for the next round and when the time for computation is over. If an agent sends several commands to ARES during this computation time, ARES executes only the last command it received. The exception is free communication: in that case ARES also forwards all messages that were sent by the agent.
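The handling of several commands within one round can be sketched as follows. This is an illustration under one reading of the rule, not the ARES Kernel code: the function select_turn_commands, the (name, payload) tuple format, and the interpretation that under free communication the last non-message command is the one executed are assumptions.

```python
def select_turn_commands(received, communication_free):
    """Decide what is done with one agent's commands for one round.

    received:           list of (name, payload) tuples in arrival order
    communication_free: True if the world rule makes communication free
    Returns (action_to_execute, messages_to_forward).
    """
    if not received:
        return None, []
    if communication_free:
        # All messages are forwarded; the last other command is executed.
        messages = [c for c in received if c[0] == "SEND_MESSAGE"]
        actions = [c for c in received if c[0] != "SEND_MESSAGE"]
        return (actions[-1] if actions else None), messages
    # Communication counts as an action: only the last command survives.
    last = received[-1]
    if last[0] == "SEND_MESSAGE":
        return None, [last]
    return last, []
```

Under costly communication, an agent that sends a message last in a round therefore gives up its move for that turn.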

In addition, for all commands sent by any agent to ARES that involve an action, ARES will reply with a message that informs the agent of its new energy level (as all actions use energy or, in the case of SLEEP, result in a gain of energy). For those actions that change the world (moving, removing rubble or survivors), ARES will also send back the surrounding information explained above. When an agent dies, ARES shuts out the agent by sending it the DEATH_CARD message. Finally, ARES also has a very generic error message, UNKNOWN, that tells an agent that its last command could not be recognized. If a command is understood but cannot be executed (for example, a move out of the grid world), then ARES simply does not execute the command and the agent loses this turn. There is no error message in this case.

3.2 The instructor view

From the instructor's point of view, the relevant components of ARES concern the generation of world scenarios and the observation of the performance of a team of rescue agents developed by a team of students. For the generation of world scenarios, some general world rules are required. These rules will be common to all scenarios and they are told to the students at the beginning of the course so that they can focus their development on these world rules.

As already stated in Section 2, being able to have different world rules in different semesters has a lot of advantages. Therefore ARES consults the so-called world_rules file that the instructor has to distribute in addition to the standard ARES distribution. So far, an instructor has the following world rules for which he/she has to choose an instantiation:

- Communication cost: Either a communication action is treated like every other action, which means that communicating does not leave room for any other action by the agent in that turn, or ARES forwards every message by an agent to the intended receivers and also allows the agent to perform one of the other possible actions each turn.
- Distortion of initial world: Obviously, after a disaster has struck, some of the knowledge from before the disaster occurred can give one an idea about where there may be survivors. Therefore the agents acting in ARES are initially given a "map" of the world scenario that has some resemblance to reality (via the CONNECT_OK message). ARES takes the real world model and distorts it (by randomly changing numerical values, like accumulated life energy per grid, within a given limit) to produce this map. How much distortion takes place (i.e. how big the limits are) can be defined by this world rule, which thus influences the initial planning capability of the agent team.
- Charge vs. Sleep: Since agents lose energy during every turn, there must be a way to replenish this energy. In ARES, we can select from two different ways of doing this: charging at a charging grid or sleeping on an arbitrary grid. If agents can only charge at a charging grid, long-term planning of actions is much more important. This is because agents that do not reach a charging grid with the energy they have will die and be out of the run. Using the sleep option as the world rule allows for more reactive planning without much need for a long-term perspective.
- Maximum lifting requirement: The number of agents necessary to lift and remove a piece of rubble has quite an impact on the strategies that agents use (as was demonstrated in our first usage of ARES in a multi-agent course, see Section 4). If all agents might be needed, they should not get too far apart. On the other hand, separating agents may allow for more diversified plans. Therefore we allow an instructor to set a limit for the number of agents needed for lifting that will be observed in all scenarios that the agent teams are facing.
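To make the four world rules concrete, the following sketch groups them into one structure and shows one possible way to distort a map. It does not reflect the actual format of the world_rules file: the class WorldRules, its field names, the functions distort and make_initial_map, and the reading of the distortion limit as a relative fraction are all assumptions.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class WorldRules:
    communication_is_action: bool  # True: sending a message uses up the turn
    distortion_limit: float        # assumed relative, e.g. 0.05 for 5 percent
    sleep_anywhere: bool           # False: energy only at charging grids
    max_lifting_requirement: int   # upper bound on agents needed per rubble


def distort(value, limit, rng):
    """Change a numerical value randomly by at most +/- limit (a fraction)."""
    return value * (1.0 + rng.uniform(-limit, limit))


def make_initial_map(life_energy_per_grid, rules, rng):
    """Produce the distorted map handed to the agents at the start."""
    return {
        pos: distort(energy, rules.distortion_limit, rng)
        for pos, energy in life_energy_per_grid.items()
    }


# Example using the rule values reported in Section 4 of this paper.
course_rules = WorldRules(
    communication_is_action=True,
    distortion_limit=0.05,
    sleep_anywhere=True,
    max_lifting_requirement=5,
)
initial_map = make_initial_map({(0, 0): 100.0}, course_rules, random.Random(0))
```

Passing an explicit random.Random instance keeps a generated map reproducible, which is convenient when the same scenario has to be handed to several teams.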

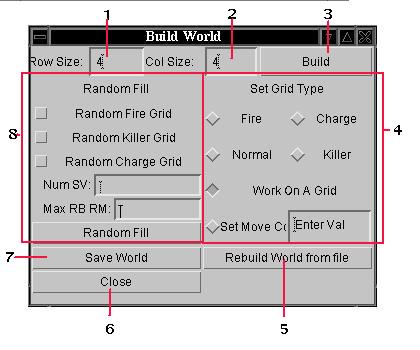

After having defined the common rules for all world scenarios, different scenarios are required to avoid having the students do some over-optimization (which with only a few scenarios is possible, by simply encoding optimal strategies for each of the scenarios). For creating a world scenario, ARES provides an instructor with a graphical interface (and this interface, as part of the world viewer, is also available to students for testing particular aspects of their agents). The figure above this paragraph shows a screen shot of the additional graphical interface that deals with building a world.

A new world scenario can either be built out of another scenario (by using (5) to load this other scenario, which can then be modified) or it can be generated randomly (with an "empty" scenario being an additional option). For a randomly generated world scenario, the instructor fills out (1) and (2) to define the size of the world. The check-boxes in (8) define whether fire grids, killer grids and charge grids should already be created (if not checked, the instructor has to create them individually later, if needed). Also in (8), it is possible to define the number of survivors that are distributed (randomly, of course) among the layers of the stacks of all grids, and the maximal number of agents needed to remove any piece of rubble in the world (which naturally has to be lower than the value defined in the world rule; we found it a good idea to have some world scenarios that are "easier" in this regard than others to see if the students plan for it). By clicking on (3) and then on RandomFill, the random world scenario is created. A world scenario can be saved using (7), and the world building interface is closed by clicking on (6).

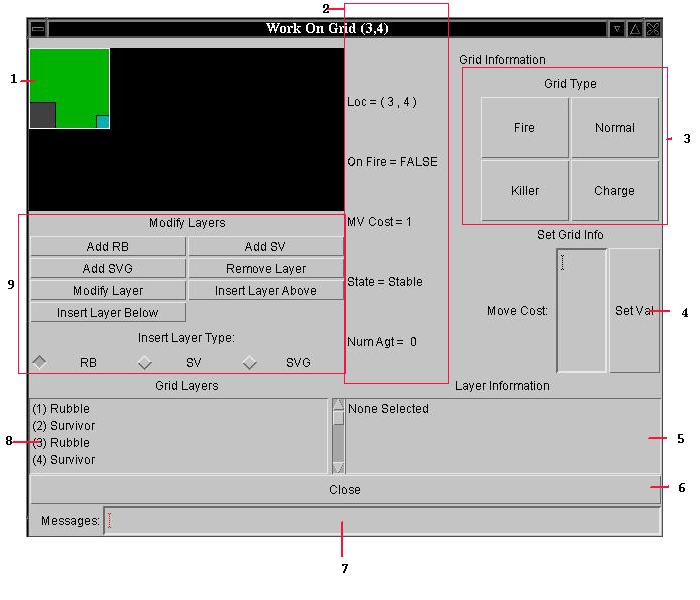

Naturally, an instructor also has to be able to edit individual grids (of a randomly generated scenario, an already existing one, or an "empty" one). To do this, he/she clicks on the grid in the world viewer and then uses (4) in the world building interface. Clicking on "Work On A Grid" opens an additional window that displays the stack of layers of this grid and allows one to edit it. This additional window is depicted in the figure above this paragraph.

The selected grid's graphical representation in the world viewer is repeated in (1). (2) then gives a more detailed textual description of this grid. (3) allows one to change the type of the grid, while in (4) we can change the move cost this grid takes from the energy of an agent moving onto it. (8) displays the layers of the grid and, if one of these layers is clicked on, (5) displays detailed information about that layer. The buttons in (9) modify the stack of layers of the grid. The Add buttons add a layer of the indicated type on top of the stack. After pressing one of them, an additional window asks for details about the layer to add. The other buttons require a layer to be selected in (8). Remove Layer just removes the selected layer, while the other three buttons again open an additional window for entering details about the layer (to add or modify). As usual, we have a button for closing the window (6) and a message area (7).

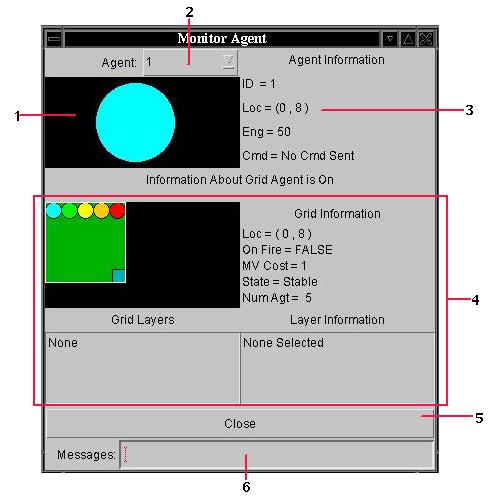

Another important feature of ARES is its ability to evaluate and compare the results of the agent teams produced by the students. After tests have been run, the world viewer can be used to replay the events that occurred during the simulation. This replay can be viewed in different modes, such as a slow or fast animation or turn by turn, and with a focus on an individual agent. The students hand in the code for their agents (and a paper describing the ideas that they implemented) and the tests can be run as batch jobs overnight. Then all or selected runs can be looked at using the generated protocols. The picture above this paragraph shows the window that allows one to focus on a particular agent.

This window has to be seen as an addition to the usual world viewer window. At (1), it shows the agent so that the color can be used to identify it in the world viewer window. (2) allows one to select which agent to observe, and (3) provides the actual information about it, such as its energy level. (4) shows information about the grid the agent is currently standing on and its layers, similar to the information in the "Work On A Grid" window. (5) and (6) are the usual close window button and the message area.

3.3 Some implementation details

Due to the well-known software engineering advantages, ARES itself has also been designed as a multi-agent system, consisting of the Kernel agent, the WID agent and the viewer agent. Each agent is a separate process and the agents communicate using UDP sockets. All processes run on the same computer. The Kernel handles all connections to the agents written by the students: it receives their actions/messages and in return sends back the results of these actions/messages. The WID agent (World Information Database agent) handles the simulation of the world scenarios. Triggered by the Kernel agent, the WID modifies the current world scenario state based on the actions it receives. Additionally, the Kernel makes sure that only the last action submitted by a student agent is forwarded and, depending on the world rule, also the messages. The viewer agent also communicates with the WID agent in order to get the information necessary to display the world state. With the ability to view the statistics and activities of multiple agents in separate windows, the viewer has proven to be a valuable tool for analysis.

At the moment, ARES provides much flexibility in our teaching. However, in the future we plan to add more features to the system. The internal architecture of ARES and our implementation allow for easy addition of new objects to the world or of additional actions/commands for the rescue agents. The division between the Kernel and WID agent also allows for easy integration of simulations for changing the world into the WID agent (for example, to achieve spreading fire).

4. Experiences with ARES

ARES was used in the major assignment of our multi-agent course. This assignment required students to develop and implement a number of agents that worked together as a team. The goal of each team of agents was to rescue as many survivors as possible in different world scenarios in ARES. In the course, each team of students was given the task of developing a team strategy for five agents. In order to prepare the students for this, each student was given one of five general cooperation ideas from the literature (and a paper describing it to start with) and he/she had to produce a midterm paper describing the idea in general and suggesting ways in which this idea could be employed by the agents acting in ARES. Each student in a team was given a different cooperation idea out of the following list:

- Cooperation by giving out selected information

- Cooperation using blackboards/shared memory

- Cooperation via a Master-Slave Approach

- Cooperation using Negotiations

- Cooperation using Market Mechanisms

Not only did these papers encourage students to start thinking about the task early in the course, they also exposed students to the idea of collaboration. Since each member of a group was assigned a different topic, a diversity of knowledge was encouraged and discussions among the students became necessary.

The world rules that we chose were as follows:

- Communication was made expensive by treating the SEND_MESSAGE command as an action like all the other commands.

- We distorted the initial world map by 5 percent.

- We allowed the agents to replenish their energy by sleeping whenever they wanted.

- The maximum lifting requirement (the number of agents needed to lift) was set to 5.

The agents received one second of processor time per turn for determining their move (and whatever other computations they wished to do). For testing purposes, the students were given three randomly generated world scenarios and naturally they could create their own scenarios. At the end of the semester, we evaluated the rescue agent teams of the students using the randomly generated world scenarios and several other "pathological" world scenarios (not known to them before) created to test some abilities of individual agents and teams. Such tests were, for example,

- testing the navigation abilities of the agents/teams, by locating the survivors within an area surrounded by fire and allowing a seemingly easy and short path (that costs a lot of energy) and a longer path (that consumes much less energy when taken),

- testing the decision making of the team, by placing survivor groups of different sizes at different distances from the agents, with the larger groups being farther away and a rather strict time limit, so that rescuing a larger group becomes impossible if the team stops to rescue a small group, or

- testing the usage of the initial world information, by taking the last test scenario and additionally giving the survivors in the larger survivor groups less life energy, so that in the initial world information small and large groups have approximately the same amount of life energy.

Given the results of the first use of ARES in a course (and following suggestions by some of the students), future tests will include world scenarios that require the agents to spread out in the world, because only a few agents are needed to rescue any of the survivors.

Overall, the student teams produced rather different agents and team strategies. The rescue team of each student team was the best in at least one world scenario, but one team was best in most scenarios. In this most successful implementation, all five agents act as one "super-agent". At the start of a run, all agents use the world information to find the highest concentration of survivors (resp. life energy), which determines the initial meeting spot, and they rescue survivors on the way if possible (i.e. if a single agent can remove the rubble). After the agents have joined up, they stay together and always move to the nearest grid with a sufficiently high concentration of survivors. The agents use Dijkstra's algorithm to find the shortest path between a start and a destination grid and, since they all have the same information, very little communication is necessary. In fact, the agents communicate their start positions to each other at the beginning, and later they only communicate if one agent is low on energy, so that all agents stop and sleep until this agent has sufficient energy again.
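The path planning described above can be sketched as follows. This is an illustration only, not the students' code: the function name `cheapest_path`, the representation of the world as a matrix of energy costs for entering each grid, and the restriction to four movement directions are assumptions made for the example.

```python
import heapq


def cheapest_path(move_cost, start, goal):
    """Dijkstra's algorithm on a grid world.

    move_cost[r][c] is the (assumed) energy needed to enter grid (r, c).
    Returns (total_cost, path) or None if the goal cannot be reached.
    """
    rows, cols = len(move_cost), len(move_cost[0])
    best = {start: 0}          # cheapest known cost to reach each grid
    parent = {}                # predecessor on the cheapest known path
    frontier = [(0, start)]    # priority queue ordered by cost so far
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry, a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + move_cost[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return best[goal], path[::-1]


# The direct route crosses an expensive grid; the detour is cheaper.
world = [
    [1, 9, 1],
    [1, 9, 1],
    [1, 1, 1],
]
print(cheapest_path(world, (0, 0), (0, 2)))
```

Because the computation is deterministic, agents that start from the same world information and the same start and destination grids arrive at the same path without exchanging messages, which is what makes the low-communication strategy workable.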

Obviously, the students tailored their agents well to the chosen world rules: communication is expensive, so try to minimize it. Since the initial world information is rather accurate and common to all agents, base your decisions on it so that you do not have to communicate. And because all agents might be needed to dig out survivors, keeping them together suggested itself. Equally obviously, because we can change the world rules, we can define changes that make this particular setup fail. It should also be noted that the students of the best rescue team did not make use of the OBSERVE action. Together with the fact that the agents did not scout around, this meant that they had no data about how weak survivors were getting. So, if one grid held a single very strong survivor and a grid farther away held several weak survivors with the same accumulated life energy, the rescue agents would first go to the nearer strong survivor and might thereby lose the survivor group. This was how the rescue teams of the other student teams were able to beat this team in a few world scenarios.
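The weakness described above can be made concrete with a small sketch. The numbers, the dictionary layout and the function name `next_target` are invented for illustration. The selection rule is a simplified reading of "move to the nearest grid with sufficiently high concentration of survivors", using accumulated life energy as the measure.

```python
def next_target(grids, threshold):
    """Pick the nearest grid whose accumulated life energy reaches the threshold."""
    candidates = [g for g in grids if sum(g["energies"]) >= threshold]
    return min(candidates, key=lambda g: g["distance"], default=None)


# One strong survivor nearby, three weak survivors farther away (made-up values).
near = {"name": "near", "distance": 2, "energies": [90]}
far = {"name": "far", "distance": 8, "energies": [30, 30, 30]}

# Both grids look identical when only accumulated life energy is compared ...
print(sum(near["energies"]), sum(far["energies"]))

# ... so distance decides, and the team heads for the single strong survivor first.
print(next_target([near, far], threshold=60)["name"])
```

A rule that also looked at the life energy of individual survivors, for instance after an OBSERVE action, could tell the two grids apart, whereas a rule based on the sum alone cannot.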

5. Conclusion and Future Work

We have presented the ARES system, a testbed for rescue agent teams that are developed by students as the major assignment of a beginner's course in multi-agent systems. ARES has been developed especially with teaching in mind, so that on the one hand it lets students focus on the multi-agent issues of cooperation and communication, and on the other hand it fulfills the wishes of instructors, like being able to have a different focus in the assignment every semester. We successfully used ARES in our course, which indicates experimentally that our goals in developing ARES have been fulfilled.

Naturally, there are many ways in which ARES can be improved. Over time, we expect to find a lot of candidates for additional world rules, like starting the rescue agents with the same information versus different information or having fire that spreads versus fire that does not spread (in our first use of ARES, no rescue agent died in a scenario; spreading fire might change this). With some of the instantiations of such rules it will be possible to make the assignment harder in order to reflect, for example, the preparation students got from other courses (which differs from university to university) or to use ARES for both undergraduate and graduate courses. We also see the need for additional testbeds that, like ARES, are targeted at teaching instead of evaluating research ideas. Such testbeds could focus on scenarios in which competition between agents is the main issue. In fact, a possible extension of ARES could be to have the agents of all student teams act in one world scenario, with the possibility of cooperating with each other but also of competing with each other over who rescues more survivors.

References

- Bergen, M.D.; Denzinger, J.; Kidney, J.: Teaching Multi-Agent Systems with the help of ARES: Motivation and Manual, Internal Report 2002-707-10, Department of Computer Science, University of Calgary, 2002.

- Kitano, H.: RoboCup Rescue: A Grand Challenge for Multi-Agent Systems, Proc. ICMAS-2000, IEEE Press, 2000, pp. 5-12.

- Stone, P.; Veloso, M.: Multiagent Systems: A Survey from a Machine Learning Perspective, Autonomous Robots 8(3), 2000.

- Wellman, M.P.; Wurman, P.R.; O'Malley, K.; Bangera, R.; Lin, S.; Reeves, D.; Walsh, W.E.: Designing the Market Game for a Trading Agent Competition, IEEE Internet Computing 5(2), 2001, pp. 43-51.


Jörg Denzinger

Department of Computer Science

University of Calgary

2500 University Drive NW, Calgary, Alberta, Canada T2N 1N4

E-mail: denzinge@cpsc.ucalgary.ca
