
Markerless Garment Capture

Derek Bradley, Tiberiu Popa, Alla Sheffer, Wolfgang Heidrich, Tamy Boubekeur


ACM Transactions on Graphics (Proceedings of SIGGRAPH 2008)

Download: PDF AVI Slides Data

BibTeX:

@ARTICLE{Bradley:2008,
  author = {Derek Bradley and Tiberiu Popa and Alla Sheffer and Wolfgang Heidrich and Tamy Boubekeur},
  title = {Markerless Garment Capture},
  journal = {ACM Trans. Graphics (Proc. SIGGRAPH)},
  year = {2008},
  volume = {27},
  number = {3},
  pages = {99},
}

Abstract

A lot of research has recently focused on the problem of capturing the geometry and motion of garments. Such work usually relies on special markers printed on the fabric to establish temporally coherent correspondences between points on the garment's surface at different times. Unfortunately, this approach is tedious and prevents the capture of off-the-shelf clothing made from interesting fabrics.

In this paper, we describe a marker-free approach to capturing garment motion that avoids these downsides. We establish temporally coherent parameterizations between incomplete geometries that we extract at each timestep with a multiview stereo algorithm. We then fill holes in the geometry using a template. This approach, for the first time, allows us to capture the geometry and motion of unpatterned, off-the-shelf garments made from a range of different fabrics.


Overview

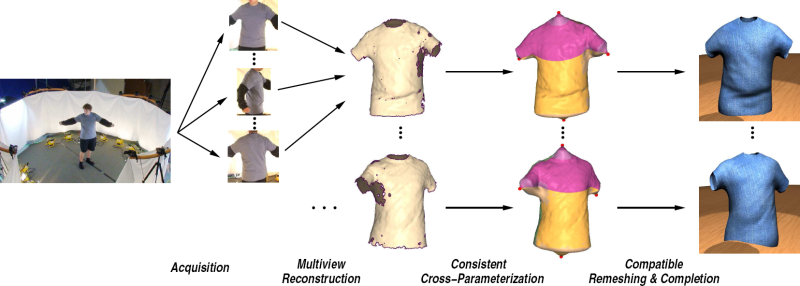

Our method for markerless garment capture consists of four key components:

- Acquisition: We deploy a unique 360° high-resolution acquisition setup using sixteen inexpensive high-definition consumer video cameras. Our lighting setup avoids strong shadows by using indirect illumination. The cameras are set up in a ring configuration in order to capture the full garment undergoing a range of motions.

- Multiview Reconstruction: The input images from the sixteen viewpoints are fed into a custom-designed multiview stereo reconstruction algorithm to obtain an initial 3D mesh for each frame. The resulting meshes contain holes in regions occluded from the cameras, and each mesh has a different connectivity.

- Consistent Cross-Parameterization: We then compute a consistent cross-parameterization among all input meshes. To this end, we use strategically positioned off-surface anchors that correspond to natural boundaries of the garment. Depending on the quality of boundaries extracted in the multiview stereo step, the anchors are placed either fully automatically, or with a small amount of user intervention.

- Compatible Remeshing and Surface Completion: Finally, we introduce an effective mechanism for compatible remeshing and hole completion using a template mesh. The template is constructed from a photo of the garment laid out on a flat surface. We cross-parameterize the template with the input meshes, and then deform it into the pose of each frame mesh. We use the deformed template as the final per-frame surface mesh. As a result, all the reconstructed per-frame meshes have the same compatible connectivity, and the holes are completed using the appropriate garment geometry.
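The multiview stereo algorithm used here is custom-designed and is not described on this page. As a generic illustration of one step that any such pipeline relies on, the sketch below triangulates a single 3D point from its viewing rays in several calibrated cameras by finding the point with the smallest summed squared distance to all rays. It assumes camera centres and ray directions are already known and that pixel correspondences have already been found; the function name `triangulate_rays` is hypothetical, and this is not the authors' reconstruction method.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate_rays(origins, directions):
    """Return the point minimizing the sum of squared distances to all rays.

    Each ray starts at a camera centre o and points along d. With
    P = I - d d^T (projection orthogonal to the ray), the minimizer x
    solves the 3x3 system (sum of P) x = sum of (P o).
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for o, d in zip(origins, directions):
        n = math.sqrt(sum(c * c for c in d))
        d = [c / n for c in d]  # unit ray direction
        for i in range(3):
            for j in range(3):
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += p_ij
                b[i] += p_ij * o[j]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; the point is undetermined")
    # Solve the 3x3 system with Cramer's rule.
    x = []
    for k in range(3):
        Ak = [row[:] for row in A]
        for i in range(3):
            Ak[i][k] = b[i]
        x.append(_det3(Ak) / det)
    return tuple(x)


# Usage: sixteen cameras evenly spaced on a ring of radius 2, all seeing the
# same (hypothetical) surface point.
target = (0.1, 0.2, 0.3)
cameras = [(2.0 * math.cos(2 * math.pi * k / 16),
            2.0 * math.sin(2 * math.pi * k / 16), 0.0) for k in range(16)]
rays = [tuple(t - c for t, c in zip(target, cam)) for cam in cameras]
point = triangulate_rays(cameras, rays)
```

With exact, noise-free rays the recovered `point` coincides with `target`; with real matches the rays no longer meet, and the least-squares point is a reasonable compromise between them.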
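The per-frame stereo meshes are open: occluded regions leave holes, and the garment's natural boundaries (for example a neckline, sleeve openings, or a hem) are open edges as well. A common way to find all of these is to collect the edges used by exactly one triangle and chain them into loops. The sketch below does this for a manifold, consistently oriented triangle mesh given as vertex-index triples; the function name `boundary_loops` is hypothetical, and deciding which loops are true garment boundaries and which are holes is not shown.

```python
from collections import defaultdict


def boundary_loops(faces):
    """Return the boundary loops of a triangle mesh as lists of vertex ids.

    Assumes a manifold, consistently oriented mesh, so every boundary vertex
    has exactly one outgoing boundary half-edge.
    """
    # Count how many triangles use each undirected edge.
    count = defaultdict(int)
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            count[(min(u, v), max(u, v))] += 1
    # Boundary half-edges (used by one triangle), keeping that face's orientation.
    nxt = {}
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            if count[(min(u, v), max(u, v))] == 1:
                nxt[u] = v
    # Walk the half-edges until each loop closes on itself.
    loops = []
    visited = set()
    for start in nxt:
        if start in visited:
            continue
        loop = []
        v = start
        while v not in visited:
            visited.add(v)
            loop.append(v)
            v = nxt[v]
        loops.append(loop)
    return loops
```

A closed surface yields no loops, while a garment scan typically yields several: one per natural opening plus one per hole.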
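Once the template and a frame mesh share a parameterization, each template vertex can be located on the frame mesh, and because the template's triangles are reused unchanged, every frame ends up with the same connectivity. The sketch below shows only that placement step. It assumes a hypothetical input format in which each template vertex has already been mapped to a frame triangle plus barycentric weights, and the name `place_template_vertices` is likewise hypothetical. It does not perform the template deformation the method uses to fill holes, where no frame triangle exists.

```python
def place_template_vertices(samples, frame_vertices, frame_faces):
    """Map template vertices onto a frame mesh.

    samples[i] = (face index into frame_faces, (w0, w1, w2)) for template
    vertex i, with w0 + w1 + w2 == 1 (hypothetical format assumed to come
    from a cross-parameterization). Returns one 3D position per vertex.
    """
    positions = []
    for face_id, (w0, w1, w2) in samples:
        i, j, k = frame_faces[face_id]
        p, q, r = frame_vertices[i], frame_vertices[j], frame_vertices[k]
        # Barycentric interpolation of the triangle's corner positions.
        positions.append(tuple(w0 * p[t] + w1 * q[t] + w2 * r[t] for t in range(3)))
    return positions


# Usage: a unit square made of two triangles stands in for a frame mesh.
frame_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
frame_faces = [(0, 1, 2), (0, 2, 3)]
samples = [(0, (1 / 3, 1 / 3, 1 / 3)), (1, (0.5, 0.0, 0.5))]
positions = place_template_vertices(samples, frame_vertices, frame_faces)
# The template's own face list is reused as-is for every frame.
```

Keeping the template's face list fixed and only moving its vertices is what makes the per-frame meshes directly comparable, for example for texture transfer or motion editing.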

Results

Capture results for five frames of a T-shirt. From top to bottom: input images, captured geometry, and the T-shirt replaced in the original images.

Capture results for a fleece vest. From left to right: one input frame, captured geometry, the vest replaced in the original image, and the scene augmented by adding a light source and making the vest more specular.

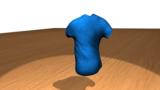

Capture results for five frames of a blue dress. Input images (top) and reconstructed geometry (bottom).

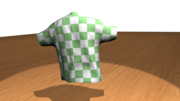

Capture results for two frames of a large T-shirt. Input images (top) and reconstructed geometry (bottom).

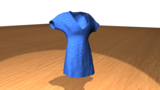

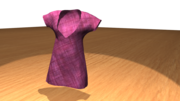

Capture results for two frames of a pink dress. Input images (top) and reconstructed geometry (bottom).

Capture result for a long-sleeve nylon-shell down jacket.