
Accurate Multi-View Reconstruction

Derek Bradley, Tamy Boubekeur, Wolfgang Heidrich



Abstract

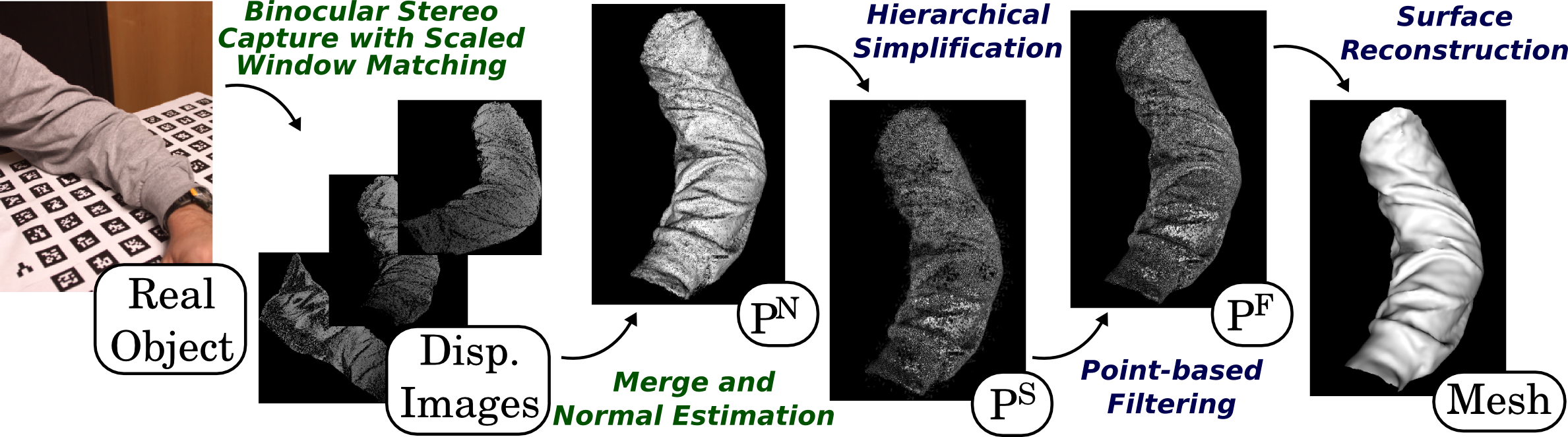

This paper presents a new algorithm for multi-view reconstruction that demonstrates both accuracy and efficiency. Our method is based on robust binocular stereo matching, followed by adaptive point-based filtering of the merged point clouds, and efficient, high-quality mesh generation. All aspects of our method are designed to be highly scalable with the number of views. Our technique produces the most accurate results among current algorithms for a sparse number of viewpoints according to the Middlebury datasets. Additionally, our method is the most efficient among non-GPU algorithms for the same datasets. Finally, our scaled-window matching technique also excels at reconstructing deformable objects with high-curvature surfaces, which we demonstrate with a number of examples.

Overview

The binocular stereo part of our algorithm creates depth maps from pairs of adjacent viewpoints using a scaled-window matching technique. The depth images are converted to 3D points and merged into a single dense point cloud. The second part of the algorithm reconstructs a triangular mesh from the initial point cloud by downsampling, cleaning, and meshing.
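The step that turns depth images into a merged point cloud can be illustrated with a minimal sketch. It assumes a simple pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy` and a camera-to-world rotation and translation per view. These parameter names, the function names, and the convention that invalid pixels are `None` or non-positive are all assumptions made for illustration; they are not taken from the paper, and the downsampling, cleaning, and meshing stages are not shown.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_depth_map(
    depth: Sequence[Sequence[Optional[float]]],
    fx: float, fy: float, cx: float, cy: float,
) -> List[Point]:
    """Convert a depth map into camera-space 3D points (pinhole model).

    Pixels whose depth is None or <= 0 are treated as invalid and skipped
    (an assumed convention for this sketch).
    """
    points: List[Point] = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z is None or z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def to_world(
    points: Sequence[Point],
    rotation: Sequence[Sequence[float]],     # 3x3 camera-to-world rotation
    translation: Sequence[float],            # camera position offset
) -> List[Point]:
    """Apply the rigid transform X_world = R * X_camera + t to each point."""
    world: List[Point] = []
    for x, y, z in points:
        world.append(tuple(
            rotation[r][0] * x + rotation[r][1] * y + rotation[r][2] * z
            + translation[r]
            for r in range(3)
        ))
    return world


def merge_clouds(clouds: Sequence[Sequence[Point]]) -> List[Point]:
    """Concatenate per-view point sets into a single point cloud."""
    merged: List[Point] = []
    for cloud in clouds:
        merged.extend(cloud)
    return merged
```

In this sketch each stereo pair contributes one call to `backproject_depth_map` followed by `to_world`, and `merge_clouds` simply concatenates the results; any filtering of the merged cloud would happen afterwards.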

Results


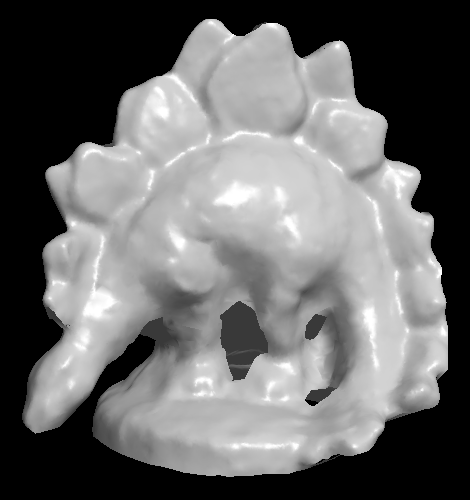

Dino reconstruction from sparse (16) viewpoints. Left: one input image (640x480 resolution). Middle: 944,852 points generated by the binocular stereo stage. Right: reconstructed model (186,254 triangles).

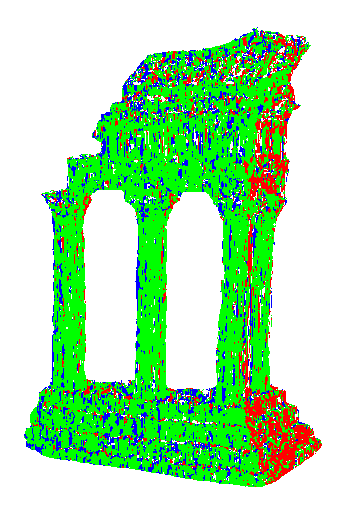

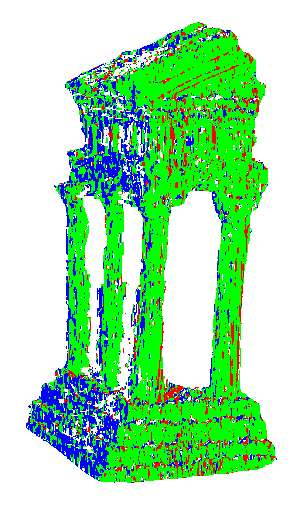

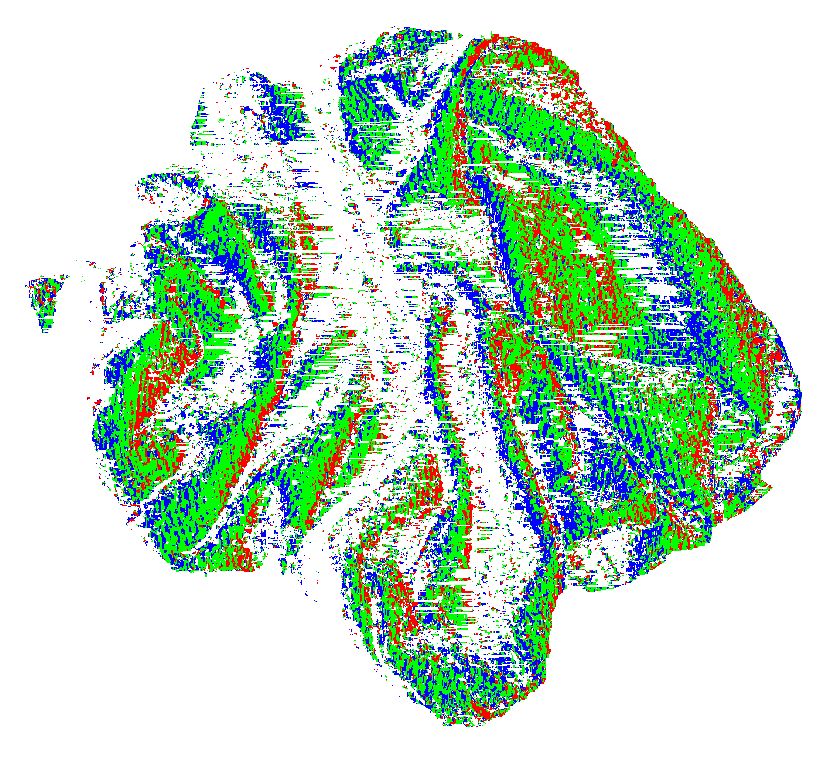

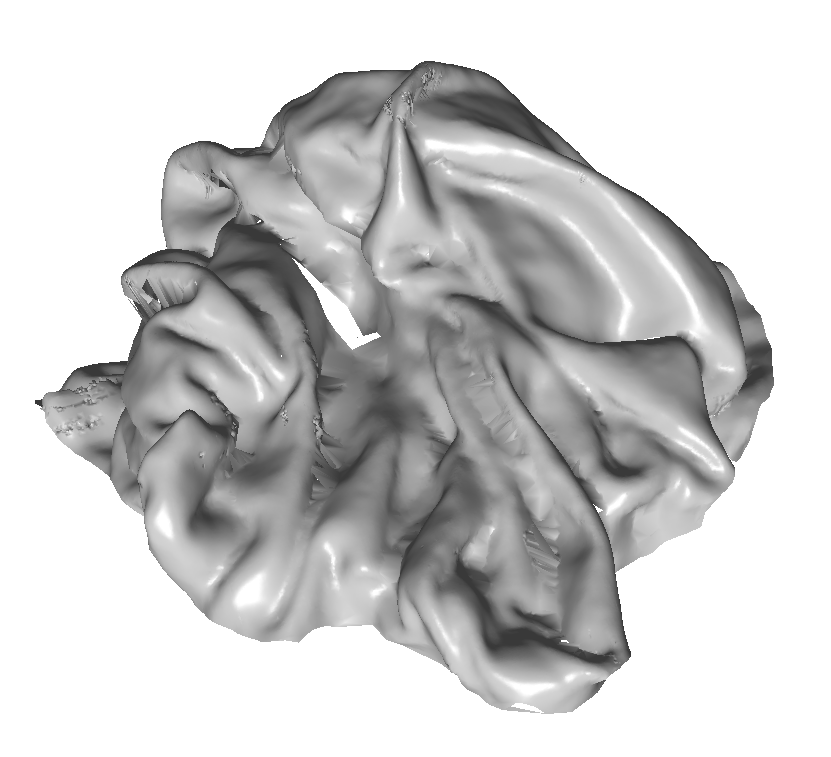

Scaled window matching on the templeRing dataset. Left: one of 47 input images (640x480 resolution). Middle: two disparity images re-colored to show which horizontal window scale was used for matching. Red = sqrt(2), Green = 1, and Blue = 1/sqrt(2). Right: reconstructed model (869,803 triangles).
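The idea of matching with horizontally scaled windows can be sketched on a single pair of scanlines. The sketch below assumes normalized cross-correlation (NCC) as the similarity score, linear interpolation for fractional sample positions, and the convention that a pixel at `x_ref` in the reference row appears at `x_ref - d` in the other row. Only the three scale factors come from the caption above; the function names, the score, and the search loop are illustrative assumptions rather than the paper's implementation.

```python
import math

# Horizontal window scales named in the caption: sqrt(2), 1, 1/sqrt(2).
SCALES = (math.sqrt(2.0), 1.0, 1.0 / math.sqrt(2.0))


def sample(row, x):
    """Linearly interpolate a scanline (length >= 2) at position x, clamped."""
    x = min(max(x, 0.0), len(row) - 1.0)
    i = min(int(x), len(row) - 2)
    t = x - i
    return (1.0 - t) * row[i] + t * row[i + 1]


def ncc(a, b):
    """Normalized cross-correlation of two equal-length windows."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if denom == 0.0:
        return 0.0  # flat window: no usable correlation
    return sum(p * q for p, q in zip(da, db)) / denom


def best_scaled_match(ref_row, other_row, x_ref, half_width, disparities,
                      scales=SCALES):
    """Return (score, disparity, scale) with the highest NCC.

    The reference window is sampled at unit spacing; the window in the
    other row is sampled at spacing `scale`, i.e. stretched or squeezed
    horizontally around the candidate match position.
    """
    offsets = range(-half_width, half_width + 1)
    ref_win = [sample(ref_row, x_ref + o) for o in offsets]
    best = (-2.0, None, None)
    for d in disparities:
        for s in scales:
            win = [sample(other_row, x_ref - d + s * o) for o in offsets]
            score = ncc(ref_win, win)
            if score > best[0]:
                best = (score, d, s)
    return best
```

Recording the winning scale per pixel gives the kind of information the re-colored disparity images display, with one color per scale.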

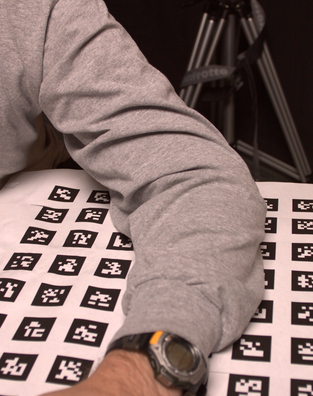

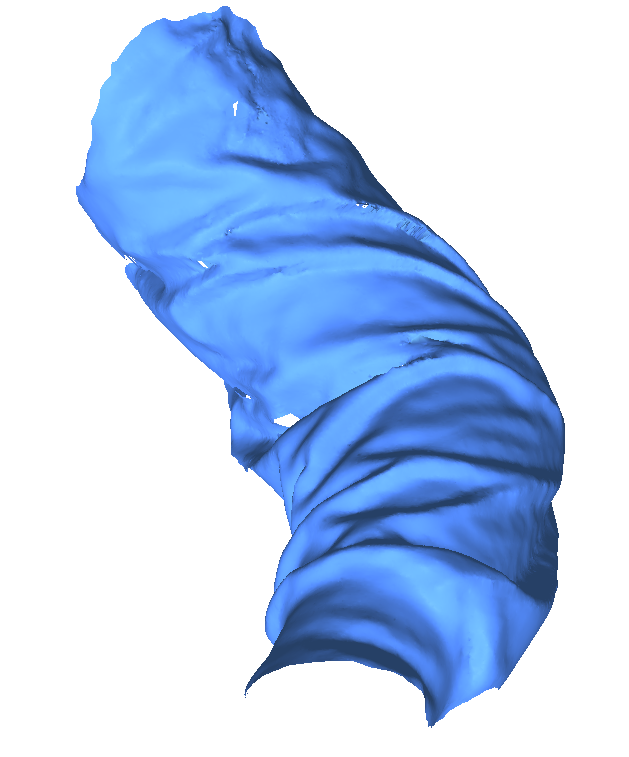

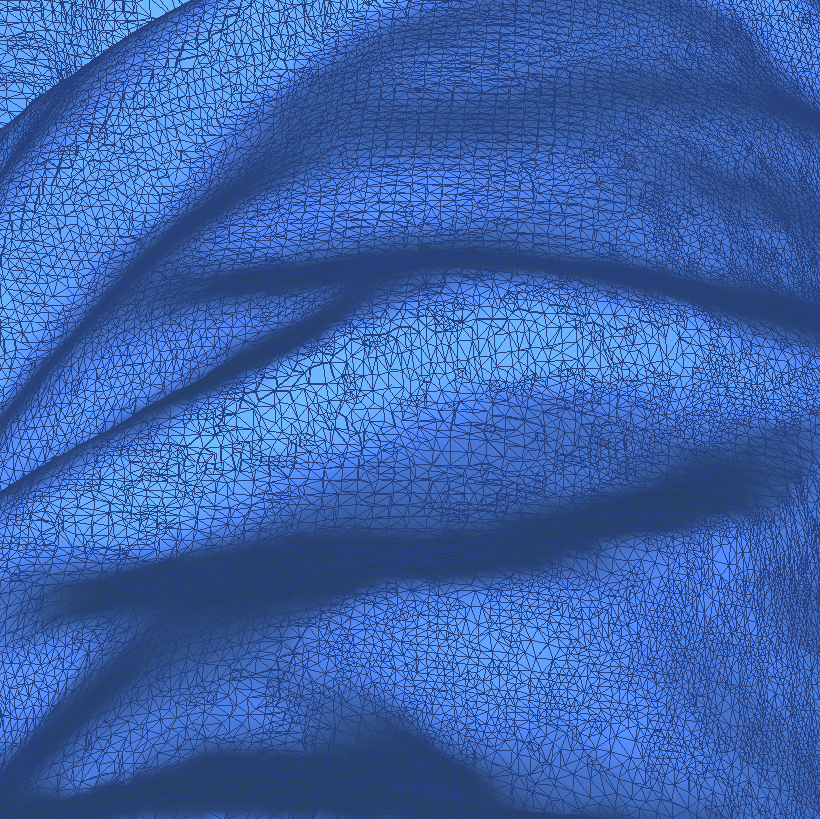

Sleeve reconstruction. Left: cropped region of 1 of 14 input images (2048x1364). Middle: final reconstruction (290,171 triangles). Right: zoom region to show fine detail.

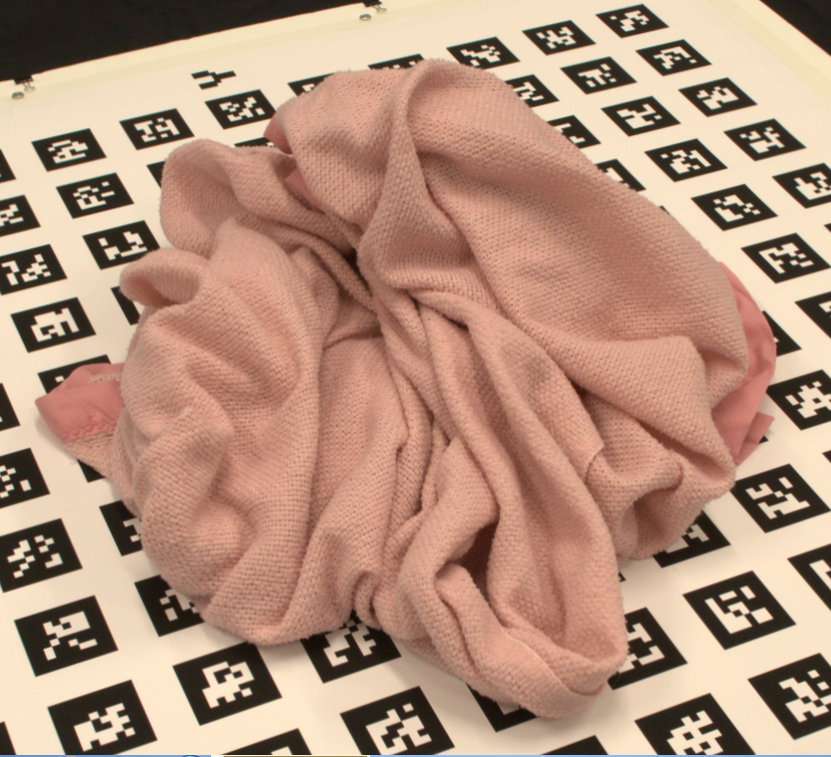

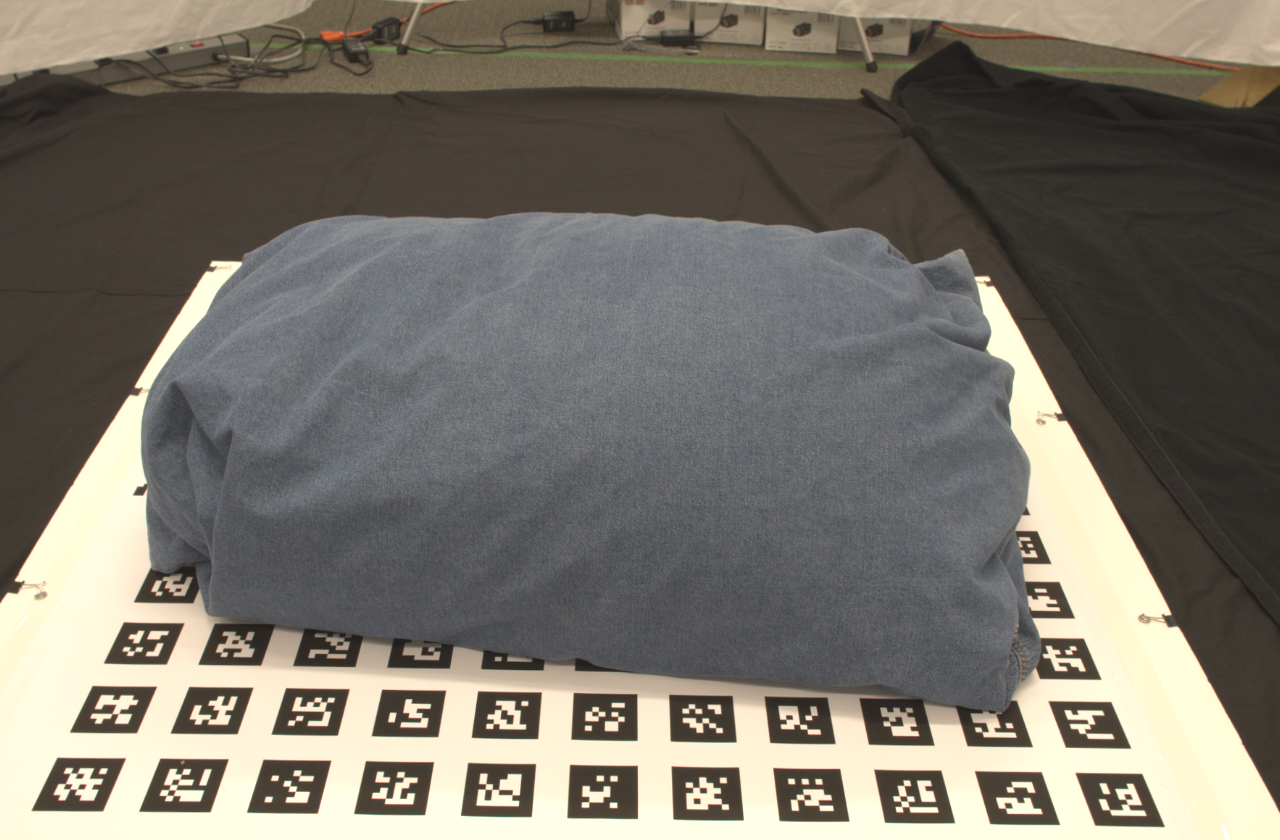

Crumpled blanket. Left: 1 of 16 input images (1954x1301). Middle: re-colored disparity image to show window scales. Right: final reconstruction (600,468 triangles).

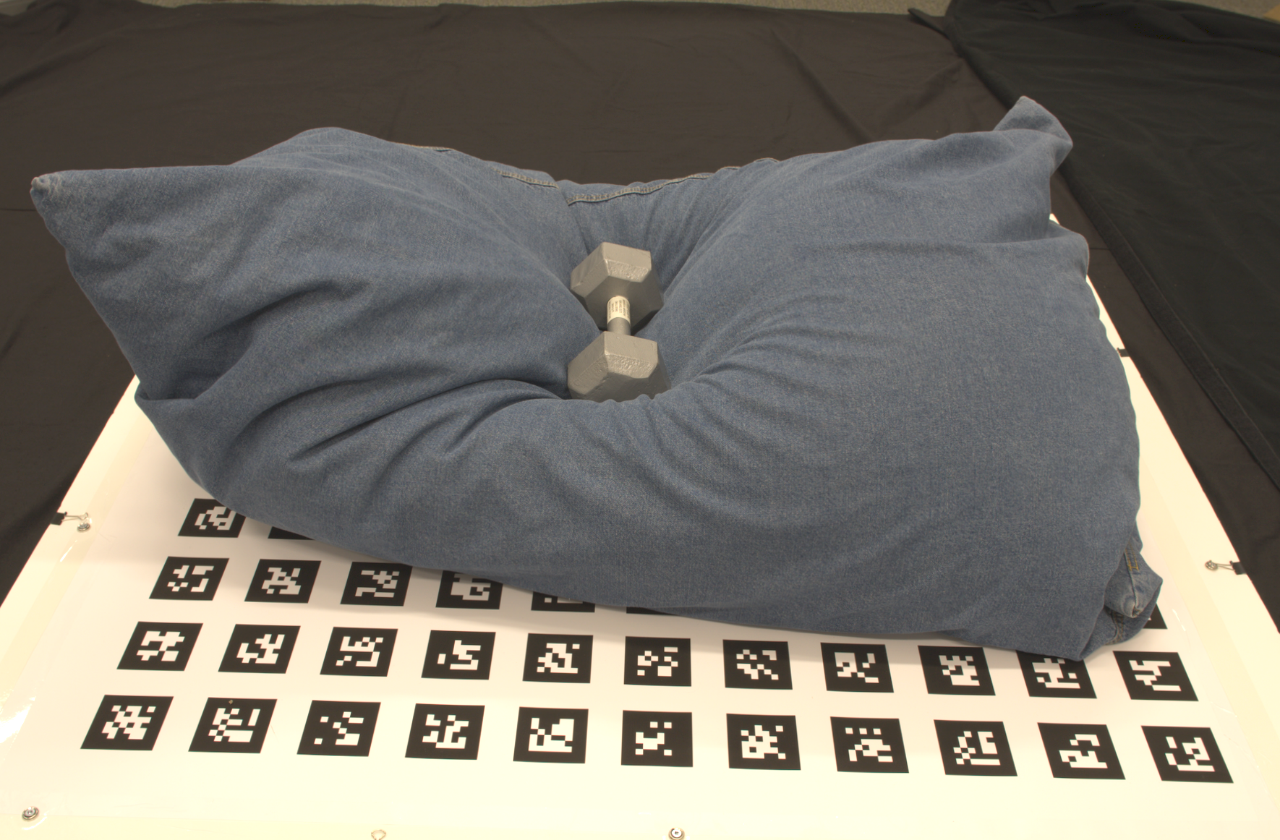

Bean-bag chair reconstruction, before (top) and after (bottom) deformation. Left: 1 of 16 input images (1954x1301). Right: final reconstruction (4,045,820 and 3,370,641 triangles).