How does Hydra work?

The key idea behind Hydra is that a new candidate algorithm should be preferred exactly to the extent that it improves upon the performance of a (slightly idealized) portfolio. Hydra is thus implemented by changing the performance measure given to the algorithm configuration. A candidate algorithm is scored with its actual performance in cases where it is better than the existing portfolio, but with the portfolios in cases where it is worse. Thus an algorithm is not penalized for bad performance on instances for which it should not be selected anyway, but is only rewarded to the extent that it outperforms the portfolio. We note that our method implicitly assumes that a new algorithm will be selected if and only if it would outperform the portfolios previous selection.

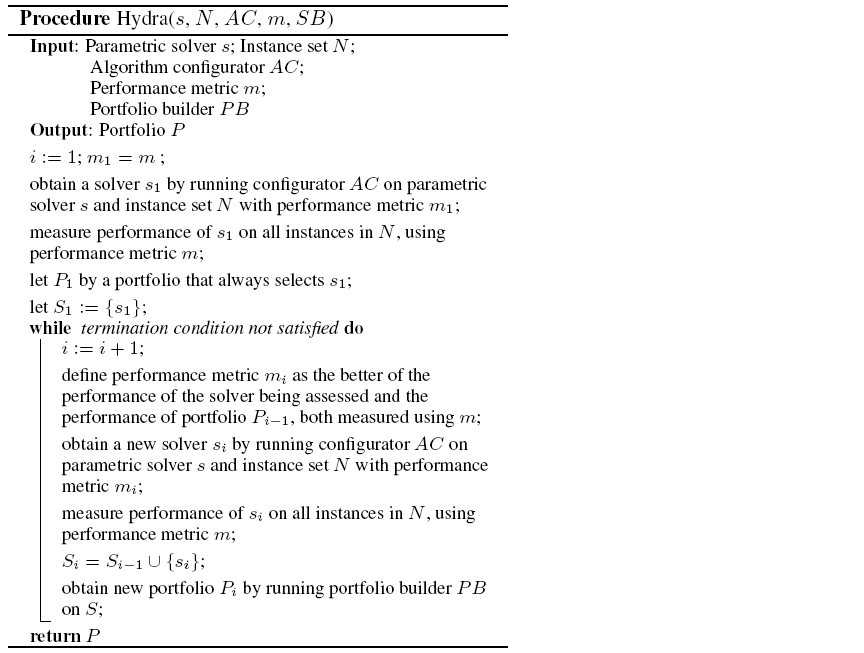

As shown in the pseudo-code, Hydra takes five inputs: a parameterized solver , a set of training problem instances , an algorithm configuration procedure with a performance metricmto be optimized, and a procedure for building portfolio-based algorithm selectors .

In its first iteration, Hydra uses configurator to produce a configuration of , dubbed , that is optimized on training set according to performance metric . Solver is then run on all instances of in order to collect data that can eventually be input to ; runs performed during the earlier configuration process can be cached and reused as appropriate. We define portfolio as the portfolio that always selects , and solver set as .

Then, in each subsequent iteration , Hydra defines a modified performance metric as the better of the performance of the solver being assessed and the performance of the current portfolio, both measured according to . The configurator is run to find a configuration of that optimizes on the entire training set . As before, the resulting solver is evaluated on the entire set and then added to the solver set . We then use to construct a new portfolio from the given set of solvers. We note that in each iteration of Hydra, the size of the candidate solver set grows by one; however, may drop solvers that do not contribute to the performance of portfolio (this is done, e.g., in SATzilla). Hydra can be terminated using various criteria, such as a user-specified bound on the number of iterations and/or a total computation-time budget.

The algorithm configuration procedure used within Hydra must be able to deal efficiently with configurations having equal performance on some or all instances, because such configurations can be expected to be encountered frequently. (For example, all configurations dominated by portfolio will have equal performance under performance metric ). It is also possible to exploit for computational gain when optimizing runtime. Specifically, a run of on some instance in can be terminated during configuration once its runtime reaches portfolio 's runtime on .